Efficient API-Driven LLM Solution for Real-Time Applications

Magistral Medium 2506 is a large language model developed by Mistral AI, designed to balance performance and scalability.

Positioned as a mid-tier model in the Mistral family, it serves as an excellent choice for businesses looking to integrate advanced AI capabilities without incurring the costs associated with flagship models. This model is particularly suited for production environments, real-time applications, and generative AI systems demanding robust performance and rapid response times.

Key Features of Magistral Medium 2506

Latency:

Magistral Medium 2506 offers low latency, making it well suited to real-time interactions and dynamic applications. Developers can rely on its processing speed to deliver responsive user experiences.

Context Size:

With a sizable context window, this model handles extensive text inputs efficiently, enhancing capabilities in tasks like document analysis and dialogue context retention.
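Long inputs still need to fit within whatever context limit applies to your deployment. The sketch below shows one way to pack paragraphs into chunks under a token budget. The 4-characters-per-token ratio and the `max_tokens` value are illustrative assumptions, not published figures for Magistral Medium 2506; a real tokenizer will give different counts.

```python
from __future__ import annotations


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate (assumed ratio); real tokenizers will differ."""
    if not text:
        return 0
    return max(1, int(len(text) / chars_per_token))


def split_into_chunks(paragraphs: list[str], max_tokens: int) -> list[list[str]]:
    """Greedily pack paragraphs into chunks that stay within max_tokens.

    A single paragraph larger than the budget is placed in a chunk of its own
    rather than being split, so callers may want to pre-split very long ones.
    """
    chunks: list[list[str]] = []
    current: list[str] = []
    used = 0
    for para in paragraphs:
        cost = estimate_tokens(para)
        # Start a new chunk when this paragraph would push us over the budget.
        if current and used + cost > max_tokens:
            chunks.append(current)
            current, used = [], 0
        current.append(para)
        used += cost
    if current:
        chunks.append(current)
    return chunks
```

Each chunk can then be sent as a separate request, for example to summarize a document section by section before combining the results.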

Alignment/Safety:

Designed with alignment and safety in mind, Magistral Medium 2506 employs advanced filtering and moderation techniques to ensure outputs are contextually appropriate and adhere to user guidelines.

Reasoning Ability:

The model excels in complex reasoning tasks, enabling accurate decision-making support across various industries.

Language Support:

Supporting multiple languages, Magistral Medium 2506 is adaptable to diverse linguistic requirements, making it a practical solution for international applications.

Coding Skills:

The model's understanding of programming languages lets it assist with code generation and debugging, which benefits developers and software teams.

Real-Time Readiness and Deployment Flexibility:

Magistral Medium 2506 is ready for deployment in real-time environments and accommodates a range of integration types, so it can operate within existing infrastructure.

Developer Experience:

Developers benefit from seamless API integration, detailed documentation, and community support when using Magistral Medium 2506.

Use Cases for Magistral Medium 2506

Chatbots (SaaS, Customer Support):

Integrating Magistral Medium 2506 enables the development of advanced chatbots capable of real-time interactions and personalized customer support across multiple platforms.
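A real-time chatbot usually has to keep its conversation history short enough to send with every request. The following is a minimal sketch of a rolling history, assuming the common role/content message format used by many chat-style APIs; the function name `trim_history` and the turn limit are hypothetical choices for illustration.

```python
from __future__ import annotations


def trim_history(messages: list[dict], max_turns: int) -> list[dict]:
    """Keep the first system message (if any) plus the newest max_turns other messages."""
    system = [m for m in messages if m["role"] == "system"][:1]
    rest = [m for m in messages if m["role"] != "system"]
    if max_turns <= 0:
        # Guard against rest[-0:], which would return the whole list.
        return system
    return system + rest[-max_turns:]


history = [
    {"role": "system", "content": "You are a helpful support agent."},
    {"role": "user", "content": "My invoice is wrong."},
    {"role": "assistant", "content": "Sorry about that. Which invoice?"},
    {"role": "user", "content": "The one from March."},
]
recent = trim_history(history, max_turns=2)
```

Here `recent` holds the system message followed by the last two messages, which keeps the assistant's instructions in place while older turns are dropped.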

Code Generation (IDEs, AI Dev Tools):

The model's coding proficiency makes it a strong candidate for use in integrated development environments (IDEs) and AI development tools, where it can help with code suggestion, completion, and error correction.

Document Summarization (Legal Tech, Research):

Magistral Medium 2506 is adept at summarizing large volumes of text, providing critical insights for legal tech firms and research institutions alike.

Workflow Automation (Internal Ops, CRM, Product Reports):

Organizations can leverage this model to automate repetitive tasks in workflows, improving efficiency in operations, CRM systems, and product report generation.

Knowledge Base Search (Enterprise Data, Onboarding):

Enhance enterprise data accessibility by employing Magistral Medium 2506 to streamline searches and manage knowledge base queries, facilitating smoother onboarding processes.

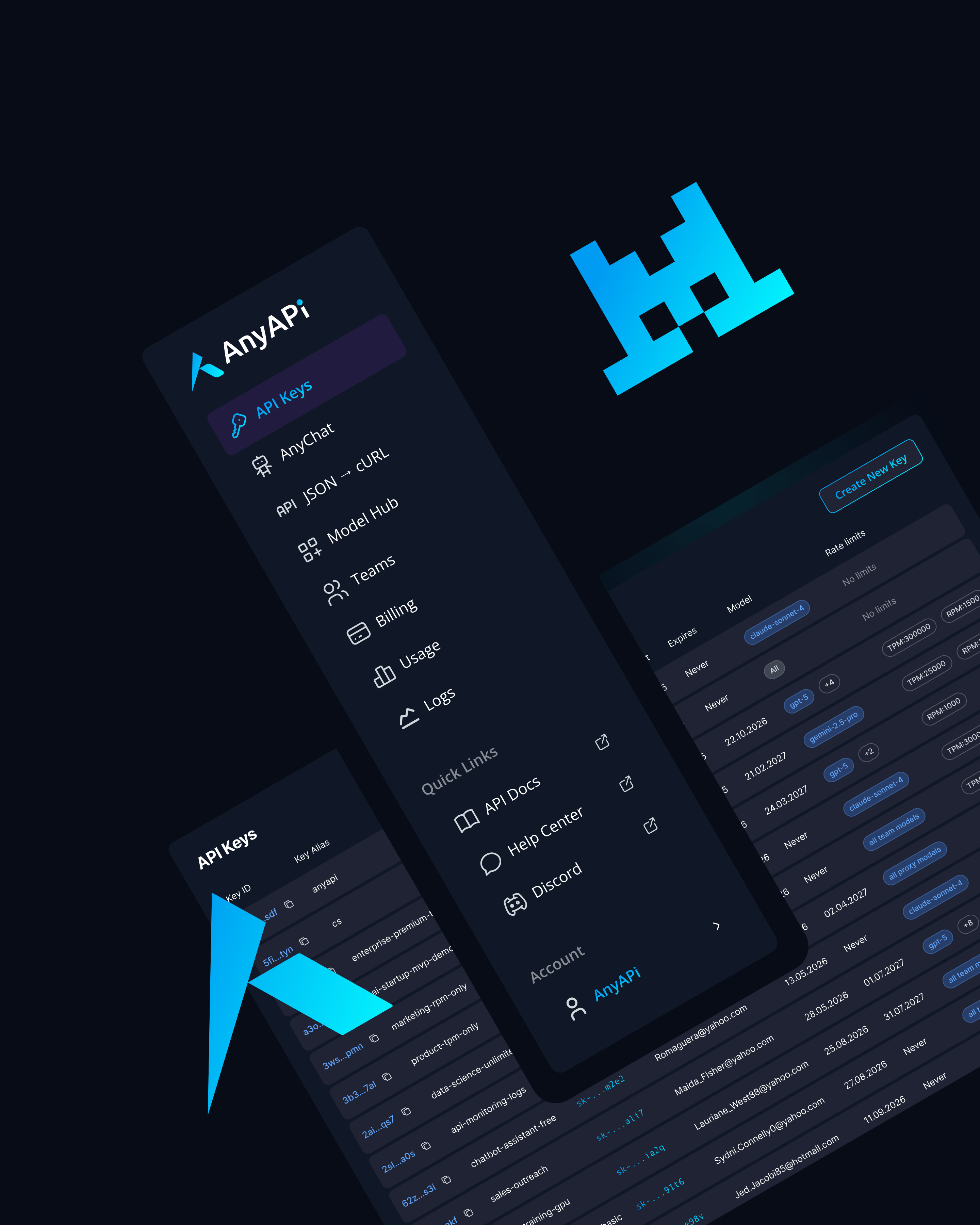

Why Use Magistral Medium 2506 via AnyAPI.ai

AnyAPI.ai adds value to Magistral Medium 2506 by providing a unified API platform with access to multiple LLMs. It offers one-click onboarding, no vendor lock-in, flexible usage-based billing, developer tools, and production-grade infrastructure.

Unlike competitors such as OpenRouter and AIMLAPI, AnyAPI.ai provides unrivaled provisioning, unified support, and comprehensive analytics for an optimized development experience.

Start Using Magistral Medium 2506 via API Today

Integrate Magistral Medium 2506 via AnyAPI.ai and start building today. Sign up, get your API key, and launch in minutes to transform your business operations with state-of-the-art language model technology.
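As a rough illustration of what a first request might look like, the sketch below builds (but does not send) an HTTP request using only the Python standard library. The endpoint URL, the model identifier, and the chat-style payload shape are all placeholders assumed for this example; check the AnyAPI.ai documentation for the actual values.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the values from the provider's docs.
API_URL = "https://api.anyapi.example/v1/chat/completions"
MODEL_ID = "magistral-medium-2506"


def build_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build a POST request with a bearer token and a JSON chat payload."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_request("YOUR_API_KEY", "Summarize this paragraph in one sentence.")
# To send it once the real endpoint and key are in place:
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(json.loads(response.read()))
```

Keeping request construction separate from sending makes it easy to inspect or log the payload before any network call is made.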