Open-Weight, High-Performance LLM for Scalable, Aligned API Access

Llama 3.3 70B Instruct is the instruction-tuned version of Meta’s 70-billion-parameter Llama 3.3 model, designed for natural language generation, reasoning, and task completion. Released with open weights and tuned for alignment, it offers an accessible, production-ready alternative to proprietary LLMs.

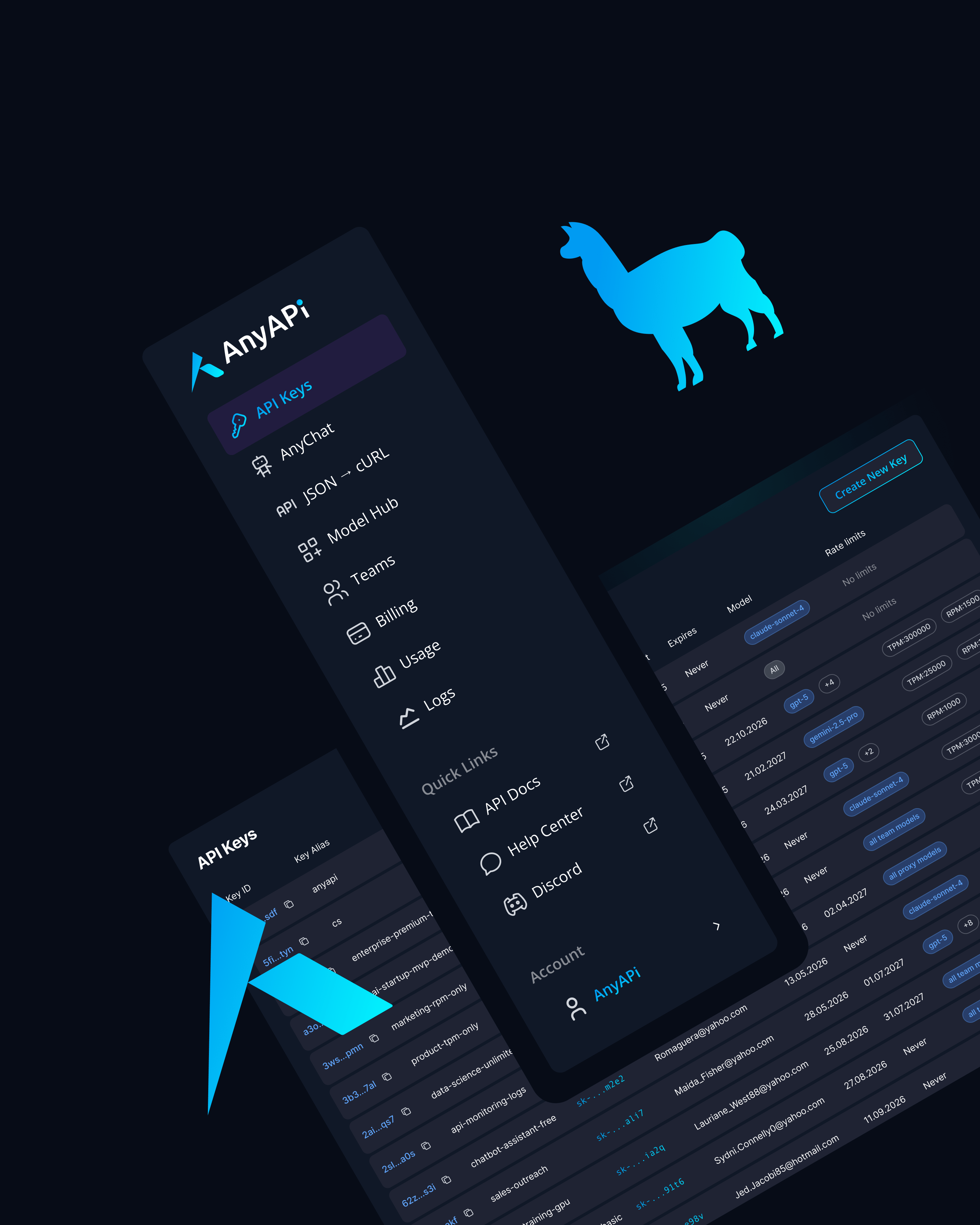

Ideal for developers, startups, and ML teams, Llama 3.3 70B Instruct delivers balanced performance in accuracy, coherence, and safety—accessible via API through platforms like AnyAPI.ai or deployable on-premises for full-stack control.

Key Features of Llama 3.3 70B Instruct

70B Parameter Model

Offers high output fluency and reasoning ability across complex prompts, thanks to its large-scale architecture and instruction-tuned training pipeline.

Open-Weight and Self-Hostable

Available under the Llama 3.3 Community License, which permits commercial use subject to its terms, Llama 3.3 70B can be deployed in private clouds, VPCs, or on-premises infrastructure, or accessed through AnyAPI.ai for hosted inference.

Instruction-Tuned for Alignment

Fine-tuned to follow instructions, produce output in the requested format, and generate safe, context-aware responses across business, education, and development use cases.
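Instruction tuning relies on a fixed chat format in which each turn is delimited by special tokens. The sketch below renders a message list into a simplified version of the Llama 3 prompt layout (header tokens around the role, `<|eot_id|>` closing each turn, and an open assistant header to cue generation). It is an illustration only: the official template shipped with the tokenizer may add extra system-prompt lines (such as a knowledge-cutoff date), so real code should prefer the tokenizer's own `apply_chat_template`. `render_llama3_prompt` is a hypothetical helper.

```python
from typing import Dict, List

def render_llama3_prompt(messages: List[Dict[str, str]]) -> str:
    """Render chat messages into a simplified Llama 3 prompt string.

    Each turn is wrapped in header tokens and closed with <|eot_id|>;
    the trailing assistant header cues the model to generate its reply.
    """
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        role = msg["role"]
        if role not in ("system", "user", "assistant"):
            raise ValueError(f"unsupported role: {role}")
        parts.append(
            f"<|start_header_id|>{role}<|end_header_id|>\n\n"
            f"{msg['content'].strip()}<|eot_id|>"
        )
    # Open an assistant turn so generation continues from here.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)

if __name__ == "__main__":
    print(render_llama3_prompt([
        {"role": "system", "content": "Answer in one sentence."},
        {"role": "user", "content": "What is RAG?"},
    ]))
```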

Strong Code and Reasoning Support

Performs well on code generation, math, and structured logic tasks, making it suitable for developer tools, assistants, and automation agents.

Multilingual Support

Officially supports eight languages (English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai), making it viable for international apps and localization workflows.

Use Cases for Llama 3.3 70B Instruct

AI Copilots and Coding Assistants

Deploy Llama 3.3 70B Instruct in dev environments to write code, explain snippets, and assist with debugging in Python, JavaScript, and more.
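Coding assistants usually need to pull code out of a chat reply before running or inserting it. A minimal sketch, assuming the model wraps code in Markdown-style triple-backtick fences (a common convention, not a guarantee); `extract_code_blocks` is a hypothetical helper.

```python
import re
from typing import List, Tuple

# Built programmatically so this snippet can itself live inside a Markdown fence.
FENCE = "`" * 3
_BLOCK_RE = re.compile(FENCE + r"([\w+-]*)[ \t]*\n(.*?)" + FENCE, re.DOTALL)

def extract_code_blocks(reply: str) -> List[Tuple[str, str]]:
    """Return (language, code) pairs for every fenced block in a model reply.

    The language tag is "" when the fence has none.
    """
    return [(lang, body.rstrip("\n")) for lang, body in _BLOCK_RE.findall(reply)]

if __name__ == "__main__":
    reply = (
        "Here is the fix:\n"
        + FENCE + "python\nprint('hello')\n" + FENCE
        + "\nThat should work."
    )
    print(extract_code_blocks(reply))  # [('python', "print('hello')")]
```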

Internal Knowledge Tools and RAG

Pair with vector databases to enable enterprise-grade retrieval-augmented generation (RAG) systems for support, compliance, or documentation.
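The retrieval step of a RAG pipeline can be sketched without external services. Below, a bag-of-words vector and cosine similarity stand in for a real embedding model and vector database (both simplifying assumptions), and the top-ranked passages are packed into the prompt as context. `embed`, `retrieve`, and `build_rag_prompt` are hypothetical helpers.

```python
import math
import re
from collections import Counter
from typing import List

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_rag_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Prepend retrieved passages so the model answers from them."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

In production, `embed` would call an embedding model and `retrieve` would query the vector database; the prompt-assembly step stays the same.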

Instruction-Following AI Agents

Build structured task agents for scheduling, CRM updates, and email drafting that reliably interpret input prompts.
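A common pattern for such agents is to instruct the model to reply with a JSON action, then validate and dispatch it in code. The sketch assumes the model has been prompted to emit an object like `{"tool": ..., "args": {...}}`; the tool names and handlers are hypothetical placeholders for real scheduling, CRM, or email integrations.

```python
import json
from typing import Callable, Dict

# Hypothetical handlers standing in for real integrations.
def schedule_meeting(title: str, when: str) -> str:
    return f"Scheduled '{title}' at {when}"

def draft_email(to: str, subject: str) -> str:
    return f"Drafted email to {to}: {subject}"

TOOLS: Dict[str, Callable[..., str]] = {
    "schedule_meeting": schedule_meeting,
    "draft_email": draft_email,
}

def dispatch(model_output: str) -> str:
    """Parse a JSON action emitted by the model and run the matching tool."""
    try:
        action = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model did not return valid JSON: {exc}") from exc
    if not isinstance(action, dict):
        raise ValueError("expected a JSON object")
    name = action.get("tool")
    tool = TOOLS.get(name) if isinstance(name, str) else None
    if tool is None:
        raise ValueError(f"unknown tool: {name!r}")
    args = action.get("args", {})
    if not isinstance(args, dict):
        raise ValueError("'args' must be a JSON object")
    return tool(**args)
```

Rejecting unknown tools and malformed arguments in code, rather than trusting the model, keeps a misformatted reply from triggering an unintended action.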

Content Generation for Marketing or Docs

Produce articles, descriptions, summaries, and FAQs at scale, steering tone, length, and format through system prompts and explicit instructions.

Chatbots and Multilingual Interfaces

Use in user-facing chatbots that need consistent multi-turn behavior and reliable instruction following in English, Spanish, French, and other supported languages.
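Chat requests are typically stateless, so conversational memory comes from resending prior turns within the context window. A minimal sketch of keeping the system prompt plus the most recent turns under a budget; word count is used as a rough stand-in for tokens (an assumption, since real counts depend on the Llama 3 tokenizer), and `approx_tokens` and `trim_history` are hypothetical helpers.

```python
from typing import Dict, List

Message = Dict[str, str]

def approx_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (not the real tokenizer)."""
    return len(text.split())

def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep all system messages plus the newest turns that fit in the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(approx_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    # Walk backwards from the newest turn so recent context wins.
    for m in reversed(turns):
        cost = approx_tokens(m["content"])
        if used + cost > budget:
            break
        kept.append(m)
        used += cost
    return system + list(reversed(kept))
```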

Why Use Llama 3.3 70B Instruct via AnyAPI.ai

API Access Without Hosting Overhead

Access Llama 3.3 70B Instruct through a fully managed API—no need to spin up your own inference clusters.
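A hedged sketch of preparing a call to a hosted chat endpoint. It assumes an OpenAI-style chat-completions interface with bearer-token authentication; the base URL and model identifier are placeholders, not documented AnyAPI.ai values, so check the provider's API reference before use. Self-hosted OpenAI-compatible servers such as vLLM accept the same request shape, so moving between hosted and local inference is often just a change of `base_url`.

```python
import json
import urllib.request
from typing import Dict, List

# Placeholders: replace with the values from your provider's API reference.
BASE_URL = "https://api.example.com/v1"   # hypothetical endpoint
MODEL_ID = "llama-3.3-70b-instruct"       # hypothetical model identifier

def build_chat_request(api_key: str, messages: List[Dict[str, str]],
                       base_url: str = BASE_URL, model: str = MODEL_ID,
                       temperature: float = 0.2,
                       max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions POST request."""
    payload = {
        "model": model,
        "messages": messages,
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For a local vLLM server, `base_url` would typically be `http://localhost:8000/v1` and `model` the served model name, which defaults to the Hugging Face repository ID (`meta-llama/Llama-3.3-70B-Instruct`).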

Unified API Across Open and Proprietary Models

Compare and switch between Llama, GPT, Claude, and Gemini using one SDK and one billing model.
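With one request format across models, switching or falling back comes down to changing the `model` field. A minimal sketch of ordered fallback; the model identifiers are hypothetical, and `send` is any callable you supply (for example, a wrapper around an HTTP client), which keeps the routing logic testable without network access.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical identifiers; use the exact names listed by your provider.
MODEL_PREFERENCE = ["llama-3.3-70b-instruct", "gpt-4o", "claude-3-5-sonnet"]

def complete_with_fallback(
    messages: List[Dict[str, str]],
    send: Callable[[dict], str],
    models: Sequence[str] = MODEL_PREFERENCE,
) -> Tuple[str, str]:
    """Try each model in order; return (model, reply) from the first that succeeds."""
    errors = []
    for model in models:
        payload = {"model": model, "messages": messages}
        try:
            return model, send(payload)
        except Exception as exc:  # e.g. timeouts, rate limits, server errors
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

Catching every exception is deliberately broad for a sketch; a production router would usually retry only on errors it knows to be transient.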

No Vendor Lock-In

Enjoy the freedom of open weights with the convenience of AnyAPI.ai’s infrastructure.

Usage-Based Billing and Analytics

Track usage, manage tokens, and scale with demand using built-in analytics and transparent pricing.
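Usage-based billing is driven by token counts. A minimal sketch that totals cost from OpenAI-style `usage` objects with `prompt_tokens` and `completion_tokens` fields (an assumed response shape); the per-million-token prices are made-up placeholders, not AnyAPI.ai pricing. `Decimal` avoids floating-point drift when summing many small charges.

```python
from decimal import Decimal
from typing import Any, Dict, Iterable

# Placeholder prices in USD per million tokens; NOT real pricing.
PRICE_PER_M_INPUT = Decimal("0.50")
PRICE_PER_M_OUTPUT = Decimal("0.75")

def request_cost(usage: Dict[str, int]) -> Decimal:
    """Cost of one request from its token usage."""
    inp = Decimal(usage.get("prompt_tokens", 0))
    out = Decimal(usage.get("completion_tokens", 0))
    return (inp * PRICE_PER_M_INPUT + out * PRICE_PER_M_OUTPUT) / Decimal(1_000_000)

def summarize(usages: Iterable[Dict[str, int]]) -> Dict[str, Any]:
    """Aggregate request count, token totals, and cost across many requests."""
    totals: Dict[str, Any] = {
        "requests": 0, "prompt_tokens": 0, "completion_tokens": 0, "cost_usd": Decimal(0),
    }
    for u in usages:
        totals["requests"] += 1
        totals["prompt_tokens"] += u.get("prompt_tokens", 0)
        totals["completion_tokens"] += u.get("completion_tokens", 0)
        totals["cost_usd"] += request_cost(u)
    return totals
```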

Superior to OpenRouter or AIMLAPI

AnyAPI.ai offers better provisioning, support, and visibility across all supported LLMs, including Meta’s models.

Start Using Llama 3.3 70B Instruct via AnyAPI.ai

Llama 3.3 70B Instruct is an aligned, open-weight LLM ready to power real-world applications at scale.

Integrate Llama 3.3 70B Instruct via AnyAPI.ai and start building reliable AI tools today.

Sign up and get your API key, or download the weights and deploy the model on your own infrastructure for full control.