Scalable Real-Time LLM API Access with Qwen3 235B A22B Instruct 2507

Qwen3 235B A22B Instruct 2507 is a large language model (LLM) developed by the Qwen team at Alibaba Cloud. As the name indicates, it is a mixture-of-experts model with 235 billion total parameters, of which about 22 billion are active per token, and it is the instruction-tuned release from July 2025. It is aimed at developers and businesses that need responsive, high-throughput generation in their generative AI systems. Positioned as a mid-tier option, it suits a range of workloads, including production deployments and real-time applications. It can handle large volumes of data without giving up speed or efficiency, which makes it a practical choice for teams that want to add AI-powered features to their products.

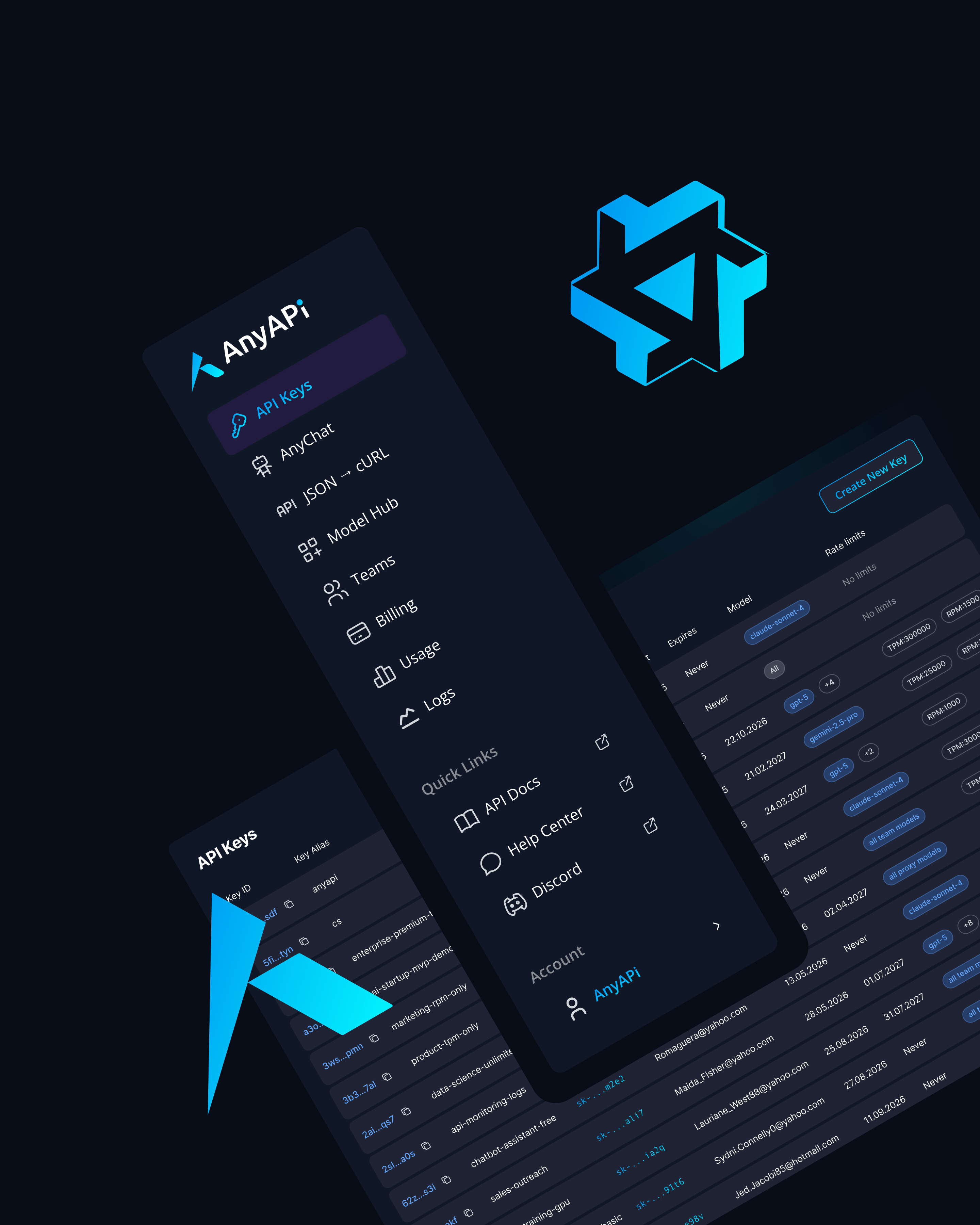

Why Use Qwen3 235B A22B Instruct 2507 via AnyAPI.ai

AnyAPI.ai makes Qwen3 235B A22B Instruct 2507 easier to adopt by exposing it through a single unified API that also gives access to multiple other models. It offers one-click onboarding and no vendor lock-in, which keeps integration simple and flexible. Usage-based billing means you pay only for what you use, whether you are a startup or a large team. AnyAPI.ai also positions its developer tools, production-grade infrastructure, analytics, and support as advantages over OpenRouter and AIMLAPI.

Start Using Qwen3 235B A22B Instruct 2507 via API Today

You can integrate Qwen3 235B A22B Instruct 2507 through AnyAPI.ai and start building innovative applications in minutes. Just sign up, get your API key, and get going.
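As a rough illustration of what that first call might look like, here is a minimal sketch that assumes AnyAPI.ai exposes a chat-completions-style HTTP endpoint with bearer-token authentication. The endpoint URL, the model identifier, and the helper names `build_request` and `send_request` are placeholders invented for this example and are not taken from AnyAPI.ai documentation, so check the official API reference for the real values.

```python
import json
import urllib.request

# Assumptions for illustration only; neither value is confirmed by AnyAPI.ai docs.
API_URL = "https://api.anyapi.example/v1/chat/completions"  # placeholder endpoint
MODEL_ID = "qwen3-235b-a22b-instruct-2507"  # hypothetical model identifier


def build_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions-style POST request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_request(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read())
```

With a real key and the correct endpoint, `send_request(build_request(api_key, "Say hello in one sentence."))` would perform the call. Keeping request construction separate from sending makes the payload easy to inspect before any network traffic occurs.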

Whether you're a startup building AI products, a developer crafting AI-powered tools, or a team that needs to scale up with AI, Qwen3 235B A22B Instruct 2507 is a strong fit for your needs.