Discover the Power of Mistral Embed 2312: The Scalable, Real-Time LLM API for Next-Gen Applications

Unleash the Power of Mistral Embed 2312: The Scalable, Real-Time LLM API for Next-Generation Applications

Mistral Embed 2312 is a mid-tier model from Mistral that helps developers build sophisticated AI into their applications. Its main selling points are scalability, real-time readiness, and flexibility, which make it well suited to production use in generative AI systems and other real-world applications. Within the Mistral family it sits between the entry-level models and the largest high-end models, balancing performance against cost.

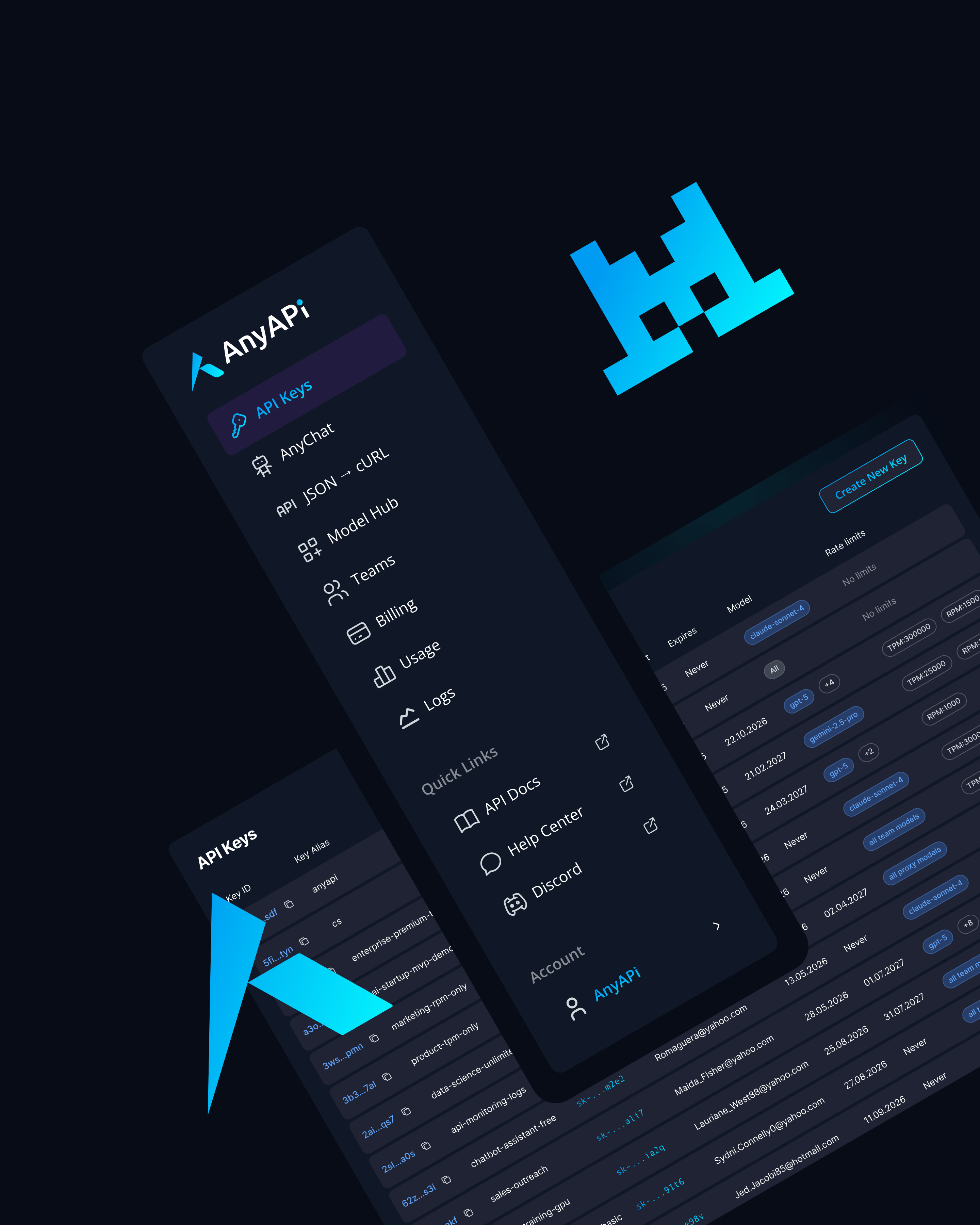

Why Use Mistral Embed 2312 via AnyAPI.ai

AnyAPI.ai makes Mistral Embed 2312 available through a single, unified API. Onboarding takes one click, there is no vendor lock-in, and usage-based billing keeps costs manageable for startups and individual developers. Compared with platforms like OpenRouter and AIMLAPI, AnyAPI.ai sets itself apart with comprehensive developer tools and production-grade infrastructure: provisioning, unified access, support, and analytics.

Start Using Mistral Embed 2312 via API Today

Access Mistral Embed 2312 through AnyAPI.ai and start building next-generation applications today. With its scalability and real-time capabilities, Mistral Embed 2312 is well suited to the needs of startups, developers, and enterprise teams. Create an account on AnyAPI.ai, get your API key, and launch your project in a few minutes.
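
Once you have an API key, a first call might look roughly like the sketch below. It is an illustration under stated assumptions, not AnyAPI.ai's documented interface: the endpoint URL, the model identifier, and the OpenAI-style request and response shapes are all placeholders chosen for the example. Because the model's name points to an embedding model, the sketch requests embeddings rather than generated text. Check the AnyAPI.ai documentation for the actual values before using it.

```python
import json
import urllib.request

# Assumptions (not confirmed by AnyAPI.ai): the endpoint URL and the model
# identifier below are hypothetical placeholders.
API_URL = "https://api.anyapi.ai/v1/embeddings"  # hypothetical endpoint
MODEL_ID = "mistral-embed"  # hypothetical model identifier on this platform


def build_embedding_request(api_key, texts):
    """Build (but do not send) a POST request asking for embeddings of `texts`."""
    payload = {"model": MODEL_ID, "input": list(texts)}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def fetch_embeddings(api_key, texts, timeout=30):
    """Send the request and return one embedding vector per input text.

    Assumes an OpenAI-style response body:
    {"data": [{"embedding": [...]}, ...]}
    """
    request = build_embedding_request(api_key, texts)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return [item["embedding"] for item in body["data"]]
```

In practice you would read the key from an environment variable (for example `os.environ["ANYAPI_KEY"]`, a name chosen here for illustration) rather than hard-coding it, and then call `fetch_embeddings(key, ["first text", "second text"])`.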