Scalable, Real-Time API Access for Dynamic AI Solutions

Codestral 2508 is a large language model (LLM) developed by Mistral. Positioned as a mid-tier, efficient model, it is built for real-time applications and generative AI systems. It targets developers who want to integrate LLM capabilities into their projects and need a practical option for production environments.

Because it balances performance and accessibility, Codestral 2508 suits startups and technology integrators that want to scale AI-based products without the complexities of flagship models.

Key Features of Codestral 2508

Low Latency

Codestral 2508 is designed for low-latency responses, so developers can deliver real-time interactions within their applications. This is crucial for keeping user experiences smooth in fast-paced environments.

Extended Context Size

Codestral 2508 has a large context window that accommodates more tokens per request, so it can support complex interactions and long dialogues without losing earlier context. This helps in applications that demand nuanced understanding and continuity.
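
Even with a large window, long conversations eventually need trimming. The following is a minimal sketch of one way to keep a chat history within a token budget. The `estimate_tokens` heuristic (about four characters per token) and the `trim_history` helper are illustrative assumptions, not part of any Codestral or AnyAPI.ai SDK; a real integration would use the model's own tokenizer and documented limits.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    """Very rough token estimate: about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def trim_history(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep the most recent messages whose estimated size fits in max_tokens.

    A leading system message, if present, is always kept.
    """
    system = [m for m in messages[:1] if m["role"] == "system"]
    rest = messages[len(system):]
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)

    kept: List[Message] = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break  # stop at the first message that no longer fits
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))
```

Dropping the oldest turns first is only one policy; summarizing older turns instead is a common alternative when early context still matters.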

Advanced Alignment and Safety

Prioritizing user safety, Codestral 2508 leverages advanced alignment protocols to deliver accurate and contextually relevant responses while minimizing risks associated with AI misalignment.

Comprehensive Language and Coding Skills

With support for a broad range of languages, Codestral 2508 enables developers to extend their applications to global markets. Its coding capabilities also make it useful for generating efficient, reliable code snippets across various IDEs and development tools.

Deployment Flexibility

Designed for versatility, Codestral 2508 can be integrated via RESTful APIs or Python SDKs, providing flexibility in deployment to suit varied infrastructure needs. This adaptability is pivotal for teams looking to implement solutions quickly and effectively.
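
As a rough illustration of the REST route, the sketch below builds a JSON chat request using only the Python standard library. The endpoint URL, the `codestral-2508` model identifier, and the payload fields are assumptions modeled on common chat-completion APIs; they are placeholders, so consult the provider's API reference for the actual values.

```python
import json
import urllib.request
from typing import Dict, List

# Hypothetical values for illustration only; check the provider's documentation.
API_URL = "https://api.example.com/v1/chat/completions"
MODEL_ID = "codestral-2508"


def build_chat_request(api_key: str, messages: List[Dict[str, str]],
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a chat completion."""
    payload = {"model": MODEL_ID, "messages": messages, "max_tokens": max_tokens}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send the request, pass the returned object to `urllib.request.urlopen(request, timeout=30)` and decode the response body with `json.load`. Keeping construction separate from sending makes the payload easy to inspect and unit-test without network access.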

Use Cases for Codestral 2508

Chatbots

Codestral 2508 excels at powering chatbots for SaaS and customer support environments, offering intelligent, intuitive interaction that elevates user satisfaction and operational efficiency.

Code Generation

For developers utilizing integrated development environments (IDEs) or AI development tools, Codestral 2508 provides robust code generation capabilities, streamlining the coding process with precision and creativity.
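
A tool that embeds code generation typically has to build a prompt and then pull the generated code out of the model's reply. The sketch below assumes the model answers in Markdown with fenced code blocks, which is common but not guaranteed; `build_codegen_messages` and `extract_code_blocks` are hypothetical helper names, not SDK functions.

```python
import re
from typing import Dict, List

FENCE = "`" * 3  # three backticks, written this way so the example stays readable

# Matches an opening fence with an optional language tag, the body, and a closing fence.
_BLOCK = re.compile(FENCE + r"[ \t]*([\w+-]*)[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def build_codegen_messages(task: str, language: str) -> List[Dict[str, str]]:
    """Build chat messages asking for code in a single fenced block."""
    system = f"You write {language} code. Reply with one fenced code block."
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": task},
    ]


def extract_code_blocks(reply: str) -> List[str]:
    """Return the body of every fenced code block found in a model reply."""
    return [match.group(2).rstrip("\n") for match in _BLOCK.finditer(reply)]
```

If `extract_code_blocks` returns an empty list, the caller can fall back to treating the whole reply as code or ask the model to retry.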

Document Summarization

In legal tech and research, Codestral 2508 can summarize lengthy documents efficiently, distilling the essential information to support quick decision-making and knowledge acquisition.
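
For documents longer than a single request can hold, one common pattern is to summarize chunks separately and then summarize the summaries. The sketch below shows that pattern under stated assumptions: `summarize` is any caller-supplied function that sends text to the model and returns a summary, and the character-based chunk limit is a stand-in for a real token limit.

```python
from typing import Callable, List


def split_into_chunks(text: str, max_chars: int) -> List[str]:
    """Split text on blank lines into chunks of at most max_chars.

    A single paragraph longer than max_chars becomes its own oversized chunk.
    """
    chunks: List[str] = []
    current = ""
    for paragraph in text.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


def summarize_document(text: str, summarize: Callable[[str], str],
                       max_chars: int = 4000) -> str:
    """Summarize each chunk, then summarize the combined partial summaries."""
    partial = [summarize(chunk) for chunk in split_into_chunks(text, max_chars)]
    if len(partial) <= 1:
        return partial[0] if partial else ""
    return summarize("\n".join(partial))
```

Passing the model call in as a function keeps the chunking logic independent of any particular API and lets it be tested with a stub.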

Workflow Automation

Internal operations and CRM systems can significantly benefit from Codestral 2508's ability to automate workflows, from generating insightful product reports to managing customer data seamlessly.

Knowledge Base Search

Equip your enterprise with enhanced search capabilities; Codestral 2508 deftly navigates extensive data libraries to expedite onboarding and unlock valuable insights from your knowledge base.
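
In practice, knowledge base search with an LLM usually means retrieving a few relevant documents first and then asking the model to answer from them. The sketch below uses simple word overlap for the retrieval step purely for illustration; production systems more often use embeddings or a search index. The function names and prompt wording are assumptions, not part of any documented API.

```python
import re
from typing import Dict, List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_documents(query: str, documents: Dict[str, str], k: int = 2) -> List[str]:
    """Rank document IDs by shared query words, dropping documents with no overlap."""
    query_words = tokenize(query)
    scores = {doc_id: len(query_words & tokenize(body))
              for doc_id, body in documents.items()}
    ranked = sorted((d for d in scores if scores[d] > 0),
                    key=lambda d: (-scores[d], d))  # ties broken by ID
    return ranked[:k]


def build_grounded_prompt(query: str, documents: Dict[str, str], k: int = 2) -> str:
    """Build a prompt that asks the model to answer from the retrieved documents."""
    context = "\n\n".join(f"[{doc_id}]\n{documents[doc_id]}"
                          for doc_id in top_documents(query, documents, k))
    return f"Answer using only the context below.\n\n{context}\n\nQuestion: {query}"
```

Labeling each passage with its document ID makes it easier to ask the model to cite which source an answer came from.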

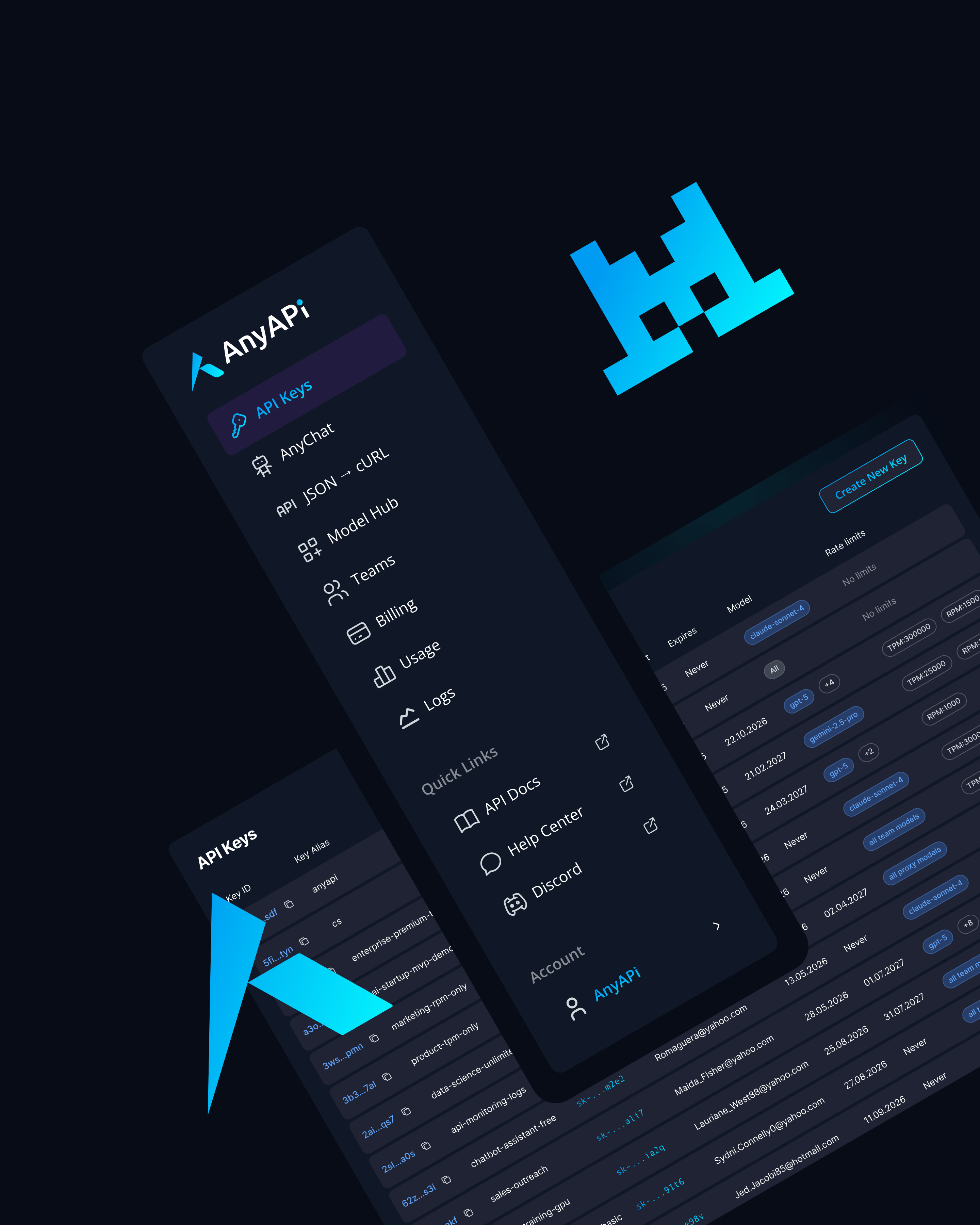

Why Use Codestral 2508 via AnyAPI.ai

AnyAPI.ai enhances the utility of Codestral 2508 with its unified API system, offering a singular point of access to multiple models.

This integration streamlines the onboarding process with a single click and does away with vendor lock-in worries. Furthermore, its usage-based billing model provides economical scaling as your needs grow. AnyAPI.ai also offers an array of developer tools and infrastructure support, distinguishing it from alternatives like OpenRouter and AIMLAPI.

Start Using Codestral 2508 via AnyAPI.ai Today

Codestral 2508 represents an optimal balance between performance and accessibility for startups and developers. Its capabilities can be seamlessly integrated into your projects today via AnyAPI.ai.

Sign up to get your API key and launch advanced AI functionalities in a matter of minutes.