Redefining Real-Time LLM Capabilities through Access and Integration

Meet Kimi K2 0711, a language model from MoonshotAI aimed at real-time applications and generative AI systems. As a mid-tier offering in the MoonshotAI lineup, Kimi K2 0711 balances performance, scalability, and cost-efficiency.

Purpose-built for production environments, it lets developers integrate a large language model into their applications and serve real-time processing workloads.

Why Use Kimi K2 0711 via AnyAPI.ai

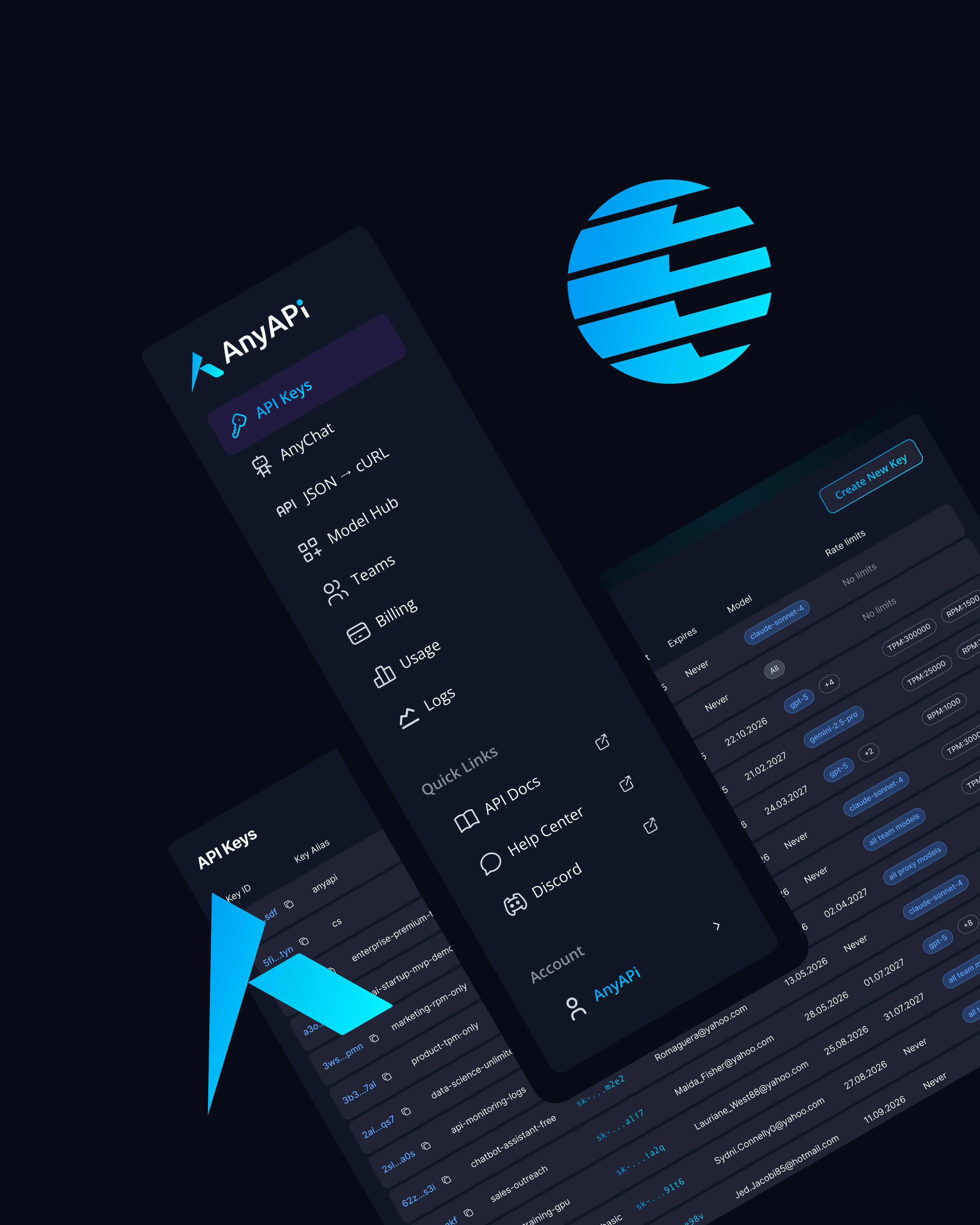

Unified Access and Tools

AnyAPI.ai provides unified API access to MoonshotAI: Kimi K2 0711 alongside numerous other models, making integration and model-switching straightforward as your needs grow, all without vendor lock-in.

Streamlined Onboarding

Experience one-click onboarding and usage-based billing, so you only pay for what you actually use, while tapping into advanced developer tools and production-grade infrastructure.

Insights and Support

Unlike platforms such as OpenRouter or AIMLAPI, AnyAPI.ai brings you enhanced provisioning, unified access, and comprehensive analytics specifically designed for your AI integration journey.

Start Using Kimi K2 0711 via API Today

Power up your startup, development team, or enterprise system with Kimi K2 0711, and use AnyAPI.ai to integrate it quickly.

Sign up, grab your API key, and launch in minutes. Start building with Kimi K2 0711 today and revolutionize the way you innovate.
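Once you have a key, a first request might look roughly like the sketch below. It only builds the HTTP request and does not send it. The base URL, the `/chat/completions` path, the chat-style JSON body, and the model identifier are all placeholder assumptions rather than values confirmed by AnyAPI.ai, so check the official documentation before using them. The helper name `build_chat_request` is likewise hypothetical.

```python
import json
import urllib.request

# Assumptions (not from AnyAPI.ai): the endpoint and model identifier below
# are placeholders. Replace them with the values from the official docs.
BASE_URL = "https://api.example.com/v1"   # hypothetical base URL
MODEL_ID = "moonshotai/kimi-k2-0711"      # hypothetical model identifier


def build_chat_request(api_key: str, prompt: str,
                       model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a chat-style POST request.

    The body shape (a "model" plus a list of "messages") is an assumed,
    commonly used convention, not a documented AnyAPI.ai schema.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("YOUR_API_KEY", "Summarize this ticket in one line.")
    print(req.get_method(), req.full_url)
    # With real values filled in, sending would be: urllib.request.urlopen(req)
```

Because the model is a plain parameter, switching to another model behind the same unified API would only mean passing a different `model` string, assuming the request format stays the same across models.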