High-Accuracy LLM for Deep Reasoning and Enterprise-Scale API Access

Claude 4 Opus is the most advanced model in Anthropic’s Claude 4 series, delivering state-of-the-art reasoning, long-context comprehension, and alignment for enterprise and mission-critical use cases. Built on Anthropic’s Constitutional AI framework, Opus is optimized for accuracy, safety, and high performance across complex workflows.

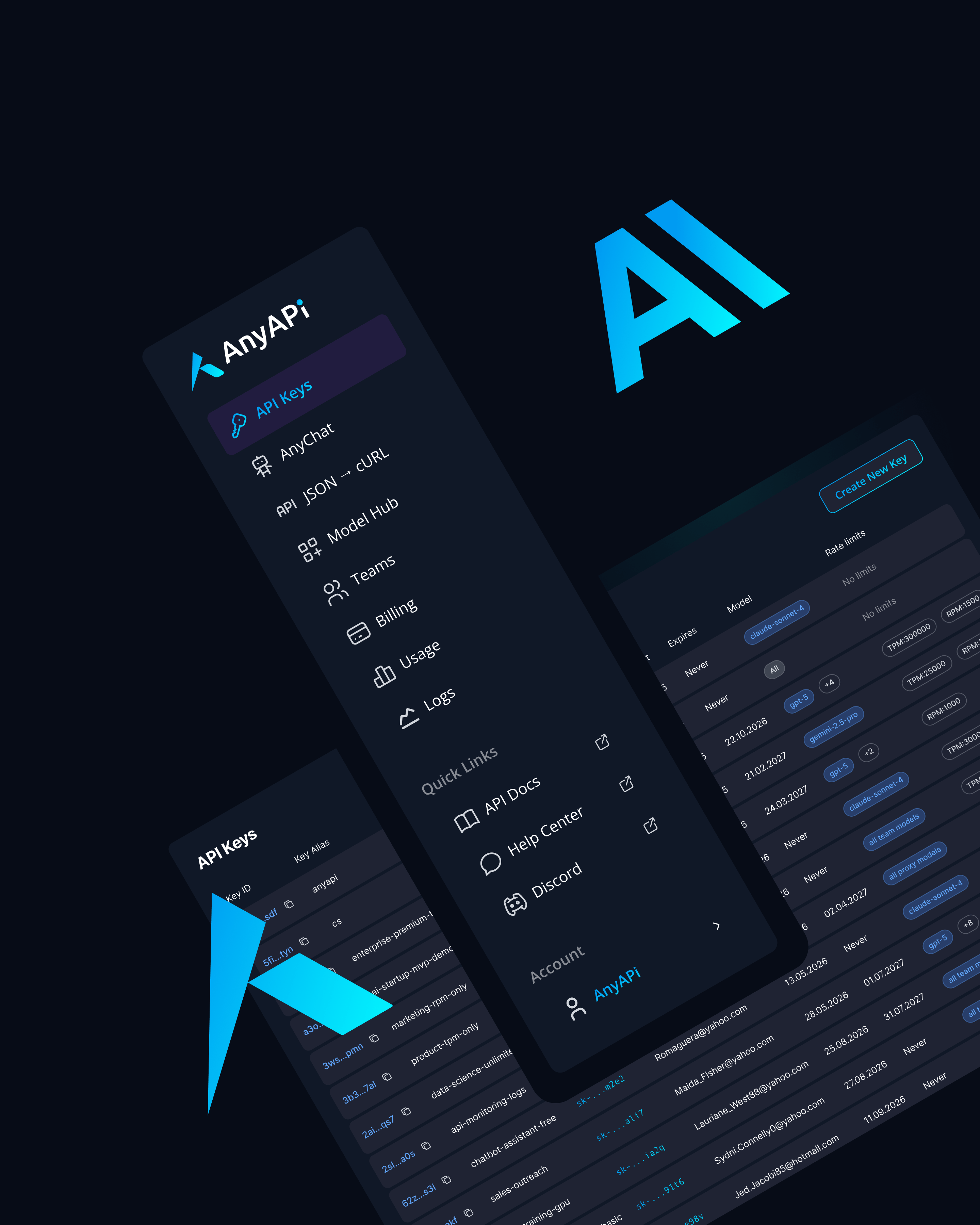

As the flagship Claude model, Opus is ideal for regulated industries, AI copilots, research tools, and sophisticated RAG systems. It is accessible via API and available through AnyAPI.ai for frictionless integration.

Key Features of Claude 4 Opus

Up to 1 Million Tokens of Context

Claude 4 Opus supports a 200k-token context window by default, with expanded access of up to 1 million tokens where enabled. This suits large-document understanding, whole-codebase ingestion, and cross-document reasoning.
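To make the window sizes concrete, here is a minimal sketch of a pre-flight check that estimates whether a document is likely to fit. It assumes roughly 4 characters per token, which is a common rule of thumb for English text and not the model's actual tokenizer. The function names and the output reserve are illustrative only.

```python
# Rough context-budget check. The 4-characters-per-token ratio is an
# assumed heuristic for English text, not the model's real tokenizer.
DEFAULT_CONTEXT_TOKENS = 200_000      # default window quoted in the text
EXPANDED_CONTEXT_TOKENS = 1_000_000   # expanded window quoted in the text
ASSUMED_CHARS_PER_TOKEN = 4           # assumption; varies by language and content


def estimate_tokens(text: str) -> int:
    """Estimate token count from character length (ceiling division)."""
    return -(-len(text) // ASSUMED_CHARS_PER_TOKEN)


def fits_in_context(text: str, window: int = DEFAULT_CONTEXT_TOKENS,
                    reserved_for_output: int = 4_000) -> bool:
    """Return True if the estimated prompt size leaves room for the reply."""
    return estimate_tokens(text) + reserved_for_output <= window


if __name__ == "__main__":
    report = "x" * 1_000_000  # stand-in for a document of about one million characters
    print(estimate_tokens(report))
    print(fits_in_context(report))
    print(fits_in_context(report, window=EXPANDED_CONTEXT_TOKENS))
```

An estimate like this is only useful for early routing decisions. For billing or hard limits, rely on the token counts reported by the API you actually call.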

Best-in-Class Reasoning and Math

Opus ranks among the highest on academic and real-world reasoning benchmarks. It can follow logical chains, handle nested tasks, and explain technical content clearly.

Superior Instruction Following

Trained to understand structured prompts, workflows, and formats, Opus is reliable in business logic, legal language, and technical specification generation.

High Safety and Alignment

Built with Anthropic’s Constitutional AI, Opus is designed to reduce hallucinations, respect boundaries, and produce grounded outputs suitable for enterprise use.

Multilingual Mastery

Supports 25+ languages with high fluency, enabling global deployments and multilingual AI applications without loss of precision.

Use Cases for Claude 4 Opus

AI Copilots and Knowledge Workers

Deploy Claude 4 Opus for internal agents that assist with research, codebase understanding, document comparison, or data analysis.

Enterprise Document Processing

Ingest and summarize entire financial reports, contracts, compliance documents, and knowledge bases using Opus’s long-context comprehension.

Legal and Regulatory Generation

Draft structured legal text, audit reports, policy frameworks, and other high-trust documents with Opus’s strong formatting and alignment.

Scientific and Technical Research

Use Opus to explore, summarize, and reason over academic papers, patents, or multi-source scientific corpora.

Multilingual Enterprise Interfaces

Power global AI systems with localized content generation, multilingual chat interfaces, and translation-aware automation.

Why Use Claude 4 Opus via AnyAPI.ai

Unified LLM API Platform

Access Claude 4 Opus, GPT, Gemini, and Mistral models through a single API and SDK stack, with no vendor-specific integrations to manage.
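As a rough illustration of what a single API across models can look like, the sketch below builds the same HTTP request for different models by changing only the model field. The endpoint URL, model identifiers, payload shape, and environment variable name are all placeholders invented for this example, not AnyAPI.ai's documented interface. Check the platform's API reference for the real values.

```python
import json
import os
import urllib.request

# Everything below is a placeholder: the URL, model identifiers, and payload
# shape are assumptions for illustration, not a documented API.
HYPOTHETICAL_ENDPOINT = "https://api.example.com/v1/chat/completions"
HYPOTHETICAL_MODELS = ["claude-4-opus", "other-provider-model"]


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request; only `model` changes per provider."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        HYPOTHETICAL_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Read the key from the environment so it never lands in source control.
    key = os.environ.get("EXAMPLE_API_KEY", "test-key")
    for model in HYPOTHETICAL_MODELS:
        req = build_request(model, "Summarize this contract.", key)
        print(req.get_method(), req.full_url, json.loads(req.data)["model"])
```

The request is only constructed here, never sent, so the sketch runs without network access or a real key.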

No Anthropic Setup Required

Start using Claude 4 Opus without setting up an Anthropic account or worrying about access approval.

Usage-Based Access

Pay only for what you use, whether for R&D experiments or scaled enterprise deployments. No quotas or overage surprises.

Production-Grade Infrastructure

AnyAPI.ai provides the uptime, throughput, analytics, and logging that enterprise AI applications require.

Superior to OpenRouter and AIMLAPI

Get faster provisioning, smarter routing, and full observability across all models—not just Claude.

Technical Specifications

- Context Window: 200,000–1,000,000 tokens

- Latency: Moderate (optimized for high-accuracy outputs)

- Supported Languages: 25+

- Release Year: 2025 (Q2)

- Integrations: REST API, Python SDK, JavaScript SDK, Postman

Integrate Claude 4 Opus via AnyAPI.ai for Maximum LLM Performance

Claude 4 Opus is the most powerful Claude model for developers and enterprises requiring depth, precision, and safety in large-scale AI tools.

Access Claude 4 Opus via AnyAPI.ai and deploy intelligent systems at scale.

Sign up, get your API key, and build with confidence.