Balanced Open-Weight LLM for Scalable, Real-Time AI Applications

Mistral Medium 3.1 is a mid-sized, open-weight large language model from Mistral AI that balances performance, cost, and speed. It sits between the lightweight Mistral Tiny and the more capable Mistral Large. That makes it a good fit for startups, developers, and companies that want reliable LLM features in production without over-provisioning resources.

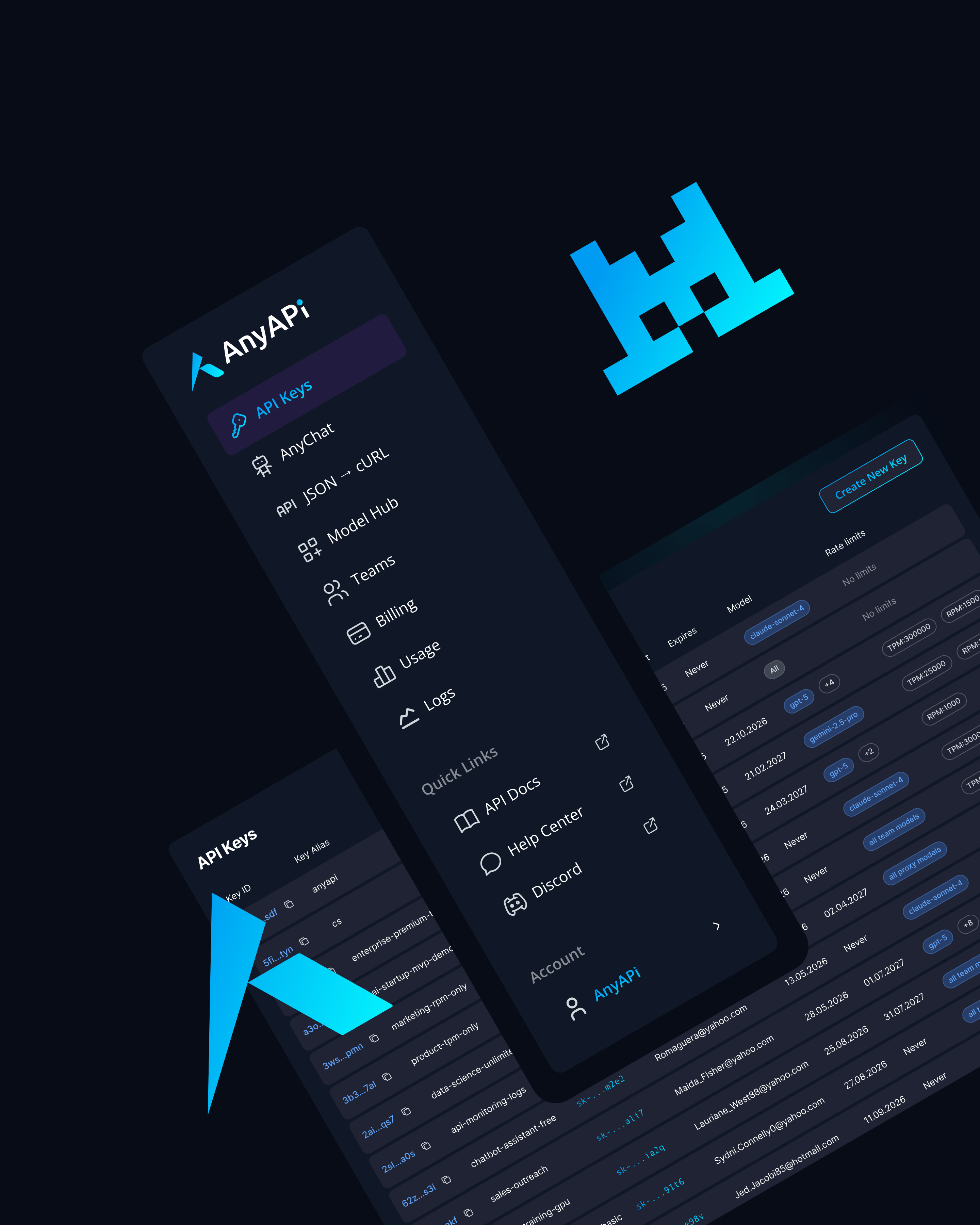

Mistral Medium 3.1 is now available through AnyAPI.ai, where developers can integrate it through a single API to experiment, deploy, and scale applications across a range of fields.

Key Features of Mistral Medium 3.1

Efficient Performance

Provides a balance between speed and accuracy, enabling smooth deployment in real-time applications.

Strong Reasoning and Alignment

Trained with advanced alignment techniques to ensure reliable outputs in enterprise and SaaS environments.

Coding and Automation Skills

Performs well in code completion, debugging, and scripting for dev tools and automation systems.

Open-Weight Flexibility

Available under a permissive license for both API access and self-hosted deployments.

Use Cases for Mistral Medium 3.1

Customer Support Chatbots

Deploy efficient, reliable chat assistants that balance cost and performance.

Code Assistance and IDE Integration

Embed into dev environments for lightweight but capable coding copilots.

Document Summarization and Processing

Summarize legal, financial, and research documents at scale.
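Long documents often exceed what fits in one request, so a common approach is to split the text into overlapping chunks, summarize each chunk, and then summarize the partial summaries. The following is a minimal sketch of that pattern, not a description of how AnyAPI.ai or Mistral handles it. The function names, the word-based chunk sizes, and the `summarize` callable (which would wrap a call to the model) are assumptions made for illustration.

```python
from typing import Callable


def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into chunks of at most max_words words, sharing `overlap` words."""
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def summarize_document(
    text: str,
    summarize: Callable[[str], str],  # hypothetical wrapper around a model call
    max_words: int = 200,
    overlap: int = 20,
) -> str:
    """Summarize each chunk, then summarize the combined partial summaries."""
    partials = [summarize(chunk) for chunk in chunk_words(text, max_words, overlap)]
    if not partials:
        return ""
    if len(partials) == 1:
        return partials[0]
    return summarize("\n".join(partials))
```

Splitting on words keeps the sketch dependency-free. A production pipeline would more likely count tokens with the model's tokenizer and split on paragraph or section boundaries.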

Workflow Automation

Automate reporting, CRM updates, and operational tasks with structured outputs.
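When model output feeds an automated workflow, it helps to validate the structure before acting on it. The sketch below assumes the model was asked to reply with a JSON object describing a CRM update. The field names and allowed statuses are hypothetical and would be replaced by whatever schema your own system uses.

```python
import json

# Hypothetical schema for a CRM update; these names are illustrative only.
REQUIRED_FIELDS = {"contact_id": str, "status": str, "notes": str}
ALLOWED_STATUSES = {"new", "contacted", "qualified", "closed"}


def parse_crm_update(model_output: str) -> dict:
    """Parse model output as JSON and check it against the expected fields."""
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"missing or mistyped field: {field}")
    if data["status"] not in ALLOWED_STATUSES:
        raise ValueError(f"unknown status: {data['status']}")
    return data
```

Rejecting malformed output with a `ValueError` lets the caller retry the request or route the item to a human instead of writing bad data to the CRM.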

Knowledge Base Search and RAG

Combine with vector databases to enable contextual enterprise knowledge retrieval.
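The retrieval step can be sketched as follows: rank stored passages by similarity to the question, then place the best matches in the prompt sent to the model. This toy version uses word counts and an in-memory list in place of a real embedding model and vector database, both of which are assumptions made to keep the example self-contained. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(documents, key=lambda doc: cosine(query_vector, embed(doc)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the model in the retrieved passages."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

In practice, `embed` and `retrieve` would be replaced by calls to an embedding model and a vector store, while the prompt assembly step stays much the same.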

Why Use Mistral Medium 3.1 via AnyAPI.ai

Unified Access

Connect to Mistral Medium alongside Claude, GPT, Gemini, and DeepSeek models with one API.
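With a single API, switching models usually comes down to changing one string in the request. The sketch below illustrates that idea under several assumptions: the endpoint URL is a placeholder, the model identifier is a guess, and the request body follows the widely used chat-completions shape. None of these are confirmed by this article, so consult the AnyAPI.ai documentation for the real values.

```python
import json
import urllib.request

# Placeholder values: the real base URL and model identifiers are not given
# in this article and must be taken from the AnyAPI.ai documentation.
BASE_URL = "https://api.example.invalid/v1/chat/completions"
MEDIUM_MODEL = "mistral-medium-3.1"


def build_chat_request(model: str, user_message: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat request for the given model."""
    payload = {
        "model": model,  # swapping models only changes this field
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        BASE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Keeping request construction separate from sending makes the payload easy to inspect and unit test without network access.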

Usage-Based Billing

Transparent, pay-as-you-go pricing with no long-term vendor lock-in.

Developer Experience

A REST API plus Python and JavaScript SDKs, with monitoring and analytics built in.

Production Reliability

High uptime, scalable infrastructure, and better provisioning than OpenRouter or Hugging Face endpoints.

Flexibility for Open-Source + Hosted Models

Choose between managed API access and self-hosted deployments.

Start Building with Mistral Medium 3.1 Today

Mistral Medium 3.1 balances speed, accuracy, and cost efficiency, which makes it a practical choice for production applications. It is available through AnyAPI.ai today. Get your API key and start building scalable AI solutions within minutes.