Open-Weight, High-Performance LLM API for Flexible AI Deployments

Mistral Large is a powerful open-weight large language model developed by Mistral AI, offering competitive reasoning and generation performance while maintaining deployment flexibility and transparency. As the flagship model in Mistral’s suite, it is designed for developers, AI engineers, and infrastructure teams building custom LLM solutions or seeking cost-efficient, self-hosted alternatives to proprietary models.

Available via API and for local deployment, Mistral Large is suited for regulated industries, edge AI systems, enterprise RAG pipelines, and applications that demand full control over the model stack.

Key Features of Mistral Large

Open-Weight Model Access

Mistral Large can be self-hosted or used via managed APIs, giving teams control over deployment, fine-tuning, and customization, subject to the terms of Mistral's model license.
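One practical way to keep that flexibility is to make the endpoint a configuration choice rather than a code change. The sketch below assumes both deployments expose an OpenAI-style `/v1` chat endpoint (self-hosted servers such as vLLM's OpenAI-compatible server can do this, and vLLM listens on port 8000 by default). The managed URL, the `LLM_DEPLOYMENT`, `ANYAPI_KEY`, and `LOCAL_API_KEY` variable names, and `resolve_endpoint` itself are hypothetical.

```python
import os
from typing import Mapping, Optional, Tuple

# Hypothetical endpoints: replace with the URLs from your provider or server config.
MANAGED_URL = "https://api.example-gateway.invalid/v1"  # placeholder, not a real AnyAPI.ai URL
LOCAL_URL = "http://localhost:8000/v1"  # e.g. a local OpenAI-compatible server; adjust host/port


def resolve_endpoint(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Pick (base_url, api_key) from a hypothetical LLM_DEPLOYMENT switch."""
    env = os.environ if env is None else env
    mode = env.get("LLM_DEPLOYMENT", "managed")
    if mode == "local":
        # Local servers are often run without auth, so an empty key is allowed.
        return LOCAL_URL, env.get("LOCAL_API_KEY", "")
    if mode == "managed":
        # Raises KeyError if the hypothetical ANYAPI_KEY variable is unset.
        return MANAGED_URL, env["ANYAPI_KEY"]
    raise ValueError(f"unknown LLM_DEPLOYMENT: {mode!r}")
```

Because the rest of the application only sees a base URL and a key, moving a workload between the managed API and your own hardware becomes an environment change.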

Strong Reasoning and Code Capabilities

Trained with a focus on performance and usability, Mistral Large handles logic, math, and structured tasks efficiently, while supporting robust code generation and explanation.

128k Token Context Window

With a 128,000-token context window, the model can handle multi-document queries, long sessions, and data-rich prompts without truncation, provided the combined prompt and response fit within that limit.
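Before sending a large multi-document prompt, it helps to check that the input plus the space reserved for the answer stays under the window. This is a rough sketch: the 4-characters-per-token ratio is a coarse heuristic assumed for illustration, not Mistral's tokenizer, and `fits_in_context` is a hypothetical helper. Use the model's real tokenizer when exact counts matter.

```python
CONTEXT_WINDOW = 128_000  # tokens, per the figure quoted above
CHARS_PER_TOKEN = 4       # ASSUMPTION: coarse heuristic; varies by language and content


def estimate_tokens(text: str) -> int:
    """Estimate tokens by ceiling-dividing the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], question: str, max_output_tokens: int = 1_000) -> bool:
    """True if all documents, the question, and the reserved output fit in the window."""
    prompt_tokens = sum(estimate_tokens(d) for d in documents) + estimate_tokens(question)
    return prompt_tokens + max_output_tokens <= CONTEXT_WINDOW


docs = ["a" * 200_000, "b" * 200_000]  # roughly 100k estimated tokens in total
print(fits_in_context(docs, "Summarize both contracts."))  # True
```

Reserving output tokens up front matters because the response shares the same window as the prompt.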

High-Speed Performance

Engineered for efficiency, Mistral Large can deliver low-latency responses on adequately provisioned infrastructure, making it suitable for real-time chat, embedded AI tools, and dynamic workflows.

Multilingual Understanding

Supports 20+ languages, enabling global integration into multilingual applications, search interfaces, and content pipelines.

Use Cases for Mistral Large

RAG and Knowledge Retrieval Systems

Pair Mistral Large with vector databases for retrieval-augmented generation (RAG), knowledge agents, and internal Q&A tools.
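The retrieval half of a RAG pipeline boils down to ranking stored passages by similarity to the query and placing the best matches in the prompt. The sketch below stands in for a vector database with an in-memory list and cosine similarity; the 3-dimensional vectors are made up, and in practice they would come from an embedding model that is not shown here. `top_k` and `build_prompt` are hypothetical helpers.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], index: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the k passages whose embeddings are most similar to the query."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, passages: list[str]) -> str:
    """Number the retrieved passages so the model can cite them."""
    context = "\n\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the context below. Cite passage numbers.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Toy index: (passage, embedding) pairs with made-up vectors.
index = [
    ("Refunds are processed within 14 days.", [0.9, 0.1, 0.0]),
    ("Our office is closed on public holidays.", [0.0, 0.2, 0.9]),
    ("Refund requests need an order number.", [0.8, 0.3, 0.1]),
]
passages = top_k([1.0, 0.2, 0.0], index, k=2)
prompt = build_prompt("How do refunds work?", passages)
```

The resulting `prompt` is what would be sent to Mistral Large; a production system would swap the list for a vector database query.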

Developer-Facing Code Assistants

Generate, review, and explain code across common languages. Great for self-hosted IDE integrations or secure enterprise environments.

Legal and Business Document Processing

Summarize, translate, and analyze documents such as contracts, reports, and policies; when the model is self-hosted, this can be done without relying on external APIs.

Enterprise-Grade SaaS Features

Deploy AI features inside SaaS tools where vendor lock-in and external data sharing are concerns.

Customizable Internal LLM Apps

Fine-tune or prompt-engineer Mistral Large to assist support teams, operational workflows, or domain-specific chat interfaces.
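Prompt engineering is often the quickest route to a domain-specific assistant: a system prompt sets the scope and tone, and a few example exchanges show the expected answer style. This is a minimal sketch using the common role/content chat message format; the company name, policy text, and example exchange are hypothetical.

```python
# Hypothetical system prompt for a billing-support assistant.
SYSTEM_PROMPT = (
    "You are a support assistant for ExampleCo billing. "
    "Answer briefly, cite the relevant policy, and escalate if unsure."
)

# Hypothetical few-shot examples demonstrating the desired answer format.
FEW_SHOT = [
    ("Can I change my invoice address?", "Yes. Update it under Billing > Profile (Policy B-2)."),
]


def build_messages(user_question: str) -> list[dict]:
    """System prompt first, then few-shot pairs, then the live question."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for question, answer in FEW_SHOT:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    messages.append({"role": "user", "content": user_question})
    return messages
```

If prompting alone does not reach the required accuracy, the same example exchanges can later seed a fine-tuning dataset.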

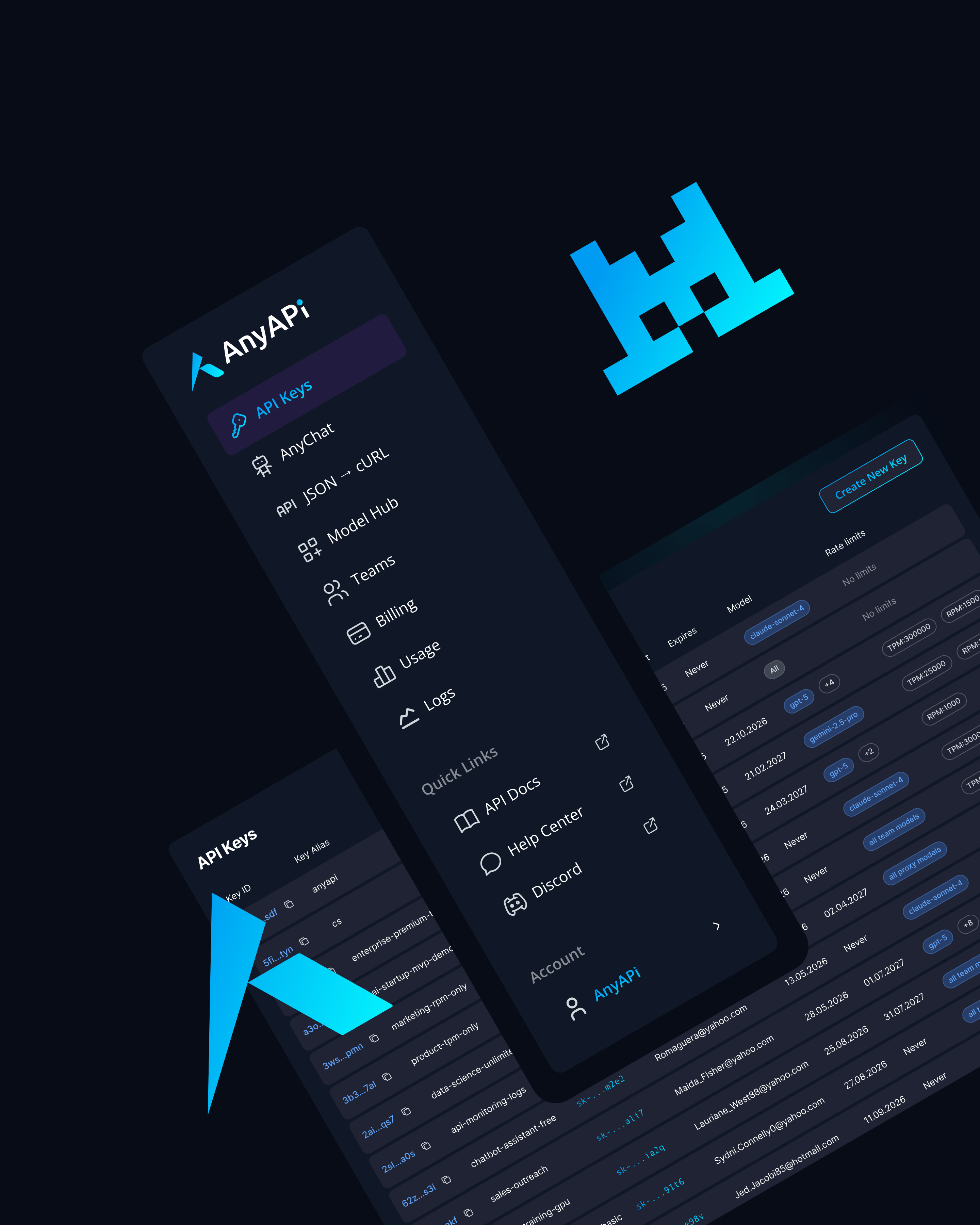

Why Use Mistral Large via AnyAPI.ai

API Access to Open-Weight Models

Enjoy the best of both worlds: open-weight model performance with managed infrastructure and instant deployment.

Unified Platform with Multi-Model Support

Query Mistral Large alongside Claude, GPT, and Gemini models using a single SDK or REST endpoint.
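With a unified, OpenAI-style chat endpoint, switching models usually means changing only the `model` field of the request body. The sketch below assumes that request shape; the base URL, the `/chat/completions` path, the bearer-token header, and every model ID are hypothetical placeholders, so check AnyAPI.ai's documentation for the real values. `send` is shown for completeness but is not called here.

```python
import json
import urllib.request

BASE_URL = "https://api.example-gateway.invalid/v1"  # hypothetical placeholder
MODEL_IDS = {  # hypothetical model identifiers
    "mistral": "mistral-large",
    "claude": "claude-model-id",
    "gpt": "gpt-model-id",
}


def build_chat_request(model: str, prompt: str, max_tokens: int = 512) -> dict:
    """Build one request body; only the model field differs between models."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def send(body: dict, api_key: str) -> dict:
    """POST the body as JSON and decode the JSON response (assumed endpoint)."""
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)


# Same prompt, three models: only the "model" value changes.
bodies = [build_chat_request(m, "Explain RAG in one sentence.") for m in MODEL_IDS.values()]
```

Keeping request construction separate from transport makes it easy to compare models on identical inputs.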

No Vendor Lock-In

Maintain data ownership and keep the option to self-host the same open-weight model, reducing dependence on any single cloud provider while still benefiting from usage-based API access.

Flexible Pricing and High Throughput

Use Mistral Large cost-effectively in batch jobs, dev tools, and real-time inference without worrying about platform throttling.
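Even where platform limits are generous, a client-side cap on concurrent requests keeps batch jobs predictable and easy to tune. This sketch uses `asyncio.Semaphore` to bound in-flight calls; `call_model` is a hypothetical stand-in that only simulates latency so the example runs offline, and the limit of 4 is an arbitrary assumption.

```python
import asyncio

MAX_IN_FLIGHT = 4  # hypothetical cap; tune to your plan and latency targets

_in_flight = 0
peak_in_flight = 0  # records the highest observed concurrency


async def call_model(prompt: str) -> str:
    """Hypothetical stand-in for a real async API call."""
    global _in_flight, peak_in_flight
    _in_flight += 1
    peak_in_flight = max(peak_in_flight, _in_flight)
    await asyncio.sleep(0.01)  # simulate network latency
    _in_flight -= 1
    return f"summary of: {prompt}"


async def run_batch(prompts: list[str], limit: int = MAX_IN_FLIGHT) -> list[str]:
    sem = asyncio.Semaphore(limit)

    async def worker(p: str) -> str:
        async with sem:  # at most `limit` calls run at once
            return await call_model(p)

    # gather returns results in the same order as the inputs
    return await asyncio.gather(*(worker(p) for p in prompts))


results = asyncio.run(run_batch([f"doc {i}" for i in range(10)]))
```

Replacing `call_model` with a real HTTP client call leaves the batching logic unchanged.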

Better Than OpenRouter or AIMLAPI

AnyAPI.ai ensures higher availability, unified logs, scalable provisioning, and model orchestration across teams.

Use Mistral Large via AnyAPI.ai or Self-Hosting

Mistral Large combines performance, flexibility, and cost efficiency, making it a strong fit for teams building advanced AI tools with full control over the stack.

Access Mistral Large via AnyAPI.ai and deploy open-weight LLMs at scale today.

Get started instantly with your API key or run it locally for maximum flexibility.