Lightweight, Open-Weight LLM for Fast, Scalable API Applications

Mistral Medium is a lightweight, open-weight large language model developed by Mistral AI, designed for fast, cost-efficient performance across a range of practical AI tasks. With a 32k-token context window and rapid inference, it suits developers and teams building responsive, low-latency AI features into applications where affordability and flexibility matter.

As a middle-tier model in Mistral’s open-weight lineup, Mistral Medium balances reasoning strength with speed and is ideal for on-device inference, serverless AI, and scalable API integrations.

Key Features of Mistral Medium

Open-Weight Access

Mistral Medium is fully open-weight and can be self-hosted or accessed through managed APIs, giving developers full control over deployment and customization.

Fast Inference and Low Latency

The model is optimized for real-time responsiveness, returning results in under 300 ms for short prompts and sustaining throughput under heavy load.
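Latency depends on prompt length, region, and network path, so it is worth measuring against your own workload. The harness below is a minimal sketch: `call` is a hypothetical stand-in for any function that sends one short prompt to the model, and the percentiles come from Python's standard `statistics.quantiles`.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency_ms(call: Callable[[], object], runs: int = 20) -> Dict[str, float]:
    """Time `call` repeatedly and report p50, p95, and max latency in milliseconds."""
    if runs < 2:
        raise ValueError("need at least two runs to compute percentiles")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()  # hypothetical: a function that sends one short prompt to the model
        samples.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 94 is p95.
    # method="inclusive" keeps every cut point within the observed range.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p50_ms": cuts[49], "p95_ms": cuts[94], "max_ms": max(samples)}


# Usage with a stand-in call; replace the lambda with a real request to the model.
print(measure_latency_ms(lambda: time.sleep(0.01), runs=5))
```

Looking at p95 alongside the median shows whether the "under load" behavior holds for your traffic, not just the typical request.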

32k Token Context Window

Mistral Medium supports a context window of up to 32,000 tokens, which makes it effective for chat memory, document-level reasoning, and multi-input prompts.
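For chat memory, a common pattern is to keep the system message and as many recent turns as fit in the window. The sketch below assumes a rough heuristic of about 4 characters per token (real counts depend on Mistral's tokenizer) and a hypothetical 1,000-token reserve for the model's reply.

```python
from typing import Dict, List

CONTEXT_WINDOW = 32_000      # context size stated for Mistral Medium
RESERVED_FOR_REPLY = 1_000   # hypothetical budget kept free for the model's answer


def estimate_tokens(text: str) -> int:
    """Rough estimate of ~4 characters per token (an assumption, not Mistral's tokenizer)."""
    return max(1, len(text) // 4)


def trim_history(messages: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep the system message(s) plus the most recent turns that fit the budget."""
    budget = CONTEXT_WINDOW - RESERVED_FOR_REPLY
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Dict[str, str]] = []
    # Walk backwards so the newest turns are kept first; stop at the first overflow
    for m in reversed(rest):
        cost = estimate_tokens(m["content"])
        if used + cost > budget:
            break
        kept.append(m)
        used += cost
    # Restore chronological order after the backward walk
    return system + list(reversed(kept))
```

Dropping the oldest turns first keeps the conversation coherent; summarizing dropped turns into a short note is an alternative when older context matters.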

Strong Performance in Text and Code

The model delivers solid reasoning, summarization, and code completion across multiple programming languages, with good efficiency.

Multilingual Capabilities

Supports 20+ languages, enabling international application development and localized content generation.

Use Cases for Mistral Medium

Customer-Facing AI Chatbots

Deploy fast, low-cost chatbots for e-commerce, onboarding, and support flows that need quick responses aligned with your guidelines.

Embedded and Serverless AI Tools

Use Mistral Medium in edge AI scenarios or serverless architectures where resource usage and latency are critical.

SaaS Product Features

Integrate into content tools, writing assistants, or analytics dashboards with reliable generation at low overhead.

Document Summarization and Note-Taking

Process and condense product specs, internal docs, or meeting transcripts using Mistral’s efficient long-context window.
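Documents longer than the context window can be split into chunks, summarized one chunk at a time, and then combined in a final summarization pass (a common map-reduce approach, not a feature specific to Mistral Medium). The chunker below is a minimal sketch that splits on blank lines; the 4-characters-per-token ratio and the 24,000-token chunk budget are assumptions chosen to leave headroom inside a 32k window.

```python
from typing import List

CHARS_PER_TOKEN = 4      # rough heuristic, not Mistral's actual tokenizer
CHUNK_TOKENS = 24_000    # hypothetical per-request budget below the 32k window


def chunk_document(text: str, chunk_tokens: int = CHUNK_TOKENS) -> List[str]:
    """Split text on blank lines into chunks that each stay under the token budget."""
    max_chars = chunk_tokens * CHARS_PER_TOKEN
    chunks: List[str] = []
    current = ""
    for para in text.split("\n\n"):
        # Hard-split any single paragraph that is larger than one chunk
        while len(para) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(para[:max_chars])
            para = para[max_chars:]
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate          # paragraph fits: extend the current chunk
        else:
            chunks.append(current)       # flush the full chunk and start a new one
            current = para
    if current:
        chunks.append(current)
    return chunks
```

Each chunk would then go to the model with a "summarize this section" prompt, and the partial summaries would be joined and summarized once more.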

Developer Utilities and Coding Help

Offer in-editor code suggestions, explanations, and logic completion in environments where fast response is essential.

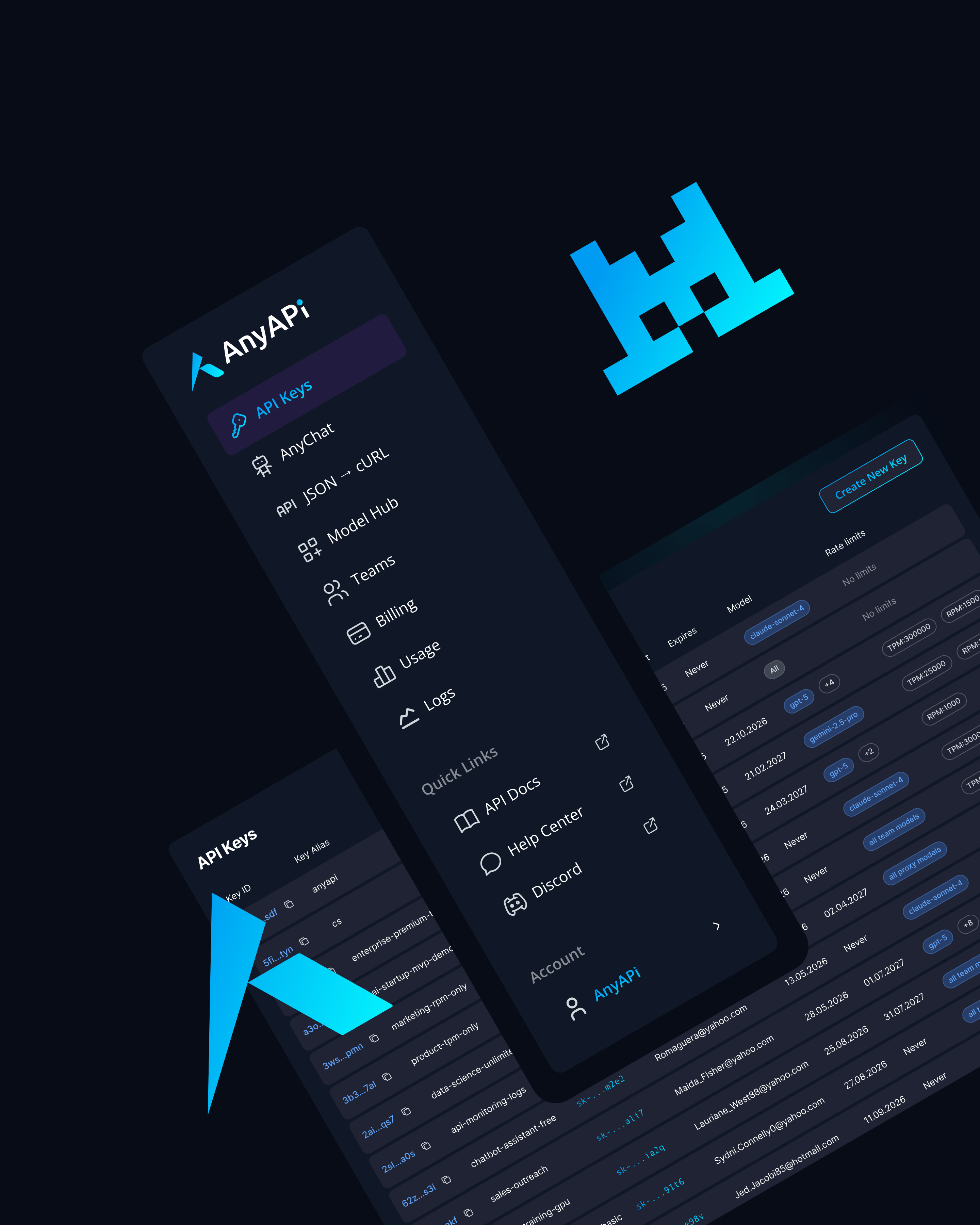

Why Use Mistral Medium via AnyAPI.ai

Unified LLM Access

Use Mistral Medium alongside GPT, Claude, and Gemini through a single API, which makes it easy to test, compare, and switch between models.
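With a single API, switching models ideally means changing one string. The sketch below builds (but does not send) requests with Python's standard `urllib.request`. It assumes an OpenAI-style chat-completions request body; the endpoint URL, the `ANYAPI_API_KEY` environment variable, and the model identifiers are all hypothetical placeholders, so check AnyAPI.ai's documentation for the real values.

```python
import json
import os
import urllib.request

# Hypothetical placeholder endpoint; substitute the URL from AnyAPI.ai's docs.
API_URL = "https://api.example.com/v1/chat/completions"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat request; only `model` changes between providers."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Hypothetical environment variable holding your API key
            "Authorization": f"Bearer {os.environ.get('ANYAPI_API_KEY', '')}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Same prompt, different models (hypothetical IDs): only the model string changes.
requests_by_model = {
    m: build_chat_request(m, "Summarize our refund policy in two sentences.")
    for m in ("mistral-medium", "gpt-4o", "claude-3-5-sonnet")
}
# To send one: urllib.request.urlopen(requests_by_model["mistral-medium"])
```

Keeping the model name as the only variable makes side-by-side comparisons straightforward: run the same prompts through each entry and compare output quality, latency, and token usage.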

No Hosting Required

Skip infrastructure setup. Access open-weight models via API without managing your own inference stack.

Usage-Based Billing

Scale with demand and pay only for what you use, a model well suited to agile teams.
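Usage-based pricing is usually quoted per million input and output tokens, so monthly spend is simple arithmetic. The prices below are hypothetical placeholders, not Mistral Medium's or AnyAPI.ai's actual rates; substitute the figures on your plan.

```python
# Hypothetical prices in USD per 1M tokens; replace with your plan's actual rates.
INPUT_PRICE_PER_M = 0.40
OUTPUT_PRICE_PER_M = 2.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for a given number of input (prompt) and output (completion) tokens."""
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


# Example workload: 10,000 requests/day, ~800 input and ~200 output tokens each.
daily = estimate_cost(10_000 * 800, 10_000 * 200)
print(f"daily: ${daily:.2f}, 30-day: ${daily * 30:.2f}")
```

Because output tokens are often priced higher than input tokens, capping reply length tends to have a larger effect on cost than trimming prompts.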

Developer Tools and Analytics

Gain full visibility into usage with built-in logs, token tracking, and performance insights.
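If you also want token tracking in your own logs, per-model totals can be aggregated from responses. This sketch assumes each response is a dict with a `model` field and an OpenAI-style `usage` object (`prompt_tokens`, `completion_tokens`); AnyAPI.ai's actual response schema may differ.

```python
from collections import defaultdict
from typing import Dict, Iterable


def tally_usage(responses: Iterable[dict]) -> Dict[str, Dict[str, int]]:
    """Sum prompt and completion tokens per model.

    Assumes an OpenAI-style `usage` object; the real schema may differ.
    """
    totals: Dict[str, Dict[str, int]] = defaultdict(
        lambda: {"prompt_tokens": 0, "completion_tokens": 0}
    )
    for r in responses:
        usage = r.get("usage", {})                 # missing usage counts as zero
        entry = totals[r.get("model", "unknown")]  # group by model name
        entry["prompt_tokens"] += usage.get("prompt_tokens", 0)
        entry["completion_tokens"] += usage.get("completion_tokens", 0)
    return dict(totals)


# Usage with hand-written sample responses (illustrative data only).
sample = [
    {"model": "mistral-medium", "usage": {"prompt_tokens": 120, "completion_tokens": 40}},
    {"model": "mistral-medium", "usage": {"prompt_tokens": 80, "completion_tokens": 25}},
]
print(tally_usage(sample))
```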

Superior to OpenRouter or AIMLAPI

AnyAPI.ai delivers stronger observability, faster provisioning, and better multi-model orchestration.

Use Mistral Medium via the AnyAPI.ai API for Fast, Flexible LLM Apps

Mistral Medium provides an optimal balance of speed, affordability, and open access, making it a strong fit for scaling practical AI features quickly.

Integrate Mistral Medium via AnyAPI.ai and deliver fast, efficient LLM experiences today.

Sign up, get your API key, and start building in minutes.