Lightning-Fast API Access to a Lightweight, Scalable LLM

Claude 3.5 Haiku is a lightweight, high-performance large language model developed by Anthropic, optimized for real-time applications and low-latency environments. As the most efficient model in the Claude 3.5 family, Haiku is engineered for speed, affordability, and alignment—making it ideal for production AI tools, responsive chat interfaces, and scalable backend services.

Positioned as the smaller of the two models in Anthropic's Claude 3.5 lineup, alongside Claude 3.5 Sonnet, Haiku balances fast inference with solid reasoning skills. It is designed for teams that need rapid responses, cost-effective deployments, and easy integration across a variety of applications, without sacrificing safety or reliability.

Whether you’re building internal tools, customer-facing AI products, or embedding generative intelligence into your stack, Claude 3.5 Haiku offers powerful capabilities through a lightweight interface.

Key Features of Claude 3.5 Haiku

Ultra-Low Latency

Claude 3.5 Haiku is designed for low response times, roughly 150–200 ms on standard prompts, making it well suited to interactive UIs, real-time chat, and streaming responses. It delivers significantly lower latency than larger models like Claude Opus or GPT-4 Turbo.

Efficient Context Handling

With a context window of up to 200,000 tokens, Claude 3.5 Haiku can process and reason over long documents or conversations, supporting summarization, search, and code analysis at scale.
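
As a rough illustration of working within the 200,000-token window, the sketch below estimates whether a text fits and splits it into chunks when it does not. The ratio of 4 characters per token is a common rule of thumb for English text, not a measurement from Claude's tokenizer, and the function names and the reserved output budget are assumptions made for this example.

```python
CONTEXT_WINDOW_TOKENS = 200_000  # context window stated in this document
CHARS_PER_TOKEN = 4              # assumption: rough heuristic for English text


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate (ceiling of len(text) / CHARS_PER_TOKEN)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """Check whether the text plus a reserved output budget fits the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


def split_into_chunks(text: str, max_tokens: int) -> list[str]:
    """Split text into pieces whose estimated size is at most max_tokens."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    max_chars = max_tokens * CHARS_PER_TOKEN
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]
```

For production use, an exact token count from the provider is preferable to a character heuristic; the estimate here is only meant for quick pre-checks before sending a request.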

High Alignment and Safety

Anthropic’s Constitutional AI framework ensures that Claude 3.5 Haiku maintains strong alignment, making it safe for use in customer support, education, and regulated industries.

Multilingual Understanding

Haiku supports more than 20 major languages, including English, Spanish, French, German, Japanese, Korean, and Mandarin, making it suitable for global apps and multilingual knowledge systems.

Developer-Friendly Integration

Claude 3.5 Haiku is production-ready, available through REST APIs and SDKs with excellent uptime and observability. It integrates seamlessly with existing AI stacks and workflows.
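
To make the REST integration concrete, here is a minimal sketch that builds a JSON POST request using only the Python standard library. The endpoint URL, the model identifier, the bearer-token header, and the chat-style payload shape are all placeholders chosen for illustration, not a documented API; check your provider's reference for the real values.

```python
import json
import urllib.request

# Assumptions for illustration only: the URL, model identifier, auth scheme,
# and payload shape are placeholders, not a documented API.
API_URL = "https://api.example.com/v1/chat/completions"
MODEL_ID = "claude-3-5-haiku"


def build_request(api_key: str, prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a single-turn prompt."""
    payload = {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes the payload easy to inspect and unit-test without network access.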

Use Cases for Claude 3.5 Haiku

Real-Time Chatbots for SaaS and Support

With fast responses and strong language understanding, Claude 3.5 Haiku powers chat interfaces for customer support, onboarding bots, and SaaS copilots without slowing down the user experience.

Code Generation in Lightweight IDE Extensions

Although it is not optimized for deep code reasoning the way Claude Opus is, Haiku supports useful autocompletion, documentation drafting, and bug descriptions in IDEs or no-code tools where speed matters.

Rapid Document Summarization for Legal and Research Teams

Use Claude 3.5 Haiku to quickly summarize legal agreements, research papers, and lengthy internal documents, delivering instant insights without heavy compute loads.

Workflow Automation Across Internal Ops and CRM Systems

Integrate Claude 3.5 Haiku into internal dashboards, CRMs, or productivity tools to automate note-taking, ticket summarization, or content classification in real time.

Enterprise Knowledge Base Search

Enable contextual search and document Q&A in corporate knowledge systems, internal wikis, or HR platforms—Haiku processes large input contexts while keeping latency low.

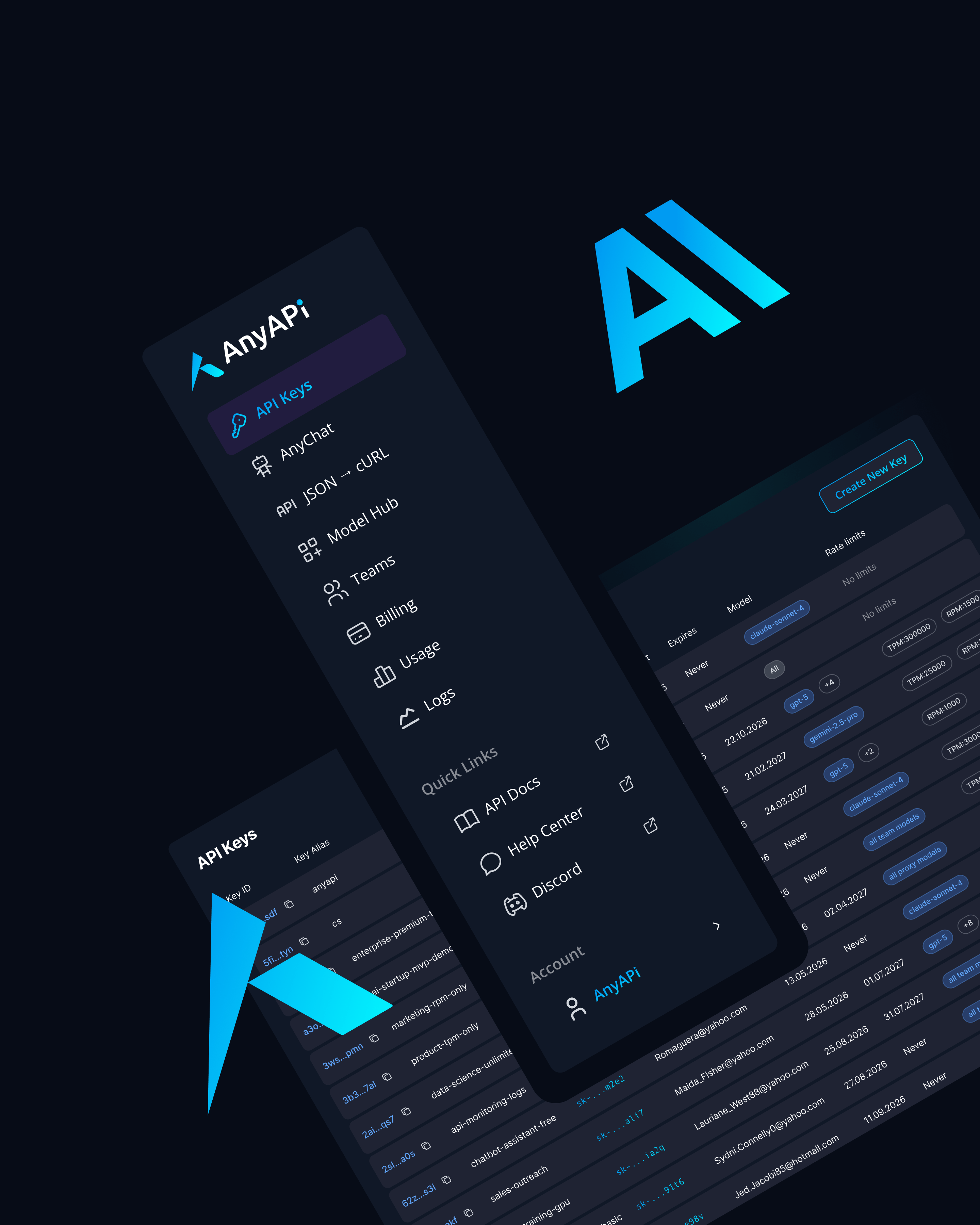

Why Use Claude 3.5 Haiku via AnyAPI.ai

Unified Access Across LLMs

Deploy Claude 3.5 Haiku along with GPT, Gemini, and Mistral models via one consistent API—ideal for experimentation and production scaling.
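
One pattern that a single multi-model API makes straightforward is ordered fallback: try a fast model first and move to the next if the call fails. The sketch below shows the idea under stated assumptions. The `call_model` function is a hypothetical stand-in for whatever client call you use, and the model identifiers in the usage note are illustrative only.

```python
from typing import Callable, Sequence


def complete_with_fallback(
    prompt: str,
    models: Sequence[str],
    call_model: Callable[[str, str], str],
) -> tuple[str, str]:
    """Try each model in order; return (model_id, reply) from the first success."""
    errors: list[str] = []
    for model_id in models:
        try:
            return model_id, call_model(model_id, prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{model_id}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

A call might look like `complete_with_fallback("Summarize this ticket", ["claude-3-5-haiku", "another-model"], call_model)`, where both identifiers are placeholders. Because the client call is injected, the same function works for experimentation with stubs and for production traffic.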

No Anthropic Account Needed

Start instantly via AnyAPI.ai—no credential provisioning, quotas, or custom access pipelines.

Usage-Based Pricing

Only pay for what you use. Haiku’s affordability makes it ideal for batch jobs, UI integration, and high-frequency apps.

Production-Grade Stability

Get predictable uptime, error tracking, and analytics for live apps and managed services.

Superior to OpenRouter or AIMLAPI

Benefit from AnyAPI.ai’s provisioning guarantees, multi-model orchestration, and detailed usage monitoring.

Technical Specifications

- Context Window: 200,000 tokens

- Latency: ~150–200ms on standard prompts

- Supported Languages: 20+

- Release Year: 2024 (Q4)

- Integrations: REST API, Python SDK, JS SDK, Postman
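
Latency figures such as the one listed above depend on prompt size, region, and network conditions, so it is worth measuring them in your own environment. The following sketch times any zero-argument callable over several runs; the function name and the choice of min, median, and max as summary statistics are assumptions made for this example.

```python
import statistics
import time
from typing import Callable


def measure_latency_ms(call: Callable[[], object], runs: int = 5) -> dict[str, float]:
    """Time `call` over several runs and return min/median/max in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "min": min(samples),
        "median": statistics.median(samples),
        "max": max(samples),
    }
```

Wrap your actual API call in a lambda or small function and pass it as `call`. The median is usually more informative than the mean here, since occasional slow requests can skew an average.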

Integrate Claude 3.5 Haiku via AnyAPI.ai and start building today.

Whether you’re a developer building chatbots, a startup scaling SaaS tools, or a team integrating LLMs into internal workflows, Claude 3.5 Haiku delivers speed, affordability, and safety at scale.

Sign up, get your API key, and launch in minutes.