A New Era in Scalable, Real-Time LLM API Access

GLM 4.1V 9B Thinking is a 9-billion-parameter model from THUDM with vision-language and step-by-step reasoning capabilities, aimed at developers, startups, and data infrastructure teams. It sits in the mid tier, bridging the gap between lightweight models and full-scale flagship systems.

It targets production use and real-time applications, which makes it a good fit for generative AI systems that need to balance performance and cost. It particularly suits teams building LLM-integrated products that need speed and efficiency in their AI workflows.

Its API access and flexible integration options also help when scaling AI-based products.

Why Use GLM 4.1V 9B Thinking via AnyAPI.ai

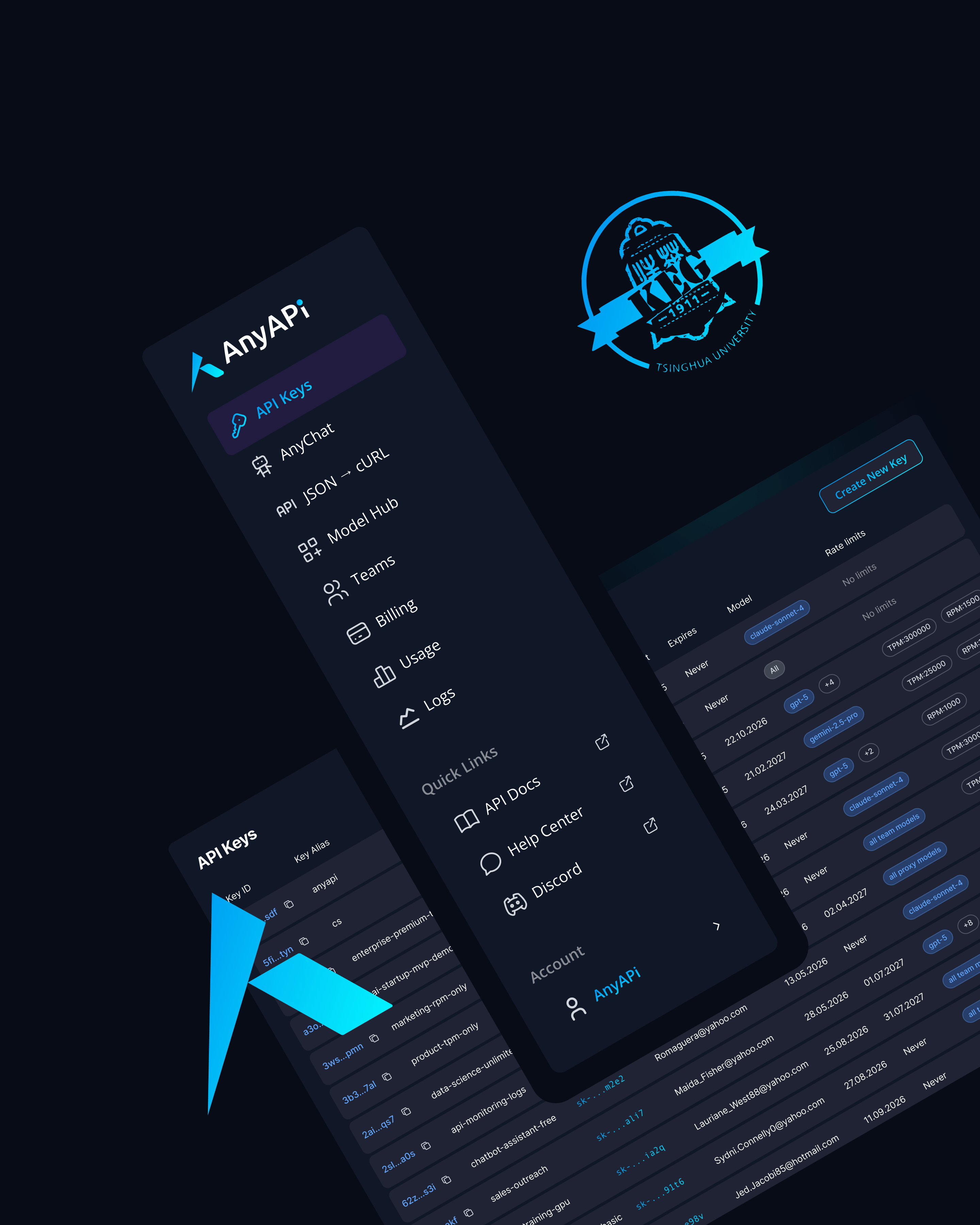

AnyAPI.ai is a unified platform that provides API access to multiple models, including THUDM: GLM 4.1V 9B Thinking. This setup offers:

- Seamless API Access: A single API across multiple models keeps integration straightforward.

- Hassle-Free Onboarding: One-click onboarding and no vendor lock-in give developers flexibility and control over their projects.

- Dynamic Billing Options: Usage-based billing keeps costs down because you pay only for what you actually use.

- Robust Developer Tools: AnyAPI.ai offers developer tools and production-grade infrastructure for running AI workloads reliably.

- Differentiation from Competitors: AnyAPI.ai positions its provisioning and unified access as advantages over platforms such as OpenRouter and AIMLAPI.

Start Using GLM 4.1V 9B Thinking via API Today

For startups, developers, and technology teams looking for a capable yet budget-friendly model, integrating 'THUDM: GLM 4.1V 9B Thinking' via AnyAPI.ai is a practical option.

Sign up, get your API key, and get started in minutes.
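
As a rough illustration of what a first call could involve, the sketch below assembles a chat request in Python without sending it. It assumes an OpenAI-style JSON schema; the endpoint URL, the model identifier, and the `ANYAPI_API_KEY` environment variable are placeholders rather than documented AnyAPI.ai values, so check the platform's documentation for the real ones.

```python
import json
import os

# Hypothetical values: replace with the endpoint and model ID from the AnyAPI.ai docs.
BASE_URL = "https://api.example.com/v1/chat/completions"
MODEL_ID = "thudm/glm-4.1v-9b-thinking"


def build_chat_request(api_key, prompt, model=MODEL_ID, url=BASE_URL):
    """Assemble the URL, headers, and JSON body for one chat request.

    Assumes an OpenAI-style schema (a "messages" list of role/content pairs).
    """
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return {"url": url, "headers": headers, "body": json.dumps(payload)}


if __name__ == "__main__":
    # Read the key from the environment rather than hard-coding it.
    key = os.environ.get("ANYAPI_API_KEY", "YOUR_API_KEY")
    request = build_chat_request(key, "Summarize this release note in one sentence.")
    print(request["url"])
    print(request["body"])
```

The returned dictionary can be handed to any HTTP client, for example `requests.post(request["url"], headers=request["headers"], data=request["body"])`. Keeping request construction separate from sending makes it easy to swap the model identifier when trying other models behind the same API.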

Extend your AI capabilities and join the growing community of teams building with this model.