Reliable, Scalable, and Real-Time: The Magistral Medium 2506 (thinking) API for Effortless LLM Integration

Magistral Medium 2506 (thinking) is a reasoning-focused language model from Mistral, built to simplify the construction of intelligent applications. It sits in the mid tier of the Mistral family, giving developers a balance of capability and flexibility without the cost and commitment of a top-tier model.

Magistral Medium 2506 (thinking) is well suited to production use, supporting real-time applications and a wide range of generative AI systems. It is a reliable option for startups scaling AI-based products and is particularly useful for developers building LLM-integrated tools and software.

Key Features of Magistral Medium 2506 (thinking)

Latency and Real-Time Readiness

The model is optimized for low latency, which makes it suitable for high-demand environments and real-time applications where responses must arrive quickly.

Extended Context Size

Magistral Medium 2506 (thinking) supports a large context window, so long documents and multi-turn conversations can be handled in a single request. This enables in-depth analysis and more precise answers to complex queries.

Alignment and Safety

Safety is a priority: alignment training helps the model recognize and filter biased or harmful content, supporting responsible AI integration.

Language Support

With multilingual capabilities, Magistral Medium 2506 (thinking) handles many languages, making it easier to serve users and markets worldwide.

Developer Experience

The model is built for straightforward deployment across platforms, so developers can integrate it without complex setup.

Use Cases for Magistral Medium 2506 (thinking)

Chatbots for SaaS and Customer Support

The model excels at powering intelligent chatbots for software-as-a-service platforms and at improving customer support with natural, context-aware conversations.

Code Generation for IDEs and AI Development Tools

Use the model's coding capabilities to improve development tools, automate code generation, and streamline IDE workflows.

Document Summarization in Legal Tech and Research

Cut hours of intensive reading with document summarization, useful for legal technology firms and academic research.

Workflow Automation for Internal Ops and CRM

Improve internal efficiency and customer relationship management with automated workflows that reduce manual errors and save time.

Knowledge Base Search for Enterprise Data and Onboarding

Speed up search across large knowledge bases, making information retrieval faster and more effective for enterprise teams and new-hire onboarding.

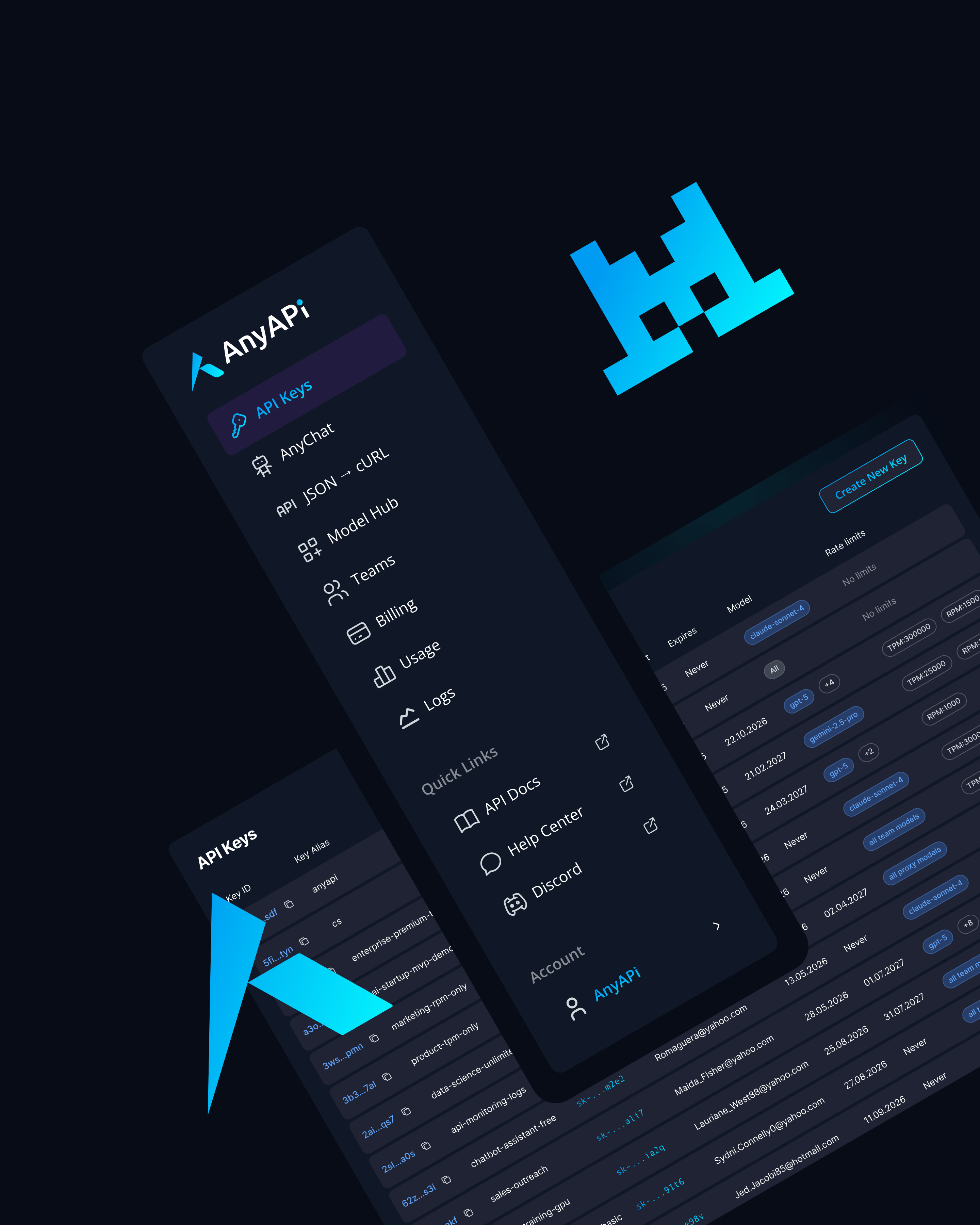

Why Use Magistral Medium 2506 (thinking) via AnyAPI.ai

AnyAPI.ai simplifies access to Magistral Medium 2506 (thinking).

Its unified API covers multiple LLMs, so developers can onboard in a single click and avoid vendor lock-in. AnyAPI.ai also offers usage-based billing and a range of developer tools on production-grade infrastructure, features that set it apart from services such as OpenRouter and AIMLAPI.

Start Using Magistral Medium 2506 (thinking) via API Today

Power your projects with the Magistral Medium 2506 (thinking) model via AnyAPI.ai. Its scalability, real-time readiness, and straightforward integration make it a strong choice for developers, startups, and teams.

Sign up, get your API key, and integrate 'Mistral: Magistral Medium 2506 (thinking)' to elevate your applications today.
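As a minimal sketch of that integration step, the Python snippet below builds a chat request using only the standard library. It assumes AnyAPI.ai exposes an OpenAI-style chat-completions endpoint; the URL, the model identifier `mistral/magistral-medium-2506`, and the `ANYAPI_API_KEY` environment variable are hypothetical placeholders, so confirm the real values in the AnyAPI.ai documentation before use.

```python
import json
import os
import urllib.request

# ASSUMPTION: hypothetical OpenAI-style chat-completions endpoint; check the
# AnyAPI.ai documentation for the real URL.
API_URL = "https://api.anyapi.ai/v1/chat/completions"
# ASSUMPTION: hypothetical model identifier for Magistral Medium 2506 (thinking).
MODEL_ID = "mistral/magistral-medium-2506"


def build_chat_request(api_key: str, prompt: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a single user message."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request over the network and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    key = os.environ.get("ANYAPI_API_KEY")  # hypothetical environment variable name
    if key:
        req = build_chat_request(key, "Summarize the key obligations in this contract clause: ...")
        result = send(req)
        # ASSUMPTION: OpenAI-compatible response shape.
        print(result["choices"][0]["message"]["content"])
    else:
        print("Set ANYAPI_API_KEY to send a request.")
```

Because the model name is a parameter, the same helper could target a different LLM behind a unified API by changing one string, which is what avoiding vendor lock-in looks like in practice.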