Open-Source Flagship LLM for Reasoning, Coding, and RAG via API

DeepSeek V3 is an open-weight flagship model from DeepSeek, designed to compete with top-tier closed LLMs like GPT-4 and Claude Opus in both reasoning and code generation. Released under the MIT license, DeepSeek V3 is pretrained on 14.8 trillion high-quality tokens. In DeepSeek's published evaluations, it performs comparably to GPT-4o and Claude 3.5 Sonnet on many benchmarks.

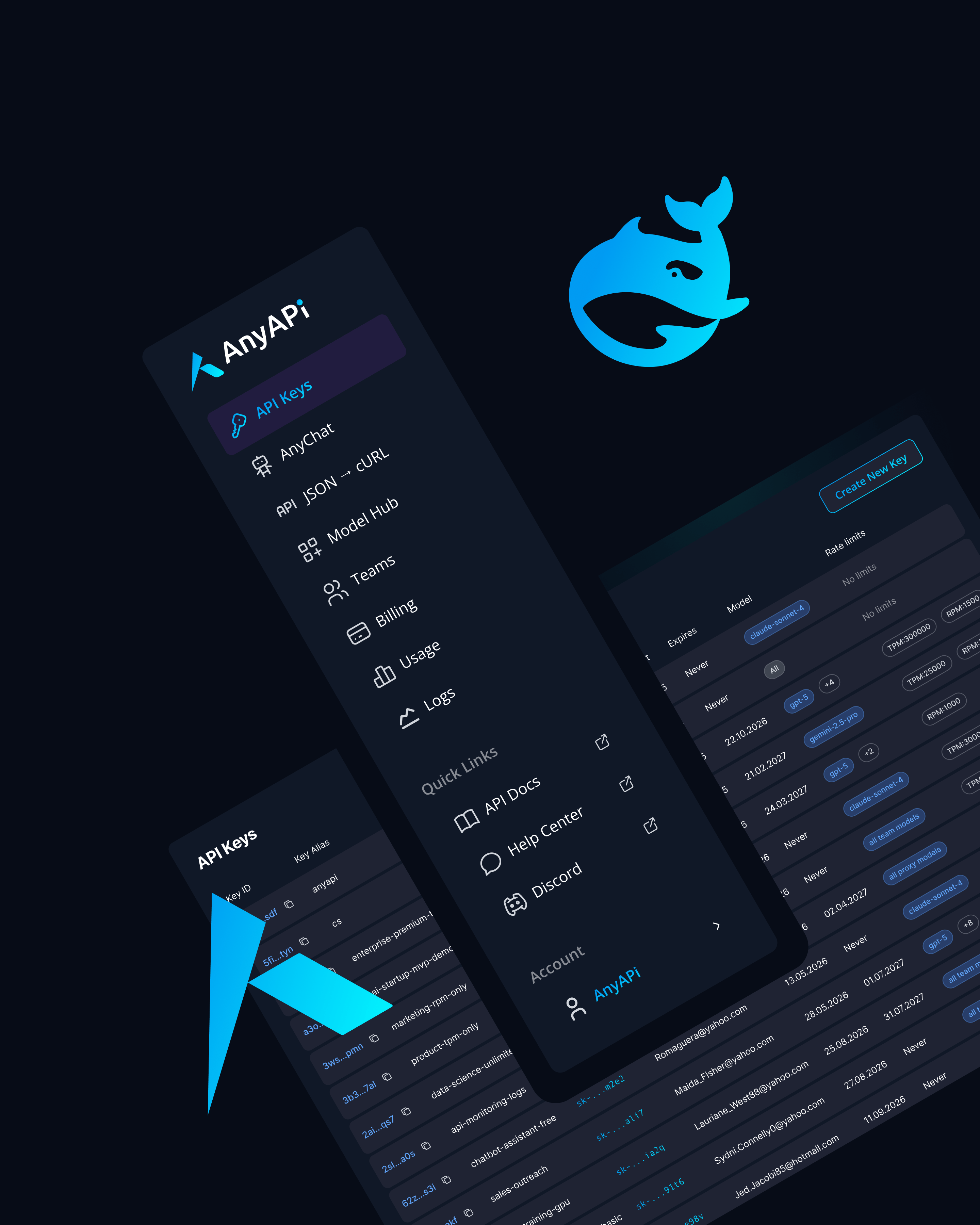

Available now via AnyAPI.ai, DeepSeek V3 gives developers and AI teams access to a high-performance, open model through API endpoints, making it ideal for enterprise-scale tools, autonomous agents, and hybrid search applications.

Key Features of DeepSeek V3

MIT Open License with Commercial Rights

Run, host, and modify the model freely for production use, either locally or in the cloud.

Top-Tier Reasoning and Coding Performance

Outperforms GPT-3.5 Turbo and rivals GPT-4 on math, code generation, and multi-turn tasks.

Multilingual and Alignment-Aware

Supports fluent interaction in multiple languages with strong instruction-following ability.

Built for Scalable API and Local Use

Whether you access it through an API, download the weights from Hugging Face, or run it on bare metal, DeepSeek V3 is deployment-ready.

Use Cases for DeepSeek V3

Code Copilots and IDE Integration

Build intelligent developer assistants for autocompletion, documentation, and error explanation.

Retrieval-Augmented Generation (RAG)

Combine V3 with vector databases and grounding sources for accurate, context-aware answers.
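The retrieve-then-generate pattern behind RAG can be sketched in a few lines. This is a minimal, self-contained illustration under stated assumptions, not DeepSeek's or AnyAPI.ai's implementation: a toy bag-of-words vector stands in for a real embedding model and vector database, and the prompt template in the hypothetical `build_grounded_prompt` helper is an assumption. The string it returns is what you would send to DeepSeek V3 as the user message.

```python
import math
import re
from collections import Counter

# Toy "embedding": bag-of-words term counts. A real RAG pipeline would call an
# embedding model and query a vector database instead (assumption).
def embed(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_grounded_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Hypothetical prompt template: number the retrieved passages and ask
    the model to answer only from them."""
    context = "\n".join(f"[{i + 1}] {d}" for i, d in enumerate(retrieve(query, docs, k)))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )

docs = [
    "Refunds are issued within 14 days of a returned order.",
    "Our office is closed on public holidays.",
    "Shipping to Europe takes 5 to 7 business days.",
]
print(build_grounded_prompt("How long do refunds take?", docs, k=1))
```

Replacing `embed` with a real embedding model and `retrieve` with a vector-database query keeps the same shape: rank passages by similarity, then ground the model's answer in the top matches.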

Autonomous Agents and Planners

Power task solvers, multi-agent systems, and product workflow automation with reliable reasoning.

Enterprise NLP Tools

Use DeepSeek V3 for classification, summarization, entity recognition, or domain-specific QA.

Secure On-Premise AI Deployment

Self-host the open weights on your own infrastructure to help meet data-residency and privacy requirements in regulated industries.

Why Use DeepSeek V3 via AnyAPI.ai

No Setup or Infrastructure Required

Skip downloading model weights and deploying containers; access DeepSeek V3 with a single API call.
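What "a single API call" looks like in practice can be sketched with the Python standard library alone. This is a hedged sketch, not AnyAPI.ai's documented API: `BASE_URL` is a placeholder, `MODEL_ID` is a hypothetical identifier, and the request and response shapes assume the widely used OpenAI-style chat-completions convention. Check the provider's documentation for the actual endpoint, model name, and fields.

```python
import json
import os
import urllib.request

# Placeholders (assumptions): substitute the base URL and model identifier
# from AnyAPI.ai's documentation. The payload follows the common
# OpenAI-style chat-completions shape, which the real API may not match.
BASE_URL = "https://api.example.com/v1"
MODEL_ID = "deepseek-v3"

def build_chat_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a single chat-completion HTTP request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's text
    (assumes an OpenAI-style response body)."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]

if __name__ == "__main__":
    # Builds the request only; call send(req) against a real endpoint.
    req = build_chat_request("Summarize this changelog.", os.environ.get("ANYAPI_KEY", ""))
    print(req.get_method(), req.full_url)
```

Because the model is just a field in the request body, targeting a different model on the same key would, under this assumed convention, only mean passing a different `model` value.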

Unified SDK for All Models

Integrate DeepSeek V3 alongside GPT-4, Claude, Gemini, and Mistral with one API key.

Cost-Optimized for Frequent Use

Get premium model performance without premium pricing.

Better Latency and Stability Than Hugging Face Inference or OpenRouter

Production-tuned endpoints ensure consistent availability.

Full Analytics and Logging

Track prompt history, token usage, and performance metrics in real time.

Build AI Products with DeepSeek V3 and Full Control

DeepSeek V3 is one of the most powerful open-weight models available, well suited to teams that need transparency, performance, and flexibility.

Start building with DeepSeek V3 via AnyAPI.ai and scale up reasoning, coding, and intelligent agents with no setup required.