Open-Weight Reasoning LLM for API-Based RAG, Agents, and Local AI Deployment

DeepSeek R1 is DeepSeek's first-generation open-weight reasoning model, designed to rival proprietary LLMs in reasoning, coding, and research applications. Trained with 10T+ high-quality tokens and released under the permissive MIT license, R1 is well suited to retrieval-augmented generation (RAG), developer tools, and local inference use cases.

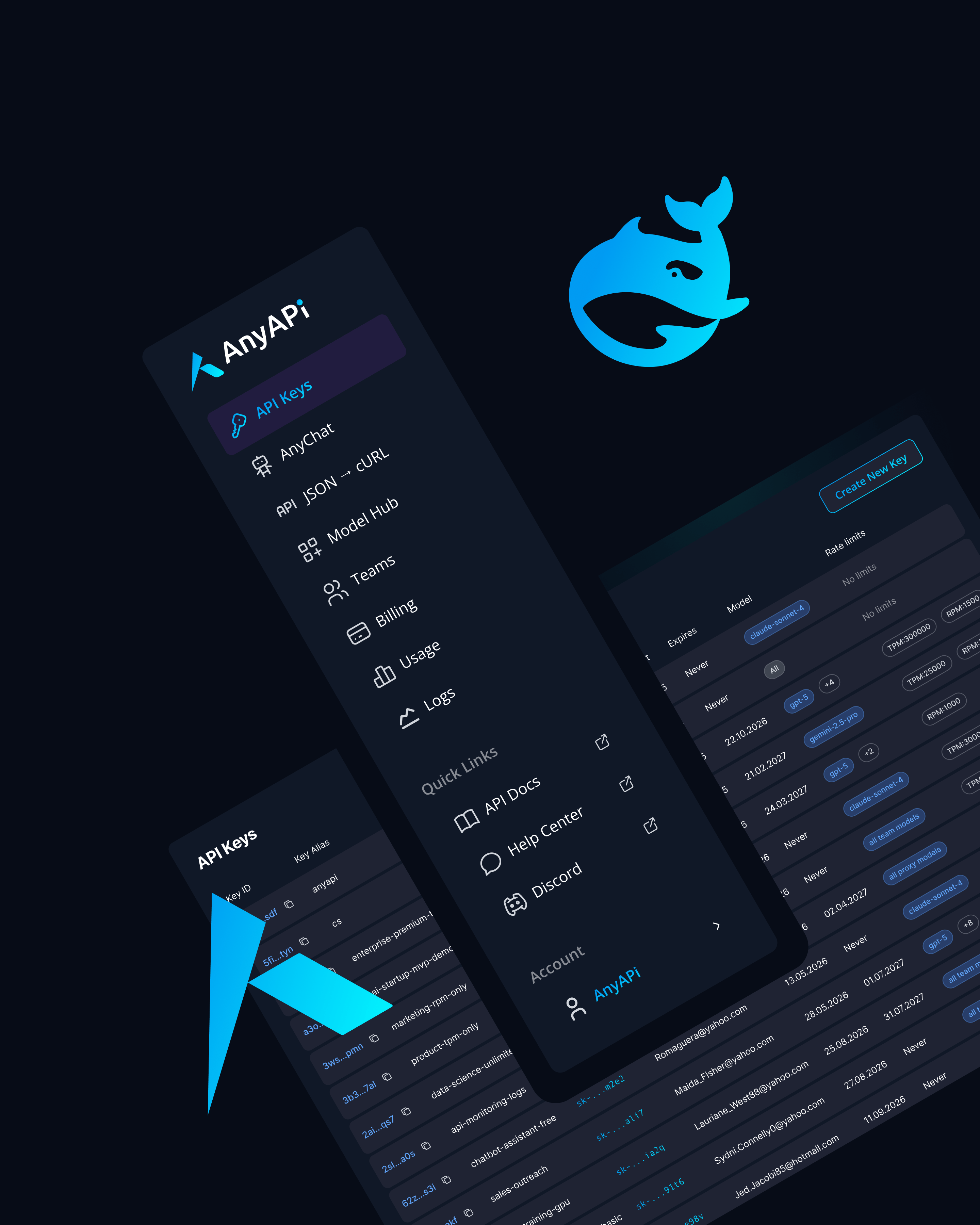

Now available via AnyAPI.ai, DeepSeek R1 gives developers and ML teams full access to advanced generative AI capabilities - without vendor lock-in, expensive tokens, or closed black-box architectures.

Key Features of DeepSeek R1

MIT-Licensed Open Weights

Freely deploy, fine-tune, and distribute with full commercial rights and self-hosting capability.

Strong Reasoning and Coding Performance

DeepSeek's published benchmarks report R1 performing comparably to OpenAI o1 on math, logic, and code generation tasks, well beyond GPT-3.5 Turbo.

Multilingual Capability

Supports English, Chinese, and several other major languages for global application scenarios.

Fast Inference with Customizable Runtime

Runs on both GPU and CPU setups using optimized weights when self-hosted, or can be accessed instantly through the AnyAPI.ai API.

Use Cases for DeepSeek R1

Retrieval-Augmented Generation (RAG)

Combine R1 with vector stores to power intelligent Q&A, support bots, and document agents.
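As a rough illustration of the retrieval step, the sketch below ranks a few documents against a question and assembles a grounded prompt for R1. It is a toy under stated assumptions: a bag-of-words cosine similarity stands in for a real embedding model and vector store, and the names `retrieve`, `build_prompt`, and `DOCS` are hypothetical, not part of any AnyAPI.ai or DeepSeek SDK.

```python
import math
import re
from collections import Counter

def tokenize(text):
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    # Cosine similarity between two token-count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=2):
    # Stand-in for a vector-store lookup: score every document, keep the top k
    # that share at least one token with the query.
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]

def build_prompt(query, passages):
    # Number the passages so the model can cite them in its answer.
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the numbered context. Cite passage numbers.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

# Hypothetical sample corpus for illustration only.
DOCS = [
    "Refunds are issued within 14 days of a return request.",
    "Support is available by email on weekdays.",
    "Passwords must be rotated every 90 days.",
]
```

In a real deployment the string returned by `build_prompt` would be sent to R1 as the user message, and `retrieve` would be replaced by a query against an actual vector index.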

Coding Copilots and Scripting Tools

Use R1 to suggest functions, refactor code, or create bash/Python scripts in development environments.
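A copilot usually wants only the final suggestion, not the model's chain of thought. Self-hosted R1 typically emits its reasoning between `<think>` and `</think>` tags before the answer; hosted APIs may instead return the reasoning in a separate field, and this page does not say which AnyAPI.ai does. The helper below is a minimal sketch for the tagged case, and `split_reasoning` is a hypothetical name.

```python
import re

# Matches the first <think>...</think> block, including newlines inside it.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(raw):
    """Return (reasoning, answer) from raw model output.

    If no <think> block is present, reasoning is an empty string and the
    whole output is treated as the answer.
    """
    match = THINK_RE.search(raw)
    if match is None:
        return "", raw.strip()
    reasoning = match.group(1).strip()
    # Remove the reasoning block and keep whatever surrounds it.
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return reasoning, answer
```

The answer half can then be inserted into the editor, while the reasoning half is logged or shown on demand.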

Internal QA and Knowledge Management

Deploy in enterprise search systems to parse long manuals, policy docs, or compliance databases.
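Long manuals rarely fit in a single prompt, so a common first step is to split them into overlapping chunks before indexing or summarizing. The sketch below shows one simple way to do that by word count; the function name and the default sizes are illustrative assumptions, not recommended settings for R1.

```python
def chunk_words(text, size=200, overlap=40):
    """Split text into chunks of up to `size` words, each sharing
    `overlap` words with the previous chunk."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("size must be positive and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        # Stop once this chunk reaches the end of the text.
        if start + size >= len(words):
            break
    return chunks
```

The overlap keeps sentences that straddle a boundary visible in both neighboring chunks, at the cost of some duplicated tokens.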

Offline and Private AI Assistants

Deploy DeepSeek R1 in fully private or air-gapped systems, including sovereign cloud or edge compute environments.

Multilingual Document Summarization

Summarize, translate, or annotate content in English, Chinese, and other supported languages.

Why Use DeepSeek R1 via AnyAPI.ai

No Hosting Required

Skip infrastructure setup and get instant API access to DeepSeek R1 through scalable endpoints.

Unified API for Multiple Models

Integrate DeepSeek R1 alongside GPT-4o, Claude, Gemini, and Mistral in one platform.
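To make the idea of a unified API concrete, the sketch below builds the same request for different models by changing only the model identifier. Everything specific here is an assumption: the page does not document the request format, so the code assumes an OpenAI-style chat-completions schema, and the URL and model IDs are placeholders. Check the AnyAPI.ai documentation for the real values.

```python
import json
import urllib.request

# Hypothetical placeholder endpoint; replace with the documented URL.
BASE_URL = "https://api.example.invalid/v1/chat/completions"

def build_chat_request(model, user_message, api_key):
    """Build (but do not send) a chat request for the given model."""
    payload = {
        "model": model,  # the only field that changes between providers
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        BASE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

# Model IDs below are illustrative guesses, not confirmed identifiers.
requests_by_model = {
    m: build_chat_request(m, "Summarize our refund policy.", "YOUR_API_KEY")
    for m in ("deepseek-r1", "gpt-4o")
}
```

Once the real endpoint and key are substituted, each request could be sent with `urllib.request.urlopen(req)` and the responses compared side by side.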

Flexible Usage Billing

Pay-as-you-go pricing with detailed usage metrics and team-level controls.

Production-Ready Infrastructure

Monitor token counts, latency, and throughput from a real-time dashboard.
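The same three metrics can also be tracked on the client side, which is useful for cross-checking the dashboard. The sketch below is a minimal in-memory aggregator; `UsageLog` and its field names are hypothetical, and the token counts are assumed to come from whatever usage data the API response provides.

```python
from dataclasses import dataclass, field
from statistics import mean

@dataclass
class UsageLog:
    # Each record is (prompt_tokens, completion_tokens, latency_seconds).
    records: list = field(default_factory=list)

    def add(self, prompt_tokens, completion_tokens, latency_s):
        self.records.append((prompt_tokens, completion_tokens, latency_s))

    def summary(self):
        if not self.records:
            return {"requests": 0, "total_tokens": 0,
                    "mean_latency_s": 0.0, "tokens_per_s": 0.0}
        total_completion = sum(r[1] for r in self.records)
        total_latency = sum(r[2] for r in self.records)
        return {
            "requests": len(self.records),
            "total_tokens": sum(r[0] + r[1] for r in self.records),
            "mean_latency_s": mean(r[2] for r in self.records),
            # Throughput: generated tokens per second of request time.
            "tokens_per_s": total_completion / total_latency if total_latency else 0.0,
        }
```

Here throughput is defined as completion tokens divided by total request time, which is one reasonable convention; a dashboard may compute it differently.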

Better Support and Reliability than OpenRouter

Access tuned environments with consistent uptime and premium provisioning.

Use DeepSeek R1 for Transparent, High-Performance AI

DeepSeek R1 combines open-weight access, strong reasoning performance, and fast inference, making it well suited to RAG, internal AI agents, and coding copilots.

Start using DeepSeek R1 via AnyAPI.ai - no setup, full control, real-time speed.

Sign up, get your key, and start building today.