Qwen’s Open-Weight LLM for Reasoning, Coding, and Research

QwQ 32B Preview is Qwen’s experimental large language model, built to showcase reasoning, coding, and natural language generation in a comparatively compact 32-billion-parameter model. As part of Qwen’s ongoing open-weight initiative, it gives developers early access to the model while keeping the weights open for inspection and flexible deployment.

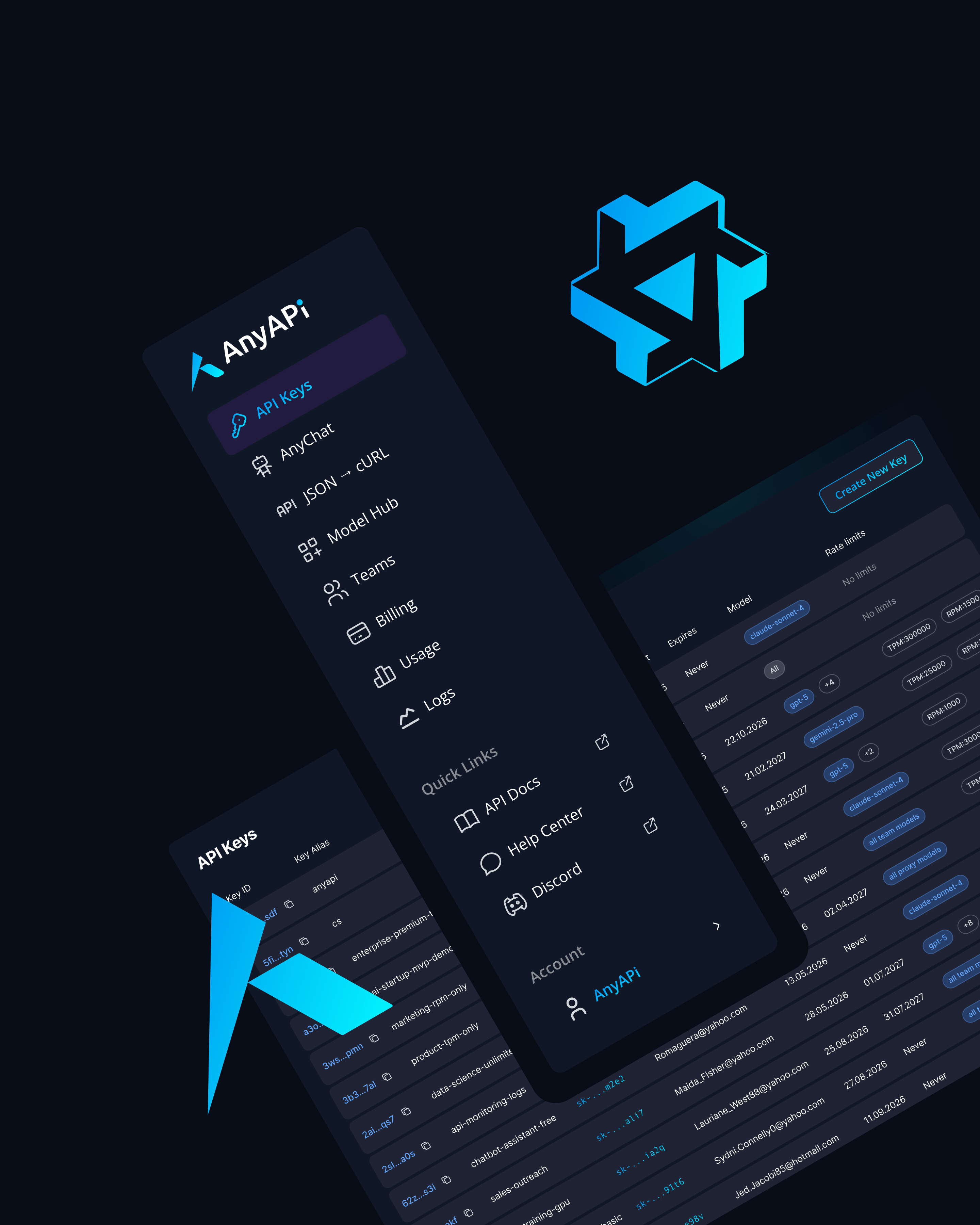

Available via AnyAPI.ai, QwQ 32B Preview can be integrated into production environments without direct setup, GPU provisioning, or vendor lock-in.
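As a rough illustration of what calling a hosted model over HTTP can look like, here is a minimal sketch using only the Python standard library. It makes several assumptions that do not come from this page: the endpoint URL is a placeholder, the model identifier `qwq-32b-preview` is hypothetical, and the chat-style JSON body with bearer-token authentication is an assumed request shape. Check the provider's documentation for the real values before using it.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the values from the provider's docs.
API_URL = "https://api.example.com/v1/chat/completions"  # assumed endpoint, not a real URL
MODEL_ID = "qwq-32b-preview"  # assumed model identifier


def build_chat_request(prompt: str, api_key: str, max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a chat-style completion."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Explain binary search in two sentences.", api_key="YOUR_API_KEY")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually send it, pass `req` to urllib.request.urlopen and
    # JSON-decode the response body.
```

The request is built separately from sending so the payload can be inspected or logged first, which is helpful when an experimental model's parameters are still being tuned.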