Anthropic’s Balanced Multimodal LLM for Real-Time, Scalable API Integration

Claude 3.7 Sonnet is Anthropic’s mid-tier, production-grade LLM designed to balance speed, cost, and reasoning quality. Sitting in the Sonnet tier of the Claude family, between the lighter Haiku models and the larger Opus models, it performs strongly on both text-only and multimodal workloads, making it a top choice for developers building real-time SaaS applications, chatbots, and content-automation systems.

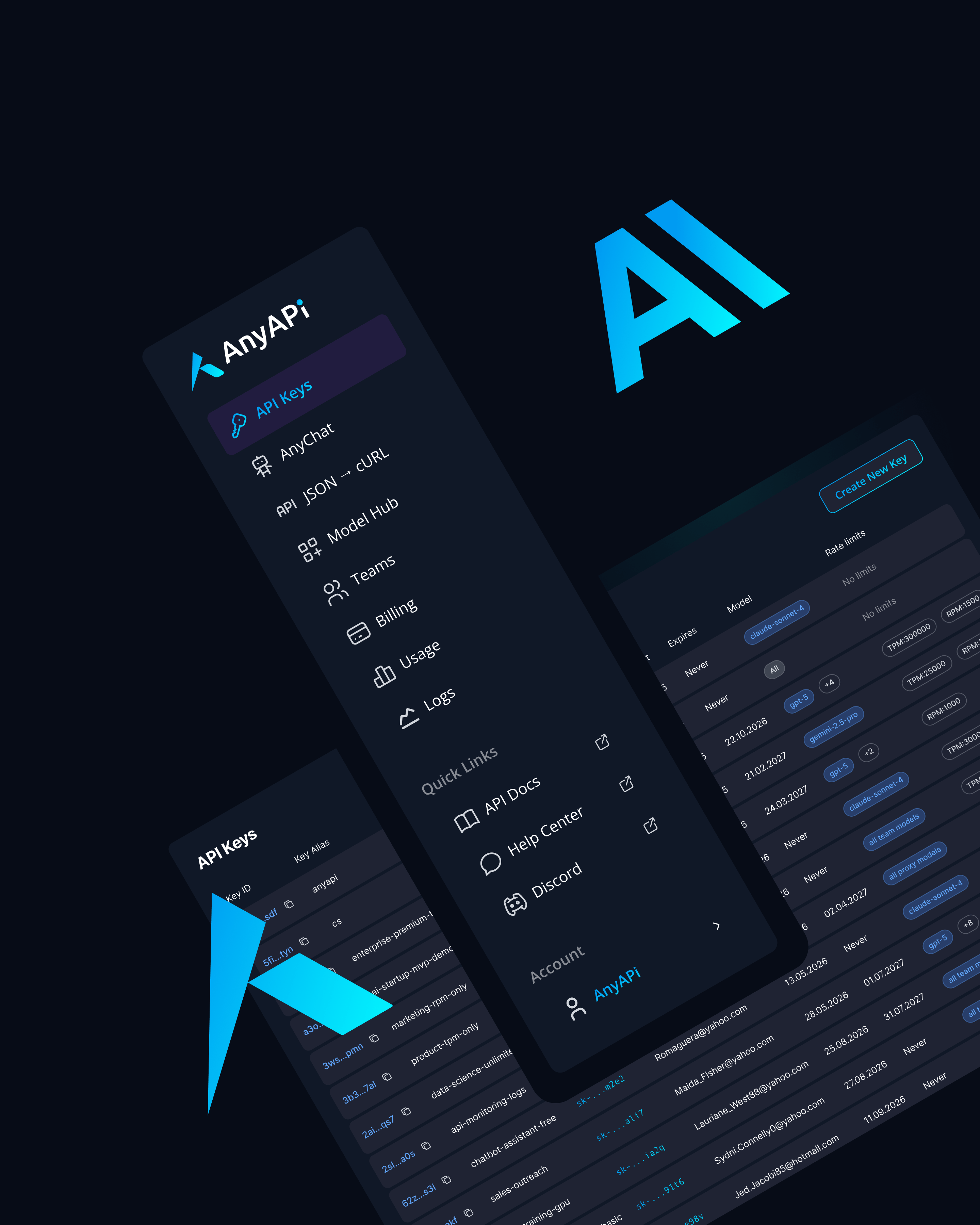

Available via AnyAPI.ai, Claude 3.7 Sonnet combines low-latency, high-accuracy output with a long context window, making it well suited to customer-facing tools, enterprise knowledge retrieval, and AI-driven content workflows.
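A minimal sketch of a single request, assuming AnyAPI exposes an OpenAI-compatible chat-completions endpoint with bearer-token authentication. The URL and model identifier below are hypothetical placeholders, not documented AnyAPI values; take the real ones from AnyAPI's documentation. Only the Python standard library is used.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the endpoint and model name
# given in AnyAPI's documentation.
ANYAPI_URL = "https://example.invalid/v1/chat/completions"
MODEL_ID = "claude-3-7-sonnet"


def build_chat_request(api_key: str, prompt: str, max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completion request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        ANYAPI_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Usage: `reply = send(build_chat_request(api_key, "Summarize this ticket: ..."))`. If the gateway mirrors the OpenAI response shape (again an assumption), the generated text is in `reply["choices"][0]["message"]["content"]`.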

Key Features of Claude 3.7 Sonnet

Balanced Performance and Cost

Optimized for production workloads that need quality close to flagship models without flagship-level inference costs.

Multimodal Support (Text + Vision)

Process text alongside images, diagrams, and scanned documents for richer applications.
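The sketch below shows how an image and a question can travel together in one user message. It follows the content-block layout of Anthropic's native Messages API (a base64 `image` block followed by a `text` block, image first); whether AnyAPI accepts this shape directly or expects an OpenAI-style `image_url` part is an assumption to check against its docs. The helper name `image_message` is hypothetical.

```python
import base64
import mimetypes
from pathlib import Path

# Image formats accepted by Anthropic's vision input.
SUPPORTED_TYPES = {"image/jpeg", "image/png", "image/gif", "image/webp"}


def image_message(image_path: str, question: str) -> dict:
    """Hypothetical helper: one user message pairing an image with a question,
    using Anthropic Messages API content blocks."""
    media_type, _ = mimetypes.guess_type(image_path)
    if media_type not in SUPPORTED_TYPES:
        raise ValueError(f"unsupported image type: {media_type}")
    data = base64.standard_b64encode(Path(image_path).read_bytes()).decode("ascii")
    return {
        "role": "user",
        "content": [
            # Image block first, then the text that refers to it.
            {"type": "image", "source": {"type": "base64", "media_type": media_type, "data": data}},
            {"type": "text", "text": question},
        ],
    }
```

The returned dict goes into the `messages` list of a request; scanned pages, charts, and diagrams are all sent the same way.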

Extended Context Window (200k Tokens)

Ideal for RAG, summarizing large documents, and long multi-turn conversations whose full history is sent back with each request.
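Even 200k tokens is a finite budget, so a RAG or summarization pipeline usually decides how much source text fits in each request. A minimal sketch, assuming a rough four-characters-per-token heuristic in place of a real tokenizer and hypothetical reserves for the prompt scaffolding and the model's reply:

```python
from typing import List, Tuple

CONTEXT_WINDOW = 200_000  # tokens, per the figure above


def estimate_tokens(text: str) -> int:
    """Very rough estimate (~4 characters per token for English prose).
    Swap in a real token counter for accurate budgets."""
    return max(1, (len(text) + 3) // 4)


def pack_documents(
    docs: List[str],
    context_window: int = CONTEXT_WINDOW,
    reserve_for_output: int = 4_096,  # hypothetical room left for the reply
    reserve_for_prompt: int = 1_000,  # hypothetical room for instructions
) -> Tuple[List[str], int]:
    """Take documents in order (e.g. sorted by relevance) until the budget is spent.

    Returns the documents that fit and their estimated token total."""
    budget = context_window - reserve_for_output - reserve_for_prompt
    packed: List[str] = []
    used = 0
    for doc in docs:
        cost = estimate_tokens(doc)
        if used + cost > budget:
            break  # stop at the first document that does not fit, preserving order
        packed.append(doc)
        used += cost
    return packed, used
```

Stopping at the first document that overflows keeps the highest-ranked material; a pipeline that prefers to fill the window could `continue` instead and skip only the oversized document.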

Low-Latency Responses (~500–800ms)

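Fast enough for real-time UI integrations and interactive apps. Actual latency varies with prompt length, output length, and load, and streaming lets the interface show text as soon as it is generated.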
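Perceived latency drops further when the reply is streamed and rendered as it arrives. A minimal client-side sketch, assuming the stream uses the server-sent-event format of Anthropic's native Messages API (requested with `"stream": true`), where text arrives in `content_block_delta` events carrying a `text_delta`. A gateway that re-encodes the stream, for example into OpenAI's `choices[0].delta` shape, would need a different extraction step. The function name is hypothetical.

```python
import json
from typing import Iterable, Iterator


def iter_text_deltas(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from an Anthropic-style SSE stream, line by line."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip "event:" lines and blank separators
        payload = line[len("data:"):].strip()
        if not payload:
            continue
        event = json.loads(payload)
        if event.get("type") == "content_block_delta":
            delta = event.get("delta", {})
            if delta.get("type") == "text_delta":
                yield delta.get("text", "")
```

With `urllib`, the open response can be fed in directly as `iter_text_deltas(line.decode("utf-8") for line in response)`, appending each fragment to the chat bubble instead of waiting for the complete answer.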

Anthropic’s Constitutional AI Alignment

Enhanced instruction-following, safety, and refusal handling for enterprise-grade deployments.

Use Cases for Claude 3.7 Sonnet

Customer Support and Chatbots

Deploy as a high-quality conversational AI that can reference large policy manuals and product documentation.
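Because each API request is stateless, a chatbot resends the relevant conversation on every call. A minimal sketch, assuming Anthropic Messages API field names (`system`, `messages`, `max_tokens`); the class name, the message cap, and the prompt wording are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SupportSession:
    """Hypothetical helper: bounded chat history grounded in a policy document."""
    policy_text: str
    max_messages: int = 20  # hypothetical cap to keep each request small
    history: List[Dict[str, str]] = field(default_factory=list)

    def add_user(self, text: str) -> None:
        self.history.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self.history.append({"role": "assistant", "content": text})

    def build_payload(self, model: str, max_tokens: int = 1024) -> dict:
        recent = self.history[-self.max_messages:]
        # Drop leading assistant turns so the window opens on a user message.
        while recent and recent[0]["role"] != "user":
            recent = recent[1:]
        system = (
            "You are a customer-support assistant. Answer only from the policy "
            "below; if it does not cover the question, say so.\n\n"
            f"<policy>\n{self.policy_text}\n</policy>"
        )
        return {"model": model, "max_tokens": max_tokens, "system": system, "messages": recent}
```

Putting the policy manual in the system prompt keeps it present on every turn, while the sliding window keeps long conversations within the context budget.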

Knowledge Base Search and RAG

Integrate with vector databases to provide contextual, grounded answers from internal datasets.
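The retrieval step can be sketched in a few lines: rank stored passages by cosine similarity to the query embedding, then hand the top matches to the model with an instruction to answer only from them. This is a brute-force, in-memory stand-in for a vector database; the embeddings are assumed to come from a separate embedding model, and the index layout and function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vec: Sequence[float], index: List[Dict], k: int = 3) -> List[Dict]:
    """Brute-force nearest neighbours; a vector database does this at scale."""
    ranked = sorted(index, key=lambda item: cosine_similarity(query_vec, item["embedding"]), reverse=True)
    return ranked[:k]


def grounded_prompt(question: str, passages: List[Dict]) -> str:
    """Number the passages so the model can cite them."""
    context = "\n\n".join(f"[{i}] {p['text']}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the numbered passages below, citing them "
        "like [1]. If the passages do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {question}"
    )
```

Numbered passages plus an explicit "say so" instruction make it easier to check that each answer is grounded in the retrieved text.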

Content Generation and Editing

Produce marketing copy, technical documentation, and creative drafts with minimal post-editing.

Data Extraction and Document Parsing

Extract entities, summarize contracts, or process visual documents with OCR-enabled workflows.
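For extraction, a common pattern is to ask the model for JSON that matches a fixed schema and to validate the reply before it reaches downstream systems. A minimal sketch; the contract fields, prompt text, and helper names are hypothetical, and it assumes the reply contains one JSON object, possibly surrounded by prose.

```python
import json
import re
from typing import Any, Dict

# Hypothetical schema for a contract-summary task: field name -> expected type.
CONTRACT_SCHEMA: Dict[str, type] = {
    "party_a": str,
    "party_b": str,
    "effective_date": str,
    "term_months": int,
}

EXTRACTION_PROMPT = (
    "Extract the following fields from the contract and reply with a single JSON "
    "object only: " + ", ".join(CONTRACT_SCHEMA)
)


def parse_extraction(reply: str, schema: Dict[str, type] = CONTRACT_SCHEMA) -> Dict[str, Any]:
    """Pull the JSON object out of a model reply and check required fields and types.

    Raises ValueError (json.JSONDecodeError is a subclass) on any problem."""
    match = re.search(r"\{.*\}", reply, re.DOTALL)  # first "{" to last "}"
    if match is None:
        raise ValueError("no JSON object found in reply")
    data = json.loads(match.group(0))
    for name, expected in schema.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        if not isinstance(data[name], expected):
            raise ValueError(f"field {name!r} should be {expected.__name__}")
    return data
```

Rejecting malformed output early lets the caller retry the request or route the document to manual review instead of storing bad data.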

Code Assistance

Offer in-IDE support for code explanation, refactoring, and simple automation scripting.

Why Use Claude 3.7 Sonnet via AnyAPI

Unified API Across Models

Access Claude Sonnet alongside GPT, Gemini, Mistral, and DeepSeek through a single endpoint.
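When every model sits behind the same request format, switching or falling back between them can come down to changing the model string. A minimal sketch of ordered fallback; `send` stands in for whatever HTTP client you use, and the function name is hypothetical.

```python
from typing import Callable, List, Tuple


def complete_with_fallback(
    prompt: str,
    models: List[str],
    send: Callable[[str, str], str],
) -> Tuple[str, str]:
    """Try each model in order; return (model, reply) from the first that succeeds.

    `send(model, prompt)` performs the request and raises on failure;
    only the model string changes between attempts."""
    errors: List[str] = []
    for model in models:
        try:
            return model, send(model, prompt)
        except Exception as exc:  # sketch: narrow this to your client's error types
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

In practice `models` might list a Claude Sonnet identifier first and a GPT or Gemini identifier second, using whatever names AnyAPI's catalog assigns; the exact strings are not given here.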

No Vendor Lock-In to Anthropic

Run Claude-powered apps without being tied to Anthropic’s platform or quotas.

Scalable, Usage-Based Billing

Pay only for the tokens you use, which suits unpredictable traffic and fast-growing startups.
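Under usage-based pricing, cost is a simple function of token counts: input tokens × input rate + output tokens × output rate. As a worked example at $3 per million input tokens and $15 per million output tokens (Anthropic's published list price for Claude 3.7 Sonnet; AnyAPI's rates may differ), 1,000 requests averaging 2,000 input and 500 output tokens cost 2M × $3/M + 0.5M × $15/M = $6.00 + $7.50 = $13.50. A small budgeting sketch that takes the rates as parameters:

```python
def request_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_price_per_mtok: float,
    output_price_per_mtok: float,
) -> float:
    """Cost of a batch of tokens, with prices quoted in USD per million tokens."""
    return (input_tokens * input_price_per_mtok + output_tokens * output_price_per_mtok) / 1_000_000


def monthly_cost_usd(
    requests_per_day: int,
    avg_input_tokens: int,
    avg_output_tokens: int,
    input_price_per_mtok: float,
    output_price_per_mtok: float,
    days: int = 30,
) -> float:
    """Projected spend for a steady request rate over `days` days."""
    per_request = request_cost_usd(
        avg_input_tokens, avg_output_tokens, input_price_per_mtok, output_price_per_mtok
    )
    return per_request * requests_per_day * days
```

`request_cost_usd(2_000_000, 500_000, 3.0, 15.0)` reproduces the $13.50 figure, and the same traffic every day for 30 days projects to about $405.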

Production-Grade Infrastructure

Enjoy high uptime, low cold-start latency, and built-in monitoring.

Better Provisioning Than OpenRouter or HF Inference

Faster scaling and consistent model availability for live products.

Build Smarter, Faster with Claude 3.7 Sonnet

Claude 3.7 Sonnet hits Anthropic’s sweet spot of cost, speed, and intelligence, making it a strong fit for developers scaling AI-powered products.

Integrate Claude 3.7 Sonnet via AnyAPI today: get your API key and launch in minutes.