The Ultimate API Solution for Scalable, Real-Time LLM Applications

Mistral Nemo is a 12-billion-parameter language model developed by Mistral AI in collaboration with NVIDIA. Within the Mistral model family, Nemo is a compact, general-purpose option that balances versatility and efficiency, which makes it a practical choice for production use and real-time applications.

Mistral Nemo supports AI-driven solutions across a range of industries, from SaaS and customer support to research and operational workflows. It gives teams a scalable, high-performance foundation for creating, deploying, and managing AI applications.

Key Features of Mistral Nemo

Latency and Context Size

Mistral Nemo is small enough to respond with low latency, which matters for real-time applications. Its context window of up to 128k tokens lets it handle large blocks of text in a single request, which is useful for long-document processing and generation tasks.

Alignment and Safety

Nemo is tuned to follow instructions and to reduce the risk of generating biased or inappropriate content. This is particularly valuable in sensitive areas such as legal tech and customer service, where outputs should still be reviewed before they reach end users.

Reasoning Ability

Nemo exhibits advanced reasoning capabilities, deftly processing complex queries and delivering precise answers. This makes it an invaluable tool for knowledge-intensive applications, such as legal tech and enterprise data management.

Language Support and Coding Skills

Supporting multiple languages, Nemo facilitates seamless integration in multilingual environments. Developers will also appreciate its exceptional coding skills, making Nemo a strong ally in the development of IDEs and AI-enhanced dev tools.

Real-Time Readiness and Deployment Flexibility

Engineered for real-time readiness, Mistral Nemo can be deployed rapidly, accommodating dynamic environments with ease. Its flexible integration options allow for seamless coupling with existing systems, enhancing developer experience and operational efficiency.

Use Cases for Mistral Nemo

Chatbots for SaaS and Customer Support

With Mistral Nemo, developers can build chatbots that handle customer interactions end to end. Whether the bot is answering inquiries on a SaaS platform or providing 24/7 customer support, Nemo helps keep responses swift and contextually appropriate.
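
Keeping responses contextually appropriate usually means sending recent conversation history with each request. The sketch below shows one way to assemble that history. It assumes a role/content message format of the kind many chat APIs use; the function name, the `max_turns` parameter, and the message shape are illustrative assumptions, not a documented AnyAPI.ai interface.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_chat_messages(
    system_prompt: str,
    history: List[Message],
    user_input: str,
    max_turns: int = 6,
) -> List[Message]:
    """Assemble a message list: system prompt, recent history, new user turn.

    Only the last `max_turns` history messages are kept so the request
    stays small. The role/content shape is an assumed convention.
    """
    recent = history[-max_turns:] if max_turns > 0 else []
    return [
        {"role": "system", "content": system_prompt},
        *recent,
        {"role": "user", "content": user_input},
    ]


# Example: a support conversation with ten earlier messages.
past = [
    {"role": "user" if i % 2 == 0 else "assistant", "content": f"message {i}"}
    for i in range(10)
]
messages = build_chat_messages(
    "You are a support agent for a SaaS product.",
    past,
    "How do I reset my password?",
    max_turns=4,
)
```

Trimming by message count is the simplest policy; a production chatbot might trim by token count instead.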

Code Generation for IDEs and AI Dev Tools

With superior coding capabilities, Mistral Nemo automates code generation, simplifying the development process within IDEs. Its integration into AI dev tools accelerates the coding workflow, aiding developers in delivering robust applications ahead of schedule.

Document Summarization for Legal Tech and Research

Nemo can digest and summarize long documents, condensing legal and research materials into concise overviews. This helps professionals who need to absorb complex information quickly.
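
Very long documents are often summarized in pieces, with each piece sent as its own request and the partial summaries combined afterwards. The following is a minimal sketch of the splitting step only. The character budget, the function names, and the prompt wording are assumptions chosen for illustration; they are not taken from Mistral or AnyAPI.ai documentation.

```python
from typing import List


def split_into_chunks(text: str, max_chars: int = 4000) -> List[str]:
    """Greedily pack paragraphs into chunks of at most `max_chars` characters."""
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    chunks: List[str] = []
    current = ""
    for paragraph in (p.strip() for p in text.split("\n\n")):
        if not paragraph:
            continue
        # A paragraph longer than the budget is hard-split on its own.
        while len(paragraph) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(paragraph[:max_chars])
            paragraph = paragraph[max_chars:]
        if not paragraph:
            continue
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


def summarization_prompts(text: str, max_chars: int = 4000) -> List[str]:
    """Wrap each chunk in a summarization instruction (wording is illustrative)."""
    return [
        f"Summarize the following passage in three sentences:\n\n{chunk}"
        for chunk in split_into_chunks(text, max_chars)
    ]


document = "a" * 10 + "\n\n" + "b" * 10 + "\n\n" + "c" * 10
chunks = split_into_chunks(document, max_chars=25)
```

A character budget is only a rough stand-in for a token budget; a real pipeline would measure chunks with the model's tokenizer.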

Workflow Automation for Internal Ops and CRM

Coupling Mistral Nemo with CRM systems and internal operations enhances automation and process efficiency. By automating repetitive tasks, Nemo frees up valuable resources, allowing teams to focus on strategic initiatives.

Knowledge Base Search for Enterprise Data and Onboarding

Nemo's strength in data synthesis and retrieval enables efficient knowledge base searches. It also smooths onboarding, helping teams and new employees find and absorb enterprise information more quickly.
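
A common pattern for knowledge base search is to select a few relevant passages first and then ask the model to answer from them. The sketch below uses plain keyword overlap for the selection step. That is a deliberately simple stand-in chosen for illustration, not a description of how Nemo or AnyAPI.ai performs retrieval, and all function names here are hypothetical.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rank_passages(query: str, passages: List[str], top_k: int = 2) -> List[str]:
    """Return up to `top_k` passages sharing the most words with the query."""
    query_words = tokenize(query)
    scored = [
        (len(query_words & tokenize(passage)), index, passage)
        for index, passage in enumerate(passages)
    ]
    # Drop passages with no overlap; break ties by original order.
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [passage for _, _, passage in scored[:top_k]]


def build_grounded_prompt(query: str, passages: List[str], top_k: int = 2) -> str:
    """Build a prompt that asks the model to answer only from selected passages."""
    context = "\n".join(f"- {p}" for p in rank_passages(query, passages, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


knowledge_base = [
    "Vacation requests go through the HR portal.",
    "The VPN requires two-factor authentication.",
    "Expense reports are due monthly.",
]
top = rank_passages("How do I request vacation?", knowledge_base)
```

In practice, keyword overlap would usually be replaced by embedding-based or full-text search; the prompt-building step stays the same.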

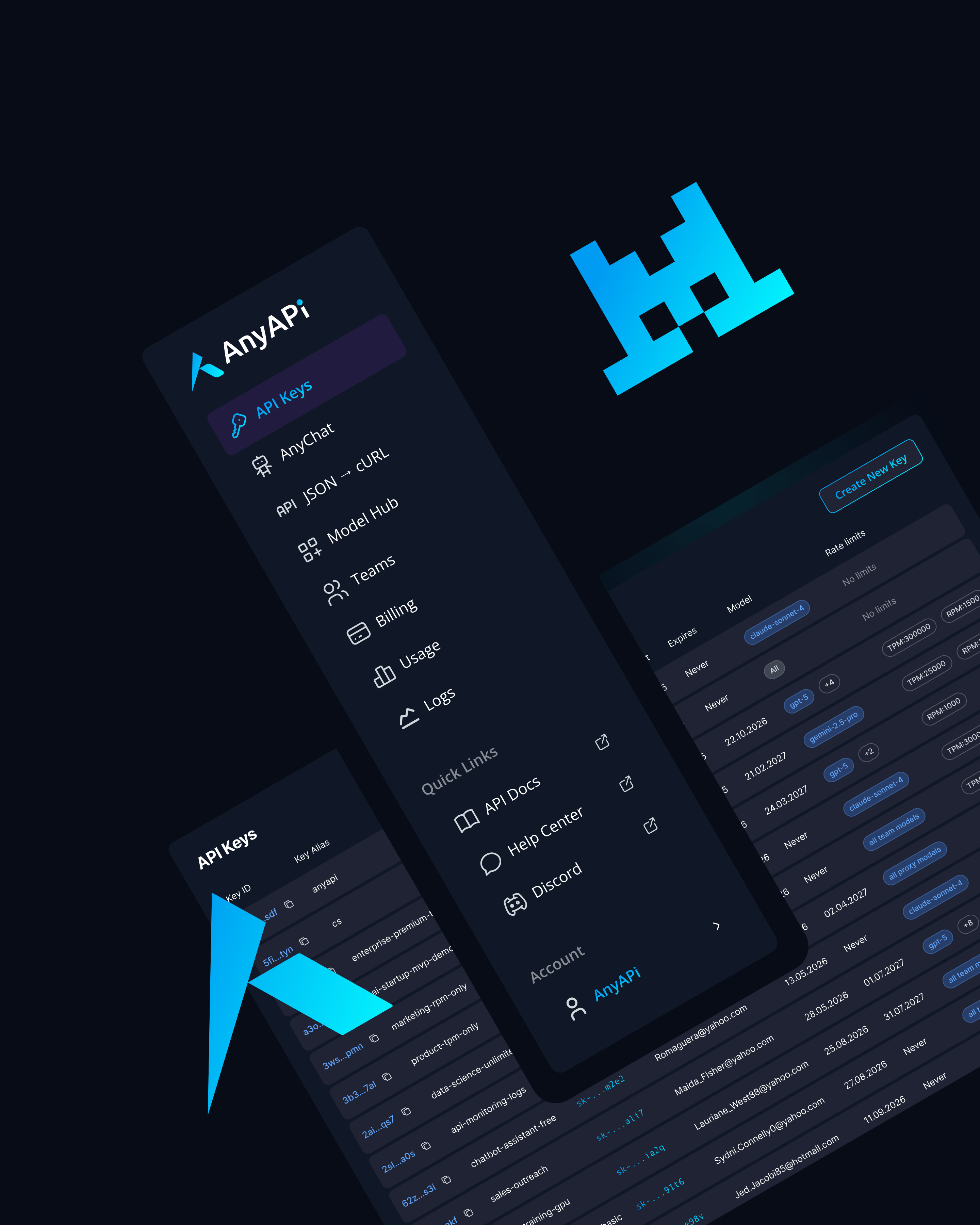

Why Use Mistral Nemo via AnyAPI.ai

Using Mistral Nemo through AnyAPI.ai amplifies its capabilities with several enhancements:

- Unified API Across Multiple Models: Seamlessly integrate Mistral Nemo alongside other models with a single, robust API framework.

- One-Click Onboarding, No Vendor Lock-In: Experience hassle-free onboarding with a no-lock-in policy, maximizing flexibility and control.

- Usage-Based Billing: Optimize costs with a billing system that scales with your usage, ideal for dynamic and growing applications.

- Developer Tools and Production-Grade Infrastructure: Benefit from a comprehensive suite of developer tools and infrastructure that supports large-scale AI deployment.
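
To illustrate what a unified API means in practice, the sketch below builds request payloads in which only the model name changes between models. The payload follows the widely used chat-completions convention; whether AnyAPI.ai uses exactly this shape is an assumption, and the model identifiers `mistral-nemo` and `another-model` are hypothetical placeholders. No network call is made.

```python
import json
from typing import Any, Dict


def build_request(
    model: str,
    prompt: str,
    temperature: float = 0.3,
    max_tokens: int = 256,
) -> Dict[str, Any]:
    """Build a chat-style request body (assumed format, not a documented one)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


# Hypothetical model identifiers; check the provider's model list for real ones.
payloads = [
    build_request(name, "Summarize our refund policy in one sentence.")
    for name in ("mistral-nemo", "another-model")
]

# Serialized body as it might be sent in an HTTP POST.
body = json.dumps(payloads[0])
```

Because the rest of the payload is identical, switching models becomes a one-line configuration change rather than a new integration.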

Compared to platforms like OpenRouter and AIMLAPI, AnyAPI.ai emphasizes provisioning, unified access, and integrated support and analytics, which makes it a strong choice for scalable LLM deployment.

Start Using Mistral Nemo via API Today

Mistral Nemo is well suited to scalable, real-time language model applications. Its broad capabilities and flexible integration options make it a good fit for startups, developers, and ML infrastructure teams.

Integrate Mistral Nemo via AnyAPI.ai and start building today. Sign up, get your API key, and launch in minutes.