Seamlessly Integrated Large Language Model API: Enhancing Your Productivity in Real-Time Applications

Qwen Plus 0728 (thinking) is a recent large language model in the Qwen family from Alibaba Cloud, aimed at developers and businesses that want to incorporate AI into their workflows smoothly. It combines strong capabilities with flexibility, which makes it a suitable option for many industries. The model targets mid-tier to high-end production workloads and is well suited to real-time applications and generative AI systems that need high accuracy and efficiency.

Key Features of Qwen Plus 0728 (thinking)

Low Latency and High Throughput

Qwen Plus 0728 (thinking) offers low latency, which enables real-time interactions and smooth user experiences. This matters most for applications like chatbots and customer support systems.

Extended Context Window

The model's large context window lets developers pass extensive context in a single request, which supports more coherent and contextually aware outputs.
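Even with a large context window, long conversations eventually need trimming. The sketch below shows one common approach: keep the system message and as many of the most recent messages as fit a token budget. The function names, the message shape, and the four-characters-per-token estimate are all assumptions for illustration; the real limit and tokenizer depend on the model and are not stated here.

```python
from typing import Dict, List

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def estimate_tokens(text: str) -> int:
    """Rough heuristic (assumption): about 4 characters per token."""
    return max(1, len(text) // 4)


def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep a leading system message plus the newest messages that fit the budget."""
    if not messages:
        return []
    # Preserve the system message when it is the first entry.
    head = messages[:1] if messages[0]["role"] == "system" else []
    tail = messages[len(head):]
    used = sum(estimate_tokens(m["content"]) for m in head)

    kept: List[Message] = []
    # Walk backwards so the most recent turns are kept first.
    for message in reversed(tail):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return head + list(reversed(kept))
```

Calling `trim_history(history, budget)` before each request keeps the prompt within whatever budget you choose; for production use, replace the heuristic with the provider's actual token counts.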

Advanced Reasoning and Alignment

The model offers strong reasoning abilities and follows user instructions closely, so responses are both logical and relevant to the request.

Multilingual Support

Supporting an array of languages, Qwen Plus 0728 (thinking) is ideal for global deployments and applications that require bilingual or multilingual capabilities.

Scalable and Flexible Deployment

Whether you deploy in the cloud, on-premises, or in a hybrid environment, Qwen Plus 0728 (thinking) offers the flexibility and scalability to fit a range of architectures, which improves the developer experience.

Use Cases for Qwen Plus 0728 (thinking)

Chatbots for SaaS and Customer Support

Harness the power of Qwen Plus 0728 (thinking) to develop intelligent chatbots that deliver accurate, real-time responses, vastly improving customer interaction and satisfaction.

Code Generation for IDEs and AI Development Tools

Enhance your development workflow with automated code generation capabilities, providing developers with rapid solutions to complex coding challenges.

Document Summarization for Legal Tech and Research

Accelerate legal research and analysis with the ability of Qwen Plus 0728 (thinking) to summarize lengthy documents, making it a powerful tool for professionals who need quick insights.
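Documents that exceed what you want to send in one request are often split into chunks, summarized piece by piece, and then combined. The sketch below covers only the splitting step and groups paragraphs up to a character limit. The function name and the character-based limit are assumptions for illustration, not part of any documented workflow for this model.

```python
from typing import List


def chunk_paragraphs(text: str, max_chars: int) -> List[str]:
    """Group paragraphs into chunks of at most max_chars characters where possible.

    A single paragraph longer than max_chars becomes its own oversized chunk.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: List[str] = []
    current: List[str] = []
    size = 0
    for paragraph in paragraphs:
        # Account for the blank-line separator when the chunk already has content.
        added = len(paragraph) + (2 if current else 0)
        if current and size + added > max_chars:
            chunks.append("\n\n".join(current))
            current, size = [], 0
            added = len(paragraph)
        current.append(paragraph)
        size += added
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Each chunk can then be sent to the model with a summarization prompt, and the partial summaries merged in a final request.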

Workflow Automation for Internal Operations and CRM

Automate repetitive tasks and streamline processes within your CRM and internal operations, leading to increased productivity and reduced operational costs.

Knowledge Base Search for Enterprise Data and Onboarding

Improve onboarding experiences and enterprise data searches with a model that swiftly pulls relevant information from vast datasets.

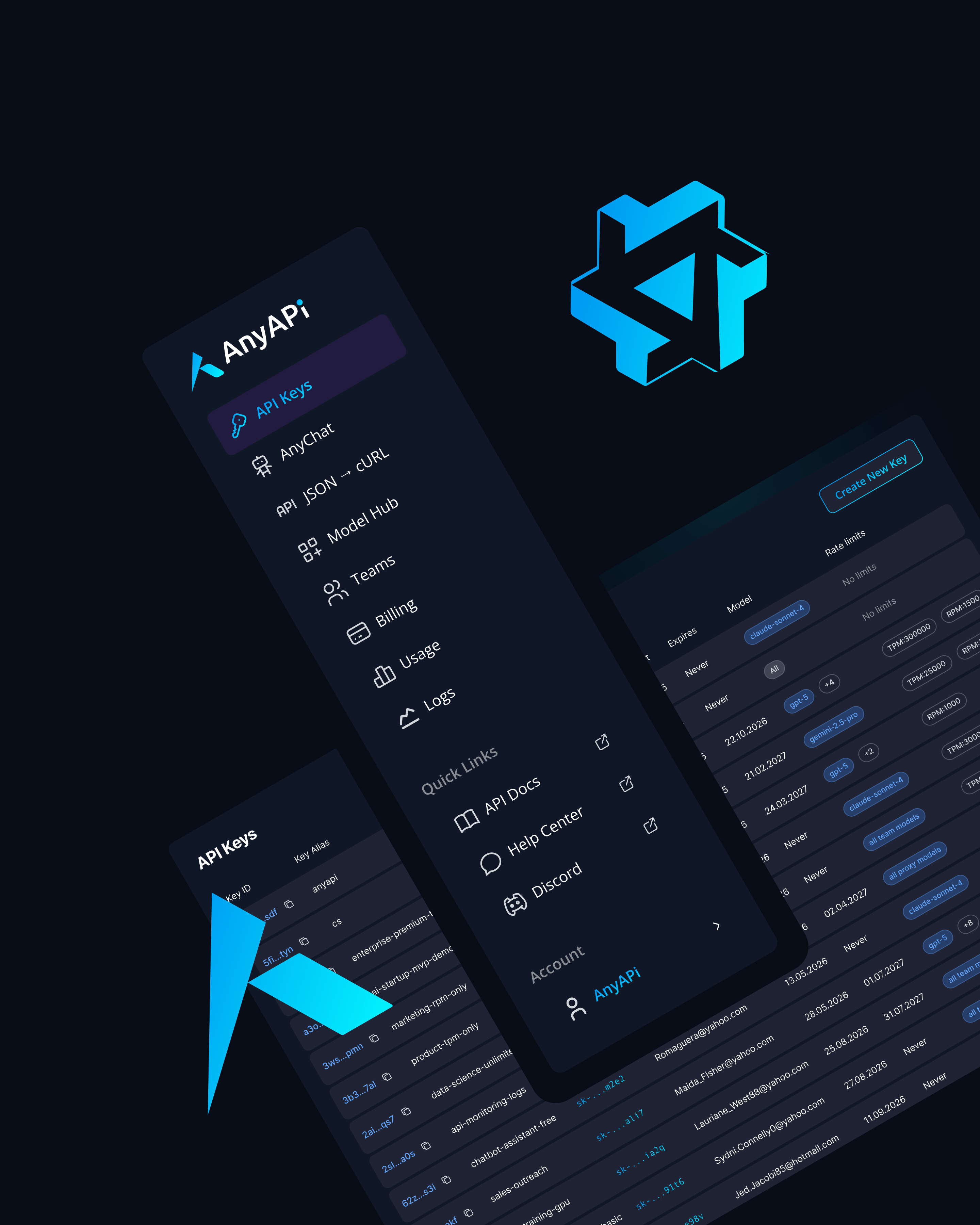

Why Use Qwen Plus 0728 (thinking) via AnyAPI.ai

Integrating Qwen Plus 0728 (thinking) through AnyAPI.ai gives developers and businesses a single API for working with many models, without being tied to one vendor. With one-click onboarding, usage-based billing, and strong developer tools, AnyAPI.ai provides a reliable infrastructure that can scale. Compared with platforms like OpenRouter and AIMLAPI, it emphasizes unified access and detailed analytics to help you improve your AI usage.
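With a single API across models, switching models usually comes down to changing one field in the request. The sketch below builds a request body in the widely used chat-completions message format. That format, the function name, and the model identifiers are assumptions and placeholders; check the AnyAPI.ai documentation for the actual endpoint, schema, and model names.

```python
import json
from typing import Any, Dict, List


def build_chat_request(model: str, user_prompt: str, system_prompt: str = "") -> Dict[str, Any]:
    """Build a chat-style request body; only the model field changes between models."""
    messages: List[Dict[str, str]] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return {"model": model, "messages": messages}


# Placeholder identifiers, not confirmed model names.
for model_id in ("placeholder-qwen-plus-thinking", "placeholder-other-model"):
    payload = build_chat_request(model_id, "Summarize our refund policy in two sentences.")
    print(json.dumps(payload))
```

Keeping the payload builder separate from the HTTP call makes it easy to compare models on the same prompt before committing to one.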

Start Using Qwen Plus 0728 (thinking) via API Today

Qwen Plus 0728 (thinking) is a valuable addition to any developer’s toolkit. It offers exceptional efficiency and scalability for AI-driven projects. You can integrate Qwen Plus 0728 (thinking) through AnyAPI.ai and start building today.

Sign up to receive your API key and launch in minutes, so you can speed up development and stay ahead in the competitive AI landscape.