Lightweight, Aligned Open-Source LLM for Real-Time API Integration

Llama 3 8B Instruct is Meta’s compact instruction-tuned model from the Llama 3 family, designed for real-time generation, code support, and efficient language understanding. At 8 billion parameters, it is responsive and follows instructions well, while remaining open-weight and deployable in private or cloud environments.

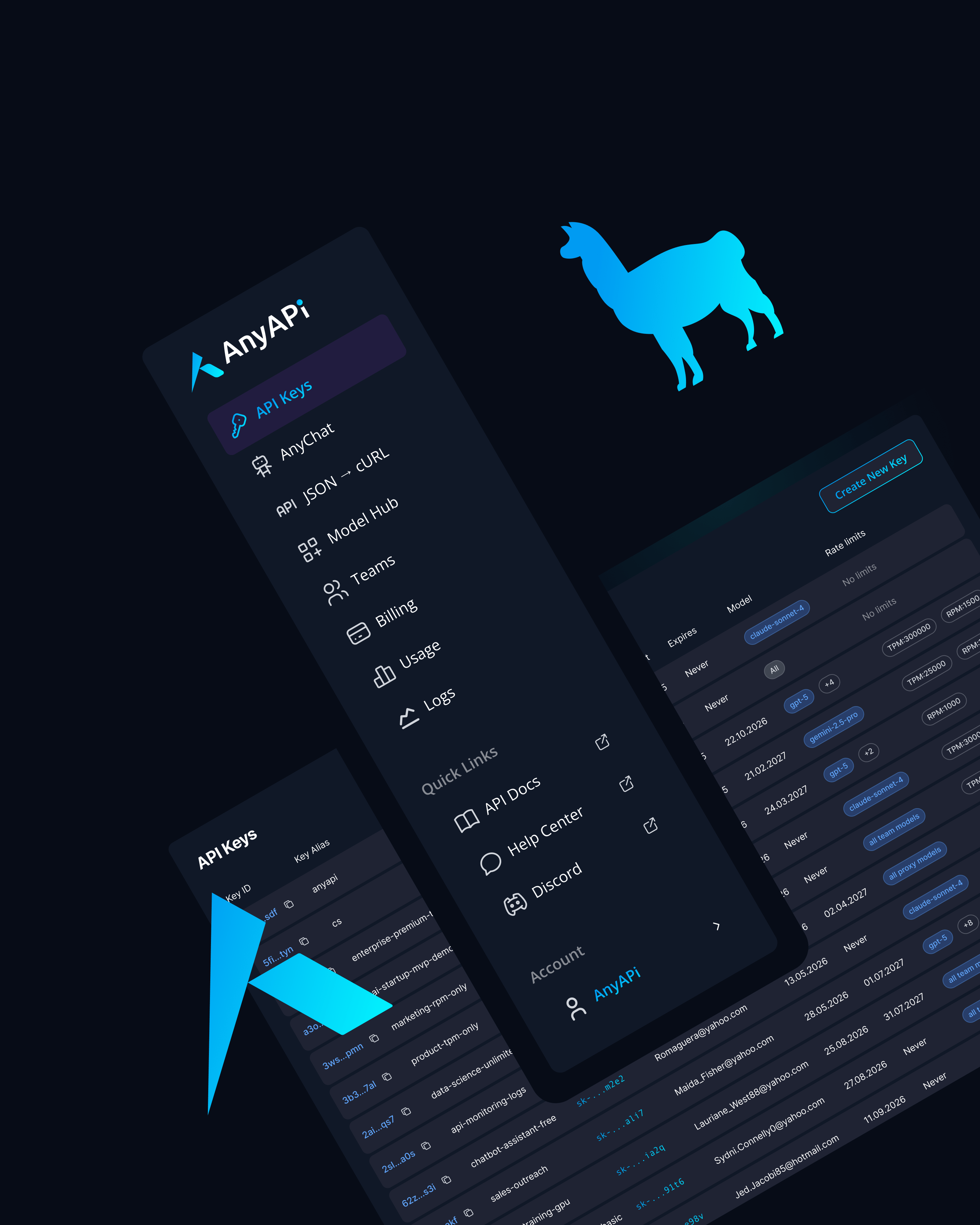

Perfect for cost-sensitive applications, edge deployments, and interactive AI agents, Llama 3 8B Instruct is available for use via API on AnyAPI.ai or for self-hosted deployment.

Key Features of Llama 3 8B Instruct

8 Billion Parameters

This lightweight LLM is optimized for fast inference and memory efficiency, with competitive instruction-following performance for its size.
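To make the memory-efficiency point concrete, the weight footprint can be estimated with simple arithmetic: parameter count times bytes per parameter. The sketch below assumes the rounded figure of 8 billion parameters and covers weights only; the KV cache, activations, and runtime overhead come on top and depend on the serving stack.

```python
# Rough weight-only memory estimate.
# Ignores KV cache, activations, and runtime overhead.
PARAMS = 8_000_000_000  # rounded "8 billion parameters" figure

# Storage cost per parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: int, precision: str) -> float:
    """Return approximate weight storage in gigabytes (10^9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: ~{weight_memory_gb(PARAMS, precision):.0f} GB")
```

Under these assumptions the weights need roughly 16 GB at 16-bit precision and about 4 GB at 4-bit, which is why quantization matters for smaller devices.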

Instruction-Tuned for Utility

Llama 3 8B Instruct has been fine-tuned to reliably follow commands and generate accurate, structured, and safe outputs across everyday tasks.
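Instruction following depends on sending the model its expected chat format. Hosted chat endpoints usually apply this template for you; when self-hosting with raw prompts, a formatter along these lines may be needed. This is a minimal sketch of the Llama 3 Instruct prompt layout as published by Meta; the function name `format_llama3_prompt` is illustrative, not part of any library.

```python
from typing import Dict, List


def format_llama3_prompt(messages: List[Dict[str, str]]) -> str:
    """Render chat messages into the Llama 3 Instruct prompt layout.

    Each message is a dict with "role" (system, user, or assistant)
    and "content". The result ends with an open assistant header so
    the model generates the next assistant turn.
    """
    parts = ["<|begin_of_text|>"]
    for message in messages:
        parts.append(
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    # Open the assistant turn for generation.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = format_llama3_prompt([
        {"role": "system", "content": "You are a concise helpdesk assistant."},
        {"role": "user", "content": "How do I reset my password?"},
    ])
    print(prompt)
```

Generation should stop when the model emits `<|eot_id|>`, so that token is typically configured as a stop token in the inference server.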

Open-Weight and Fully Customizable

The weights can be deployed under the Meta Llama 3 Community License in on-premise, air-gapped, or commercial environments, with no dependency on a closed vendor.

Efficient Multilingual Output

Handles tasks in 20+ languages, including English, Spanish, French, German, and Arabic, and generalizes well for content creation and chat. English is the strongest; Meta notes that non-English performance is not expected to match it.

Strong Code Assistance for Lightweight Use

Generates code in multiple programming languages, including Python, JavaScript, and HTML, which suits dev tools, snippets, and small IDE assistants.

Use Cases for Llama 3 8B Instruct

Chatbots and Conversational Interfaces

Deploy fast, responsive AI chat agents that can handle instructions, summaries, Q&A, and helpdesk prompts in real time.

Mobile and Edge AI Deployment

Run Llama 3 8B, typically with quantized weights, in constrained environments such as mobile apps, edge devices, or local servers where performance per watt matters.

Coding Helpers in Dev Environments

Embed the model in lightweight IDE plugins or web-based tools to generate boilerplate code, comments, and debugging help.

Content Generation for SaaS Apps

Use for blog intro drafting, email templates, summaries, and meta text across marketing, CMS, and internal tools.

Multilingual Utility Bots

Provide real-time multilingual AI support on global-facing platforms, with aligned, low-latency outputs.

Why Use Llama 3 8B Instruct via AnyAPI.ai

Managed API for Open-Source LLMs

Use Llama 3 8B without running your own servers—access via a production-ready endpoint through AnyAPI.ai.
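As an illustration of what calling a managed endpoint might look like, here is a minimal sketch that builds a chat request using only the Python standard library. It assumes an OpenAI-style `/chat/completions` route with bearer-token authentication, which is common among hosted LLM APIs but is an assumption here. `BASE_URL` and `MODEL_ID` are placeholders, not confirmed AnyAPI.ai values; check the provider's documentation for the real ones.

```python
import json
import urllib.request

# Hypothetical values: replace with those from the provider's documentation.
BASE_URL = "https://api.example.com/v1"  # placeholder, not a real endpoint
MODEL_ID = "llama-3-8b-instruct"         # placeholder model identifier


def build_chat_request(
    prompt: str, api_key: str, max_tokens: int = 256
) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single-turn chat."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(
        "Summarize this ticket in one sentence.", api_key="YOUR_API_KEY"
    )
    print(req.get_method(), req.full_url)
    # To send it: urllib.request.urlopen(req), then json.load() the response.
```

Separating request construction from sending keeps the sketch testable without network access and makes it easy to swap in a different HTTP client later.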

Unified API with Proprietary Models

Benchmark or combine Llama with GPT, Claude, and Gemini models using one SDK and simplified billing.
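A side-by-side comparison can be as simple as sending one prompt to several model identifiers and timing each reply. The sketch below is not the AnyAPI.ai SDK; it takes a `send(model_id, prompt)` callable that you supply, so the same loop works with whatever client you use. The model identifiers shown are placeholders.

```python
import time
from typing import Callable, Dict, List


def compare_models(
    prompt: str,
    model_ids: List[str],
    send: Callable[[str, str], str],
) -> Dict[str, Dict[str, object]]:
    """Send the same prompt to each model and record reply and wall time."""
    results: Dict[str, Dict[str, object]] = {}
    for model_id in model_ids:
        start = time.perf_counter()
        reply = send(model_id, prompt)
        elapsed = time.perf_counter() - start
        results[model_id] = {"reply": reply, "seconds": elapsed}
    return results


if __name__ == "__main__":
    # Stand-in for a real API client; echoes the prompt back.
    def fake_send(model_id: str, prompt: str) -> str:
        return f"[{model_id}] echo: {prompt}"

    models = ["llama-3-8b-instruct", "another-model"]  # placeholder IDs
    for model_id, result in compare_models("Hello", models, fake_send).items():
        print(model_id, f"{result['seconds']:.6f}s", result["reply"])
```

Wall-clock time for a single call is a rough signal only; for a fair benchmark, repeat each request several times and compare medians.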

No Lock-In, Full Control

Maintain the freedom to switch between hosted and self-hosted models without vendor constraints.

Cost-Effective Inference

Low token costs and low latency make Llama 3 8B ideal for experimentation, testing, and large-scale deployment.

Stronger DevOps Tools Than OpenRouter

AnyAPI.ai includes logs, analytics, usage metrics, and scalable provisioning beyond what most open LLM endpoints provide.

Use Llama 3 8B Instruct for Fast, Aligned AI at the Edge

Llama 3 8B Instruct brings together open access, speed, and instruction-following reliability—ideal for fast, flexible AI deployments.

Access Llama 3 8B Instruct via AnyAPI.ai or deploy it yourself with full model control.

Sign up now and start building AI features in minutes.