Explore DeepSeek V3.1: The State-of-the-Art AI Language Model

DeepSeek V3.1 is a large language model developed by DeepSeek and available through AnyAPI.ai. It is positioned as a versatile, mid-tier option for real-time applications and generative AI systems, sitting between entry-level and flagship models and offering a balance of performance and versatility. Because it is accessed through an API, DeepSeek V3.1 is practical for production use, letting developers build responsive applications for workflow automation, customer support, and enterprise data management.

Key Features of DeepSeek V3.1

Low Latency Performance

DeepSeek V3.1 is designed for real-time responses, with low latency that keeps user interactions smooth.

Extended Context Size

A long context window (128K tokens, according to DeepSeek's release notes) lets the model handle extended conversations and complex queries that draw on large amounts of input text.

Alignment and Safety

Safe and ethical interaction is a stated priority. DeepSeek V3.1 is trained with alignment techniques intended to reduce biased outputs and keep results reliable.

Superior Multilingual Support

The model supports many languages, so a single deployment can serve a global, multilingual user base without additional translation tools.

Coding Proficiency

DeepSeek V3.1 is strong at code generation and can assist developers working in a range of Integrated Development Environments (IDEs).

Use Cases for DeepSeek V3.1

Chatbots for SaaS and Customer Support

DeepSeek V3.1 can handle a wide range of customer queries, which helps shorten wait times and improve resolution rates in SaaS products and customer service.
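A support chatbot resends the conversation on every turn, so older turns eventually have to be dropped to stay within the context window. The sketch below keeps the system prompt and discards the oldest turns once a rough token budget is exceeded. The `ChatHistory` class and the 4-characters-per-token estimate are illustrative assumptions, not part of any DeepSeek or AnyAPI.ai SDK; a production system would count tokens with the model's own tokenizer.

```python
from dataclasses import dataclass, field


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English text.
    return max(1, len(text) // 4)


@dataclass
class ChatHistory:
    """Hypothetical helper that keeps a chat transcript within a token budget."""
    system_prompt: str
    max_tokens: int
    turns: list = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})
        self._trim()

    def _total_tokens(self) -> int:
        return estimate_tokens(self.system_prompt) + sum(
            estimate_tokens(t["content"]) for t in self.turns
        )

    def _trim(self) -> None:
        # Drop the oldest turns first, but always keep the most recent one.
        while len(self.turns) > 1 and self._total_tokens() > self.max_tokens:
            self.turns.pop(0)

    def messages(self) -> list:
        # OpenAI-style message list: system prompt first, then the retained turns.
        return [{"role": "system", "content": self.system_prompt}] + list(self.turns)
```

Trimming from the oldest end is the simplest policy; summarizing dropped turns into the system prompt is a common refinement.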

Code Generation for IDEs and AI Development Tools

Automated code generation can streamline development workflows and raise productivity for software engineers and developers.
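An IDE integration usually needs to pull the generated code out of a chat-style reply. The sketch below assumes the model wraps code in Markdown fenced blocks, which is common for chat models but not guaranteed; `extract_code_blocks` is a hypothetical helper, not a documented API.

```python
import re

# Build the fence marker programmatically so this snippet can itself live in Markdown.
FENCE = "`" * 3
CODE_BLOCK = re.compile(
    re.escape(FENCE) + r"([\w+-]*)[ \t]*\n(.*?)" + re.escape(FENCE), re.DOTALL
)


def extract_code_blocks(reply: str) -> list[tuple[str, str]]:
    """Return (language, code) pairs for every fenced block in a model reply."""
    # Blocks without a language tag are labeled "text".
    return [(lang or "text", body.rstrip("\n")) for lang, body in CODE_BLOCK.findall(reply)]
```

The extracted code can then be inserted into the editor or run through a linter before it is shown to the developer.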

Document Summarization in Legal Tech and Research

The model can automate the summarization of lengthy documents, which makes it useful for legal practitioners and researchers who work with large volumes of text.
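Documents longer than the context window are often summarized in two passes: summarize each chunk, then summarize the partial summaries. The sketch below shows the chunking step, splitting on paragraph boundaries under a character budget. The 8000-character default is an arbitrary assumption (characters, not tokens), and the prompt wording is illustrative only.

```python
def chunk_text(text: str, max_chars: int = 8000) -> list[str]:
    """Split text into chunks of at most max_chars, preferring paragraph boundaries."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        # Hard-split any single paragraph that is longer than the budget.
        pieces = [para[i:i + max_chars] for i in range(0, len(para), max_chars)]
        for piece in pieces:
            candidate = f"{current}\n\n{piece}" if current else piece
            if len(candidate) <= max_chars:
                current = candidate
            else:
                chunks.append(current)
                current = piece
    if current:
        chunks.append(current)
    return chunks


def summary_prompts(text: str, max_chars: int = 8000) -> list[str]:
    # "Map" step: one summarization prompt per chunk; the partial summaries
    # would then be combined in a final "reduce" request.
    return [f"Summarize the following section:\n\n{chunk}" for chunk in chunk_text(text, max_chars)]
```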

Workflow Automation for Internal Operations

DeepSeek V3.1 can streamline internal operations by automating repetitive tasks, improving CRM systems, and generating insightful product reports.

Enterprise Knowledge Base Search

By returning accurate, timely results from enterprise data, DeepSeek V3.1 makes knowledge retrieval more efficient and helps new employees get up to speed during onboarding.
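One common way to use a language model for knowledge base search is retrieval-augmented prompting: find the most relevant documents first, then ask the model to answer only from them. The sketch below ranks documents by simple word overlap as a stand-in for a real embedding or full-text search index; all function names are hypothetical, and the retrieval method is a deliberate simplification.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def top_k_documents(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query (a stand-in for embedding search)."""
    q = Counter(tokenize(query))
    scores = {
        doc_id: sum(min(q[w], c) for w, c in Counter(tokenize(body)).items() if w in q)
        for doc_id, body in docs.items()
    }
    ranked = sorted(scores, key=lambda d: scores[d], reverse=True)
    # Skip documents that share no words with the query.
    return [d for d in ranked[:k] if scores[d] > 0]


def build_grounded_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    # Put the retrieved passages in the prompt and ask the model to answer only from them.
    context = "\n\n".join(f"[{d}] {docs[d]}" for d in top_k_documents(query, docs, k))
    return (
        "Answer using only the sources below and cite their IDs.\n\n"
        f"{context}\n\nQuestion: {query}"
    )
```

Asking the model to cite source IDs makes answers easier to verify, which matters for onboarding material that new employees will rely on.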

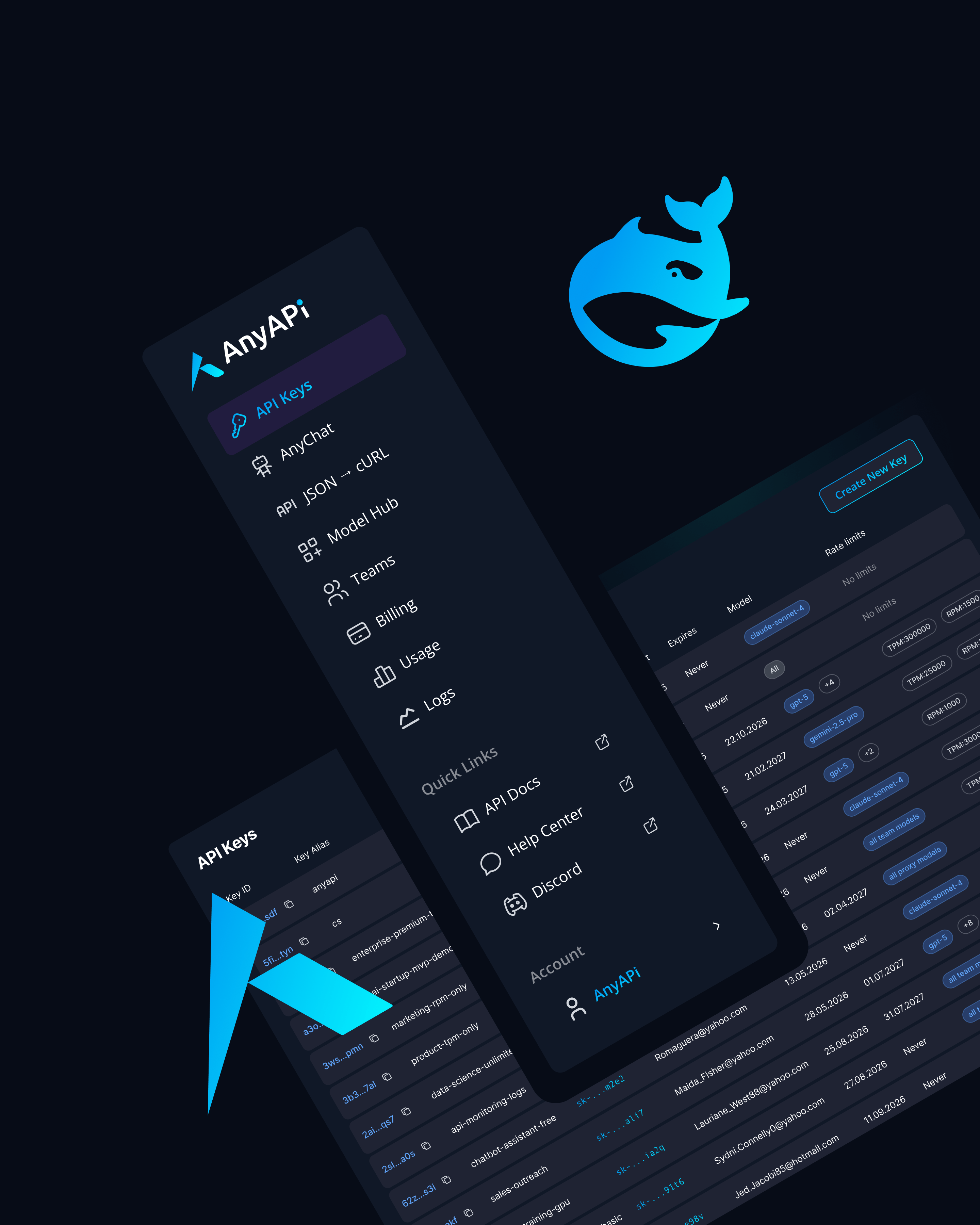

Why Use DeepSeek V3.1 via AnyAPI.ai

Accessing DeepSeek V3.1 through AnyAPI.ai offers several benefits:

- Unified API Access: Connect to DeepSeek and other leading LLMs through a single platform.

- One-Click Onboarding: Setup is simple, and there is no vendor lock-in, so usage stays flexible.

- Usage-Based Billing: Pay only for what you use, which keeps costs efficient.

- Superior Developer Tools: Access robust tools and production-grade infrastructure for scalable applications.

- Comprehensive Support and Analytics: Built-in usage analytics and support, which AnyAPI.ai presents as a differentiator from platforms such as OpenRouter and AIMLAPI.
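Usage-based billing for LLM APIs is typically metered per token, often with separate rates for input and output tokens. The sketch below estimates a monthly bill under that assumption. The `Pricing` values are hypothetical placeholders, not AnyAPI.ai's or DeepSeek's actual prices; check the provider's current price list before budgeting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    # Hypothetical prices in USD per one million tokens.
    input_per_million: float
    output_per_million: float


def estimate_cost(input_tokens: int, output_tokens: int, pricing: Pricing) -> float:
    """Usage-based cost: each token type is billed at its own per-million rate."""
    return (
        input_tokens / 1_000_000 * pricing.input_per_million
        + output_tokens / 1_000_000 * pricing.output_per_million
    )


def monthly_cost(requests_per_day: int, avg_input: int, avg_output: int,
                 pricing: Pricing, days: int = 30) -> float:
    # Scale average per-request token counts up to a month of traffic.
    return estimate_cost(requests_per_day * avg_input * days,
                         requests_per_day * avg_output * days, pricing)
```

For example, with placeholder rates of $0.50 (input) and $1.50 (output) per million tokens, 1,000 requests a day averaging 500 input and 200 output tokens comes to 15M input and 6M output tokens over 30 days, or $7.50 + $9.00 = $16.50.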

Start Using DeepSeek V3.1 via API Today

Unlock the full potential of DeepSeek V3.1 for your business or development projects. Whether you're a startup, developer, or part of an ML team, integrating DeepSeek V3.1 via AnyAPI.ai can transform how you deploy AI solutions.

Sign up, get your API key, and send your first request within minutes, then scale up as your application grows. Integrate DeepSeek V3.1 via AnyAPI.ai and start building today.
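A first request might look like the sketch below. It assumes an OpenAI-style chat completions endpoint, which many multi-model gateways expose; the base URL, the `deepseek-v3.1` model ID, and the `ANYAPI_BASE_URL` / `ANYAPI_API_KEY` environment variable names are hypothetical placeholders, so take the real values from AnyAPI.ai's documentation.

```python
import json
import os
import urllib.request

# Placeholders (assumptions): replace with the base URL and model ID from AnyAPI.ai's docs.
BASE_URL = os.environ.get("ANYAPI_BASE_URL", "https://api.example.com/v1")
MODEL_ID = "deepseek-v3.1"  # hypothetical model identifier


def build_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    # OpenAI-style chat completions payload (assumed request format).
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def complete(prompt: str, model: str = MODEL_ID) -> str:
    # Reads the key from the hypothetical ANYAPI_API_KEY environment variable.
    req = build_request(prompt, os.environ["ANYAPI_API_KEY"], model)
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Because the model is chosen by the `model` string, pointing the same code at another model on a unified platform would, under the OpenAI-compatibility assumption, be a one-parameter change.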