Ultra-Light Open-Weight LLM for Fast, Low-Cost API and Local Use

Mistral Tiny is a projected ultra-lightweight open-weight model in the Mistral AI family, targeting the small end of the LLM size/performance tradeoff. Although it has not been officially released as a standalone product, the name "Mistral Tiny" has become shorthand for compact, fast-inference models intended for edge devices, automation scripts, and real-time chat interfaces.

Expected to fall in the 1B–3B parameter range, Mistral Tiny would provide cost-effective, open-access inference for high-frequency, low-latency workloads.

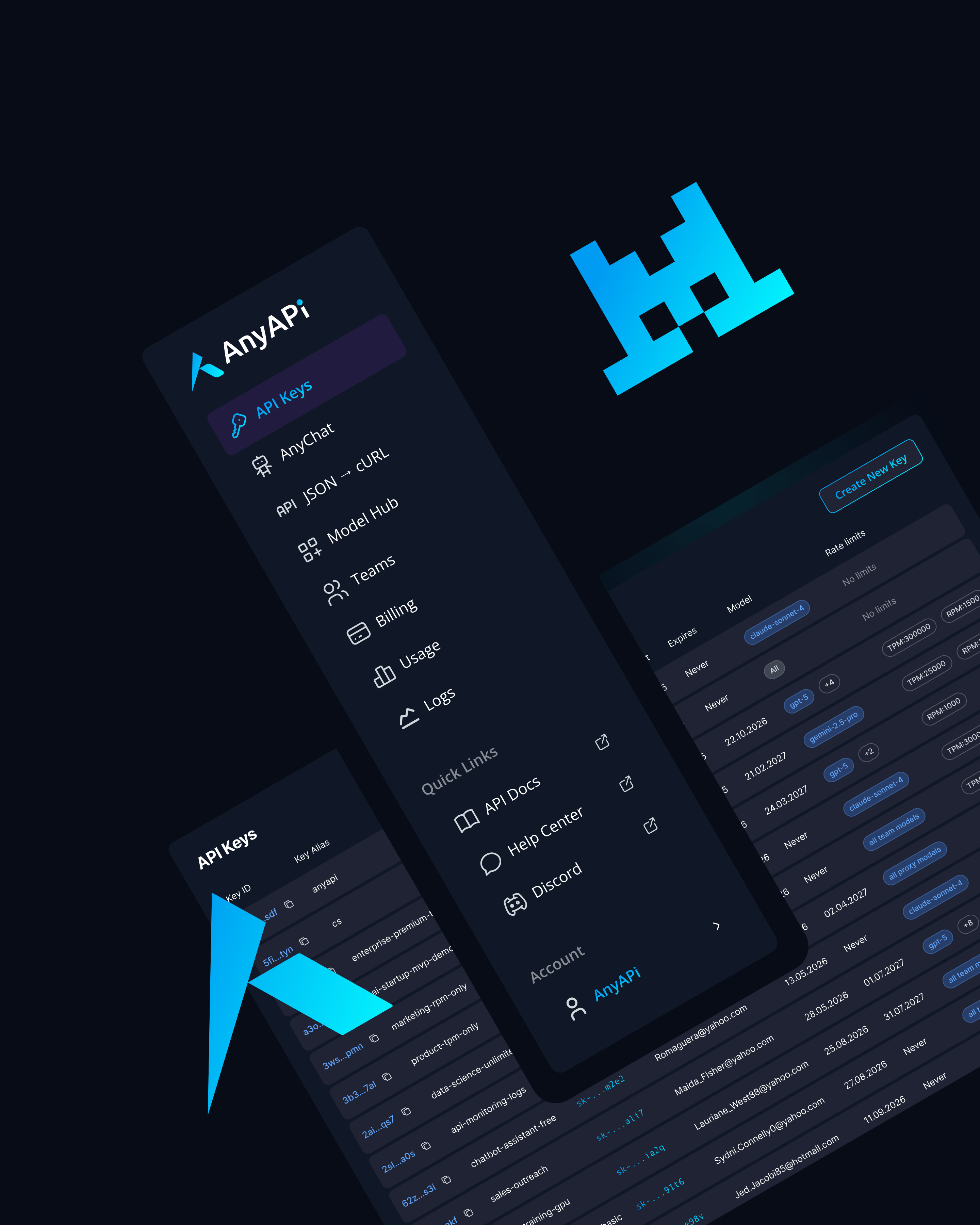

Through AnyAPI.ai, developers can explore early-access and substitute models (e.g., distilled versions or small-variant models) for Mistral Tiny-level performance via a unified API.
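As a rough illustration of what calling a small model through a unified API could look like, the sketch below builds (but does not send) a chat-style HTTP request. The endpoint URL, model identifier, payload fields, and the `ANYAPI_API_KEY` environment variable are all placeholders assumed for illustration. They are not taken from AnyAPI.ai documentation, so check the provider's API reference for the real values.

```python
import json
import os
import urllib.request

# Hypothetical values: replace with the endpoint and model id from the provider's docs.
API_URL = "https://api.example.com/v1/chat/completions"
MODEL_ID = "tiny-model-placeholder"


def build_chat_request(prompt: str, max_tokens: int = 64) -> urllib.request.Request:
    """Build a POST request for a short, single-turn prompt (assumed payload shape)."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,  # keep completions short for low-latency use
    }
    headers = {
        "Content-Type": "application/json",
        # The API key is read from the environment; the variable name is an assumption.
        "Authorization": f"Bearer {os.environ.get('ANYAPI_API_KEY', '')}",
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


request = build_chat_request("Tag this message as 'billing' or 'support': My invoice is wrong.")
print(request.get_method(), request.full_url)
```

Once real values are in place, the request could be sent with `urllib.request.urlopen(request)`. Keeping request construction separate from sending makes the payload easy to inspect and test offline.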

Key Features of Mistral Tiny

Sub-4B Parameter Footprint

Designed for environments with limited compute, making it a fit for mobile, browser, and embedded contexts.
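To make the footprint concrete, the following back-of-the-envelope sketch estimates the memory needed just to hold model weights at the 1B and 3B parameter counts mentioned earlier. The precisions shown (fp16, int8, int4) are common choices assumed for illustration, not a published specification. The estimate ignores activations, the KV cache, and runtime overhead, so real usage will be higher.

```python
# Approximate bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the weight storage size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for params in (1e9, 3e9):
    for precision in BYTES_PER_PARAM:
        size = weight_memory_gb(params, precision)
        print(f"{params / 1e9:.0f}B @ {precision}: {size:.1f} GB")
```

Under these assumptions, a 3B-parameter model needs about 6 GB for weights at fp16 but only about 1.5 GB at 4-bit, which is why quantization matters for mobile and embedded targets.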

Low-Latency Inference (~100–200 ms)

A target response time of roughly 100–200 ms makes it suitable for synchronous UIs and chained API calls.
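A latency target like this is best checked against measurements rather than assumed. The sketch below times repeated calls to any callable and reports the median and 95th-percentile latency against a 200 ms budget. The helper names and the nearest-rank percentile method are illustrative choices, and `call` stands in for whatever function actually invokes the model.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def measure_latency_ms(call: Callable[[], object], runs: int = 20) -> List[float]:
    """Time `call` repeatedly and return each duration in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples: List[float], budget_ms: float = 200.0) -> Dict[str, object]:
    """Report median and nearest-rank p95, and whether p95 fits the budget."""
    ordered = sorted(samples)
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    p95 = ordered[p95_index]
    return {
        "median_ms": statistics.median(ordered),
        "p95_ms": p95,
        "within_budget": p95 <= budget_ms,
    }


# Example with fixed sample values instead of a live model call.
print(summarize([100.0, 120.0, 150.0, 180.0, 250.0]))
```

Checking the 95th percentile rather than the average is a deliberate choice: a synchronous UI feels slow when the occasional request stalls, even if the typical one is fast.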

Open License Expected (Apache 2.0 or MIT)

Like Mistral 7B and Mixtral 8x7B, which were released under Apache 2.0, it is expected to be permissively licensed for modification and redistribution.

Efficient Token Usage

Optimized for short prompts, config generation, system automation, and form-based UIs.

Multilingual Output (Basic)

Supports basic generation and classification in English and select global languages.

Use Cases for Mistral Tiny

CLI Agents and Developer Tools

Embed language interfaces in command-line workflows, code tools, and build scripts.

IoT and Edge-Based AI

Deploy on-device in smart appliances, AR glasses, or vehicle systems where low power is critical.

Browser-Based LLM Interfaces

Power extensions, plugins, and JS-based AI modules in browser environments.

Email and Template Automation

Draft short replies, generate form text, or fill structured templates programmatically.
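One way to structure this kind of automation is to let the model write only the short free-text body while a fixed template supplies everything else. The sketch below assumes a `generate` callable that takes a prompt and returns text. That callable, the template wording, and the function names are hypothetical, and a stub stands in for the model so the example runs offline.

```python
from string import Template
from typing import Callable

# Fixed structure; only $body comes from the model.
REPLY_TEMPLATE = Template("Hi $name,\n\n$body\n\nBest,\n$sender")


def build_prompt(incoming: str, max_words: int = 40) -> str:
    """Create a short instruction prompt for drafting a reply body."""
    return (
        f"Write a reply of at most {max_words} words to the email below. "
        f"Return only the reply body.\n\n{incoming}"
    )


def draft_reply(incoming: str, name: str, sender: str,
                generate: Callable[[str], str]) -> str:
    """Ask the model for a body, then slot it into the fixed template."""
    body = generate(build_prompt(incoming)).strip()
    return REPLY_TEMPLATE.substitute(name=name, body=body, sender=sender)


def stub_generate(prompt: str) -> str:
    """Stand-in for a real model call, used here so the sketch runs offline."""
    return " Thanks, Tuesday works for me. "


print(draft_reply("Can we meet Tuesday?", "Ana", "Sam", stub_generate))
```

Keeping greetings and sign-offs in the template keeps prompts and completions short, which suits a small model tuned for brief outputs.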

Low-Resource LLM Experiments

Use Mistral Tiny for prototyping instruction tuning or quantization techniques.

Why Use Mistral Tiny via AnyAPI.ai

Unified API Access to Small and Large Models

Explore Mistral Tiny alternatives alongside full-size Mistral, GPT, Claude, and more.

Pay-As-You-Go Access to Small Inference

Perfect for high-volume, low-cost LLM workloads like customer messaging or text tagging.

Preview Access to Distilled or Quantized Models

Try early-stage versions of Tiny-like models or distill your own with full control.

Zero Setup for Edge-Use Emulation

Run Tiny-level models without setting up a local GPU or embedded hardware.

Faster and More Flexible Than Hugging Face Hosted UI

Access production-ready endpoints with full observability, logs, and latency metrics.

Use Lightweight LLMs at Scale with Mistral Tiny

Mistral Tiny promises to bring ultra-fast, ultra-efficient language AI to edge, automation, and everyday tools.

Start exploring Tiny-tier models today with AnyAPI.ai: no setup, instant scale, full flexibility.