Discover the Power and Efficiency of Mistral Small 3.2 24B: Scalable Real-Time LLM API Access

Why Mistral Small 3.2 24B Matters

Mistral Small 3.2 24B is a 24-billion-parameter large language model (LLM) developed by Mistral AI. It is designed to balance efficiency with performance, which makes it well suited to real-time applications and generative AI systems. Positioned as a mid-tier option, it gives developers a capable model to integrate into their tools and applications without the overhead of a flagship model.

For production use, Mistral Small 3.2 24B aims to deliver dependable output quality without the compute and cost demands of flagship models, making it a practical choice for startups and teams that need scalable, reliable AI development.

Key Features of Mistral Small 3.2 24B

Latency and Real-Time Readiness

Mistral Small 3.2 24B offers low latency, making it a good fit for applications that must respond in real time. Tools such as customer support chatbots and SaaS features can return answers quickly enough to feel interactive.
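Latency is easiest to reason about when it is measured. The following is a minimal sketch, not an official client: it times how long a stream of text chunks takes to produce its first chunk and to finish. The `fake_stream` generator is a hypothetical stand-in for a streaming API response, and the helper names are assumptions made for illustration.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple


def measure_stream_latency(
    stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[float, float, str]:
    """Return (seconds to first chunk, total seconds, full text) for a chunk stream."""
    start = clock()
    first_chunk_at = None
    parts = []
    for chunk in stream:
        if first_chunk_at is None:
            # Record the moment the first chunk arrives.
            first_chunk_at = clock()
        parts.append(chunk)
    end = clock()
    if first_chunk_at is None:
        # Empty stream: treat the end of the stream as the first-chunk time.
        first_chunk_at = end
    return first_chunk_at - start, end - start, "".join(parts)


def fake_stream() -> Iterator[str]:
    """Hypothetical stand-in for a streaming model response."""
    for piece in ("Hello", ", ", "world"):
        time.sleep(0.01)  # simulate network and generation delay
        yield piece


if __name__ == "__main__":
    ttfc, total, text = measure_stream_latency(fake_stream())
    print(f"first chunk after {ttfc:.3f}s, total {total:.3f}s, text={text!r}")
```

In a real integration, the iterable passed to `measure_stream_latency` would be whatever chunk iterator your HTTP client exposes for streamed responses.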

Context Size and Flexibility

With a large context window, Mistral Small 3.2 24B can keep track of long conversations and lengthy inputs, which helps in applications such as workflow automation and legal document summarization.
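Even a large context window is finite, so long conversations eventually need trimming. The sketch below shows one common approach under stated assumptions: it keeps system messages and then the most recent turns that fit a token budget. The four-characters-per-token estimate is a rough heuristic rather than the model's tokenizer, and `max_tokens` is a value you would choose yourself; no specific limit is implied here.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    """Rough estimate of about 4 characters per token (an assumption, not a real tokenizer)."""
    return max(1, (len(text) + 3) // 4)


def trim_history(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep system messages plus the most recent messages that fit in max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)

    kept: List[Message] = []
    # Walk backwards from the newest message and stop at the first one that does not fit.
    for message in reversed(others):
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))


if __name__ == "__main__":
    history = [
        {"role": "system", "content": "Be brief."},
        {"role": "user", "content": "a" * 40},
        {"role": "assistant", "content": "b" * 40},
        {"role": "user", "content": "c" * 20},
    ]
    for message in trim_history(history, max_tokens=20):
        print(message["role"], len(message["content"]))
```

Dropping the oldest turns first is a design choice that favors recent context; summarizing older turns instead is a reasonable alternative when early details matter.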

Alignment and Safety

Mistral Small 3.2 24B includes refined alignment and safety behavior, which matters for developers deploying it in sensitive environments such as customer interactions and enterprise data management.

Advanced Language Support

This model supports multiple languages, making it a versatile tool for internationally focused applications. Whether your operations are centered in North America, Europe, or elsewhere, Mistral Small 3.2 24B can be a reliable asset.

Developer Experience

With flexible deployment options, Mistral Small 3.2 24B fits into existing development workflows and helps developers stay productive in their projects.

Use Cases for Mistral Small 3.2 24B

Chatbots for SaaS and Customer Support

Mistral Small 3.2 24B's quick processing and language support make it well suited to chatbots that handle customer interactions, improving customer satisfaction and operational efficiency.

Code Generation for IDEs and AI Dev Tools

Improve coding efficiency and reduce manual errors with this model's code generation capabilities, which can support IDEs and developer tools aimed at programming productivity.

Document Summarization in Legal Tech and Research

Simplify complex document review. Mistral Small 3.2 24B's language capabilities support document summarization, streamlining legal tech operations and academic research.

Workflow Automation for Internal Ops and CRM

Automate routine processes with Mistral Small 3.2 24B, reducing manual work in internal operations and customer relationship management (CRM) tasks.

Knowledge Base Search for Enterprise Data and Onboarding

Use Mistral Small 3.2 24B to power knowledge base search, giving teams faster access to enterprise data and shortening onboarding.

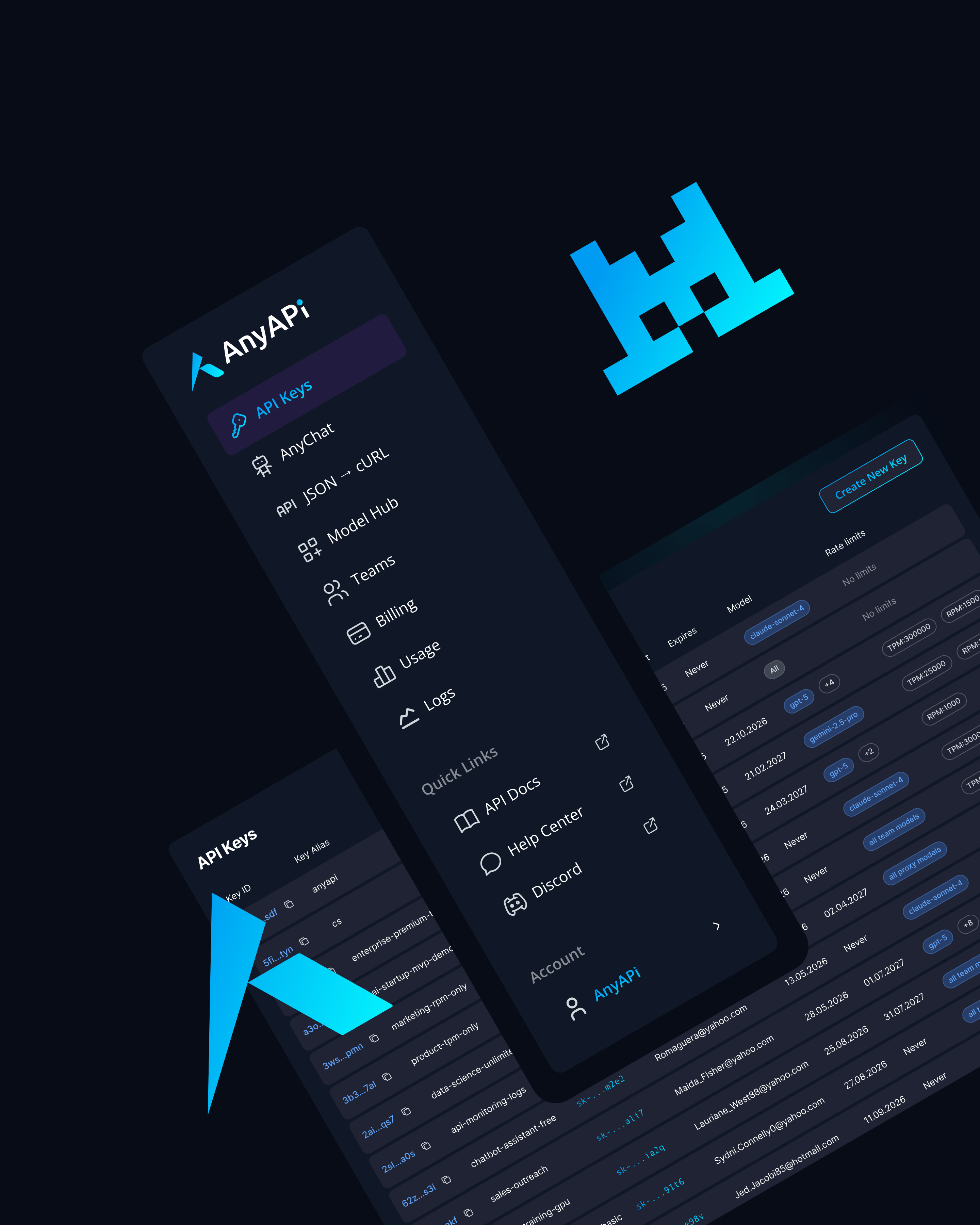

Why Use Mistral Small 3.2 24B via AnyAPI.ai

Accessing Mistral Small 3.2 24B through AnyAPI.ai gives you a unified API layer across multiple models. This simplifies integration, shortens onboarding, and reduces vendor lock-in. With usage-based billing, developers pay for what they use and can manage costs as they scale their AI solutions.
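One practical consequence of a unified API layer is that switching models can come down to changing a single string. The sketch below illustrates the idea only. It assumes an OpenAI-style chat request body, which this page does not confirm, and both model identifiers are hypothetical placeholders; check the AnyAPI.ai documentation for the real request schema and model names.

```python
import json
from typing import Any, Dict, List

# Hypothetical model identifiers, used only for illustration.
PRIMARY_MODEL = "mistral-small-3.2-24b"
FALLBACK_MODEL = "another-provider-model"


def build_chat_request(
    model: str,
    messages: List[Dict[str, str]],
    **options: Any,
) -> Dict[str, Any]:
    """Build a chat-style request body; only `model` differs between models."""
    if not messages:
        raise ValueError("messages must not be empty")
    body: Dict[str, Any] = {"model": model, "messages": messages}
    body.update(options)  # e.g. temperature, passed through unchanged
    return body


if __name__ == "__main__":
    messages = [
        {"role": "user", "content": "Summarize our refund policy in two sentences."}
    ]
    for model in (PRIMARY_MODEL, FALLBACK_MODEL):
        print(json.dumps(build_chat_request(model, messages, temperature=0.2), indent=2))
```

Keeping the model name as a parameter means a fallback or an A/B comparison needs no changes to the rest of the calling code.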

AnyAPI.ai adds provisioning, unified support, and usage analytics on top of model access, and positions these production-grade tools and infrastructure as its advantage over platforms such as OpenRouter and AIMLAPI.

Start Using Mistral Small 3.2 24B via API Today

Start building with Mistral Small 3.2 24B through AnyAPI.ai. The model offers the scalability and performance that startups and teams need for their AI applications. Sign up, get your API key, and launch your integration in minutes.
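Once you have an API key, a first integration might look like the sketch below. Everything specific in it is an assumption for illustration: the endpoint URL is a placeholder, the `ANYAPI_API_KEY` environment variable name and the model identifier are hypothetical, and bearer-token authentication is assumed rather than confirmed. The code only prepares the request and does not send it.

```python
import json
import os
import urllib.request

# Placeholder values: replace with the endpoint and model name from the provider's docs.
API_URL = "https://api.example.invalid/v1/chat/completions"
MODEL_ID = "mistral-small-3.2-24b"


def prepare_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON POST request."""
    if not api_key:
        raise ValueError("an API key is required")
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("ANYAPI_API_KEY", "")  # hypothetical variable name
    if key:
        request = prepare_request("Hello!", key)
        print(request.get_method(), request.full_url)
        # To send it, call urllib.request.urlopen(request) after setting the real API_URL.
    else:
        print("Set ANYAPI_API_KEY to prepare a request.")
```

Reading the key from the environment keeps it out of source control, which is worth doing from the first prototype onward.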