High-Performance Open LLM for Advanced Reasoning and Code Generation

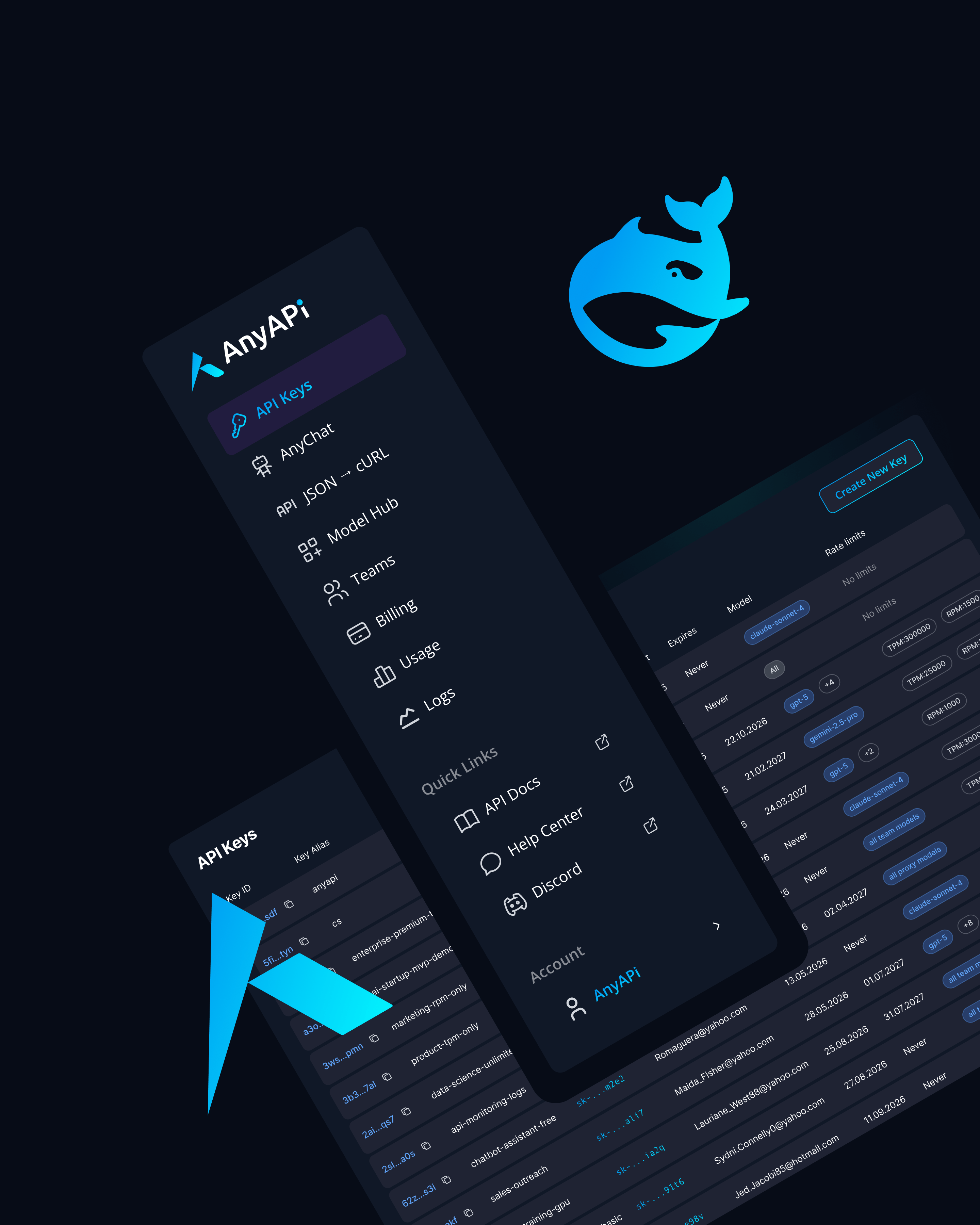

DeepSeek V3.1 Terminus is the next step in DeepSeek’s open-weight LLM series. It is designed for fast inference, stronger reasoning, and structured code generation. This release builds on DeepSeek V3 and improves logic handling, context retention, and multilingual understanding. You can access DeepSeek V3.1 Terminus through AnyAPI.ai.

For developers, researchers, and AI infrastructure teams, it combines strong performance with flexible access through a single unified API.

Key Features of DeepSeek V3.1 Terminus

Optimized Transformer Architecture

Improved token routing and attention scaling deliver deeper reasoning and faster inference.

Extended Context Window (64k Tokens)

Handles longer dialogues, documents, and multi-step tasks for enterprise workloads.
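
Before sending a long document, it can help to check whether it is likely to fit in the context window. The following is a minimal sketch, not the model's real tokenizer: it assumes roughly 4 characters per token (a common rule of thumb for English text) and reads "64k" as 64,000 tokens. The names `estimate_tokens`, `fits_in_context`, and `split_into_chunks` are illustrative only.

```python
# Rough check that a prompt fits a 64k-token context window.
# Assumption: ~4 characters per token; the model's actual tokenizer
# will produce different counts, especially for code or non-English text.

CONTEXT_WINDOW_TOKENS = 64_000  # "64k" read as 64,000 (assumption)
CHARS_PER_TOKEN = 4             # heuristic, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_for_output: int = 2_000) -> bool:
    """Return True if the prompt plus a reserved output budget fits the window."""
    return estimate_tokens(prompt) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


def split_into_chunks(text: str, max_tokens: int) -> list[str]:
    """Split text into pieces that each stay within max_tokens (estimated)."""
    max_chars = max_tokens * CHARS_PER_TOKEN
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]
```

If a document does not fit, `split_into_chunks` gives a simple character-based fallback; splitting on paragraph or section boundaries would usually preserve meaning better.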

Open-Weight Availability

Released under a permissive license, allowing private deployments and fine-tuning.

Advanced Code and Logic Reasoning

Performs structured problem-solving, code generation, and mathematical reasoning with high accuracy.

Multilingual and Domain Adaptable

Strong performance across English, Chinese, and other major global languages.

Use Cases for DeepSeek V3.1 Terminus

Enterprise AI Assistants

Power knowledge assistants, internal copilots, and data summarization tools.

Software Development and DevOps

Generate, refactor, and document code across multiple languages and frameworks.

Data Analysis and Research

Summarize technical documents and reason over structured datasets.

Automation and Agent Systems

Integrate into workflow engines, AI agents, and API orchestration layers.

Education and Training Platforms

Deliver multilingual tutoring and code-learning support systems.

Why Use DeepSeek V3.1 Terminus via AnyAPI.ai

Unified Access to Open and Proprietary Models

Run DeepSeek alongside GPT, Claude, Gemini, and Mistral through one API key.
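
To illustrate what "one API key" could look like in practice, here is a hedged sketch that builds the same request for different models by changing only the model name. Everything specific in it is an assumption: the endpoint URL and model identifiers are placeholders, and the payload follows the widely used chat-completions shape, which may not match the provider's actual schema. Consult the AnyAPI.ai documentation for the real values.

```python
import json
import urllib.request

# Placeholder values (assumptions): replace with the provider's documented ones.
BASE_URL = "https://api.example.invalid/v1/chat/completions"
MODEL_IDS = {
    "deepseek": "deepseek-v3.1-terminus",  # hypothetical identifier
    "other": "another-model-id",           # hypothetical identifier
}


def build_chat_request(api_key: str, model: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a single user message."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        BASE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Same key, same code path; only the model name changes.
requests_by_model = {
    name: build_chat_request("YOUR_API_KEY", model_id, "Explain binary search.")
    for name, model_id in MODEL_IDS.items()
}
```

The sketch stops short of sending anything because the URL is a placeholder; with real values, `urllib.request.urlopen(request)` would perform the call.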

No GPU Setup Required

Query instantly without hosting or managing local infrastructure.

Usage-Based Billing

Pay-as-you-go access with transparent pricing.

Enterprise Reliability and Monitoring

Includes observability tools, rate limits, and production-grade uptime.
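
Because rate limits apply, client code typically needs to retry politely when a limit is hit. The sketch below shows one common approach, exponential backoff with jitter. It is an illustration under assumptions: `RateLimitError` is a hypothetical exception standing in for whatever your HTTP client raises on a rate-limit response, and the delay values are arbitrary defaults rather than provider recommendations.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical error raised by the caller's client on a rate-limit response."""


def backoff_delays(max_retries: int, base: float = 0.5, cap: float = 8.0) -> list[float]:
    """Exponential delays: base, 2*base, 4*base, ... capped at `cap` seconds."""
    return [min(cap, base * (2 ** attempt)) for attempt in range(max_retries)]


def call_with_retry(send, max_retries: int = 4, sleep=time.sleep):
    """Call send(); on RateLimitError wait and retry, up to max_retries + 1 attempts."""
    for delay in backoff_delays(max_retries):
        try:
            return send()
        except RateLimitError:
            # Jitter spreads out retries coming from many clients at once.
            sleep(delay * random.uniform(0.5, 1.0))
    return send()  # final attempt; any error propagates to the caller
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting.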

Better Provisioning Than Hugging Face Inference or OpenRouter

Optimized for high throughput, low latency, and stable response quality.

Scale Open Reasoning AI with DeepSeek V3.1 Terminus

DeepSeek V3.1 Terminus offers strong reasoning, open weights, and scalable performance. It is well suited to production AI assistants, developer tools, and data automation.

You can integrate DeepSeek V3.1 Terminus through AnyAPI.ai. Sign up, get your API key, and deploy enterprise-ready open-weight AI today.