Anthropic’s Fastest Lightweight LLM for Real-Time Applications

Claude 3.5 Haiku (2024-10-22 release) is the latest version of Anthropic’s fast, cost-efficient language model. Built for real-time apps, chatbots, and automation workflows, it pairs low latency with Anthropic’s safety alignment, which makes it a strong fit for startups, SaaS providers, and enterprise tools that need both responsiveness and reliability.

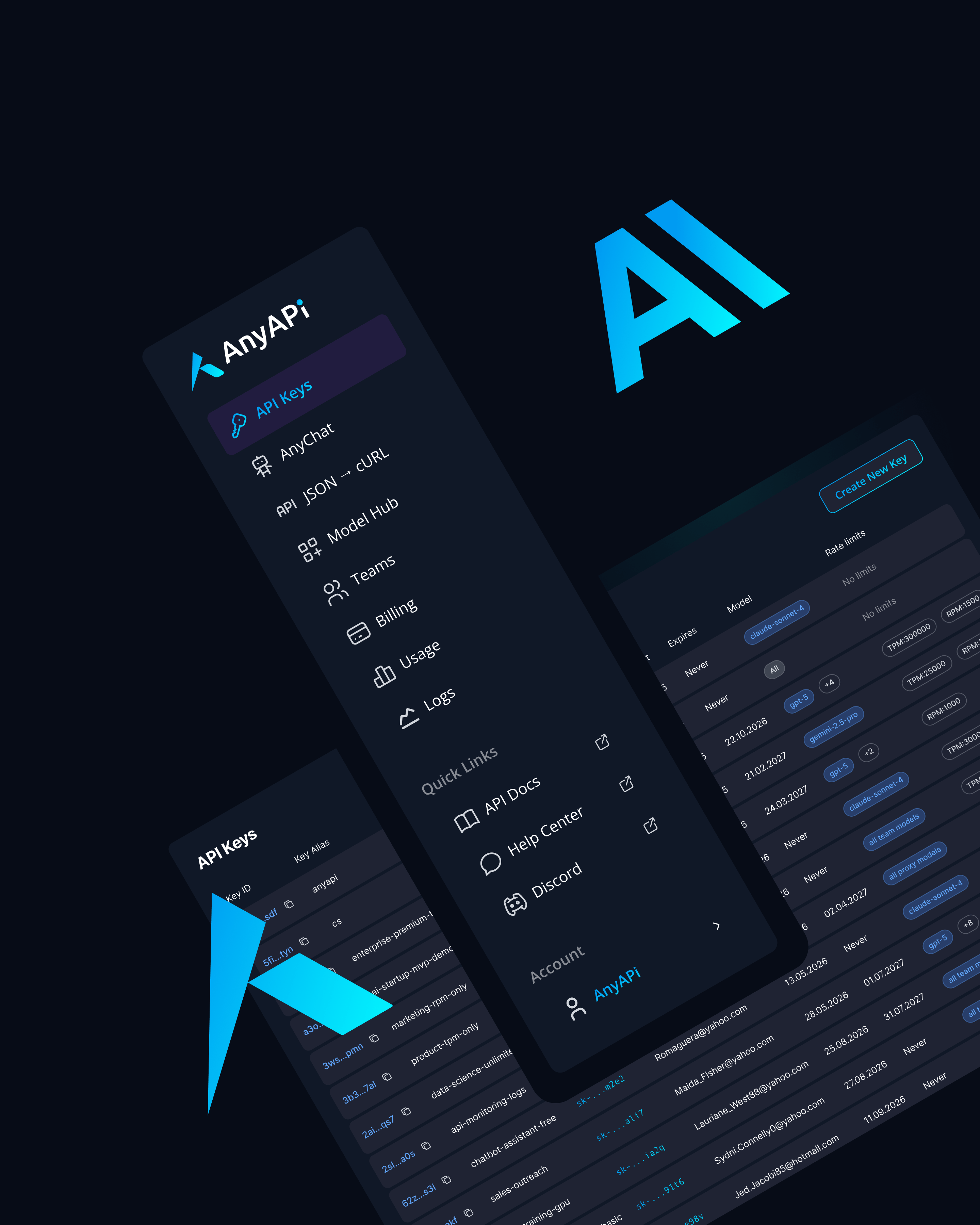

Through AnyAPI.ai, you can run Claude 3.5 Haiku in production without a direct Anthropic account, which speeds up integration and scaling.

Key Features of Claude 3.5 Haiku (2024-10-22)

Ultra-Fast Inference (~150–300ms)

Handles high-volume requests in real time, ideal for mobile apps and live chat systems.

200k Token Context Window

Process large chat histories, technical documentation, or multi-part workflows.
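Even with a 200k-token window, a long-running chat eventually needs older turns dropped so the request fits. A minimal sketch of one common approach (keep the newest messages that fit a token budget) follows. The 4-characters-per-token ratio and the `trim_history` helper are hypothetical illustrations, not part of any AnyAPI.ai or Anthropic SDK; real token counts depend on the model's tokenizer.

```python
from typing import Dict, List

# Rough heuristic (assumption): about 4 characters per token for English text.
# Real counts depend on the model's tokenizer, so treat this as an estimate.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def trim_history(messages: List[Dict[str, str]], max_tokens: int) -> List[Dict[str, str]]:
    """Keep the most recent messages whose estimated total fits in max_tokens.

    Walks the history from newest to oldest, stops at the first message that
    would exceed the budget, and returns the kept messages in original order.
    """
    kept: List[Dict[str, str]] = []
    used = 0
    for message in reversed(messages):
        cost = estimate_tokens(message["content"])
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()
    return kept


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "a" * 40},       # ~10 estimated tokens
        {"role": "assistant", "content": "b" * 40},  # ~10 estimated tokens
        {"role": "user", "content": "c" * 40},       # ~10 estimated tokens
    ]
    print(len(trim_history(history, max_tokens=25)))  # prints 2
```

In practice you would set `max_tokens` below the full window to leave headroom for the model's reply. Some chat APIs also expect the history to begin with a user turn, so a real integration may need an extra check after trimming.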

Lightweight, Cost-Efficient Deployment

Optimized for affordability, enabling frequent, low-cost queries at scale.

Multilingual Support (25+ Languages)

Well-suited for global SaaS platforms and multilingual customer interactions.

Anthropic’s Constitutional AI Alignment

Designed to produce safer outputs with fewer unnecessary refusals, supporting enterprise trust and compliance.

Use Cases for Claude 3.5 Haiku

Customer Support Chatbots

Provide instant, context-aware responses across large knowledge bases.

Internal Workflow Automation

Streamline ticketing, reporting, and CRM updates with natural language automation.

High-Frequency SaaS Applications

Embed Haiku into productivity tools, dashboards, and communication platforms.

Knowledge Base Summarization

Quickly summarize product manuals, compliance docs, or onboarding material.

Multilingual Interfaces

Support users in 25+ languages for customer service and global deployments.

Why Use Claude 3.5 Haiku via AnyAPI.ai

No Anthropic Account Needed

Access Claude models instantly without vendor lock-in.

Unified API Across All Major Models

Integrate Claude, GPT, Gemini, Mistral, and DeepSeek with a single API key.
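The practical benefit of a single key is that switching models becomes a one-field change in the request. The sketch below assumes an OpenAI-style chat-completions JSON format. The endpoint URL is a placeholder, `build_chat_request` is a hypothetical helper, and the model identifiers are illustrative, so check AnyAPI.ai's documentation for the real endpoint, payload shape, and model names.

```python
import json
import urllib.request
from typing import Dict, List

# Placeholder endpoint (assumption): replace with the URL from AnyAPI.ai's docs.
API_URL = "https://example.invalid/v1/chat/completions"


def build_chat_request(api_key: str, model: str,
                       messages: List[Dict[str, str]]) -> urllib.request.Request:
    """Build (but do not send) a POST request in an assumed OpenAI-style format."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",  # the same key for every model
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    messages = [{"role": "user", "content": "Summarize this ticket in one sentence."}]
    # Hypothetical model identifiers: only the "model" field changes per request.
    for model in ("claude-3-5-haiku-20241022", "hypothetical-other-model"):
        req = build_chat_request("YOUR_API_KEY", model, messages)
        print(req.get_method(), json.loads(req.data)["model"])
```

Once the real URL and payload format are confirmed, the request could be sent with `urllib.request.urlopen(req)` or an HTTP client of your choice.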

Usage-Based Billing

Pay only for the tokens you consume, which suits scaling startups and high-traffic apps.
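Because billing is per token, a rough budget is just token counts multiplied by per-token prices. The sketch below projects monthly spend from average request sizes. The helper names are hypothetical and the prices are placeholders, not AnyAPI.ai's actual rates; substitute the current per-model pricing.

```python
def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      input_price_per_mtok: float,
                      output_price_per_mtok: float) -> float:
    """Cost in USD for a workload, given prices per million tokens."""
    return (input_tokens * input_price_per_mtok
            + output_tokens * output_price_per_mtok) / 1_000_000


def monthly_cost_usd(requests_per_day: int, avg_input_tokens: int,
                     avg_output_tokens: int, input_price_per_mtok: float,
                     output_price_per_mtok: float, days: int = 30) -> float:
    """Project monthly spend by scaling average per-request token counts."""
    total_requests = requests_per_day * days
    return estimate_cost_usd(total_requests * avg_input_tokens,
                             total_requests * avg_output_tokens,
                             input_price_per_mtok, output_price_per_mtok)


if __name__ == "__main__":
    # Placeholder prices (hypothetical): substitute the current per-model rates.
    INPUT_PRICE, OUTPUT_PRICE = 1.00, 5.00
    # 10,000 requests/day, 2,000 input and 500 output tokens each, for 30 days.
    print(monthly_cost_usd(10_000, 2_000, 500, INPUT_PRICE, OUTPUT_PRICE))  # prints 1350.0
```

Output tokens are usually priced higher than input tokens, so keeping responses concise tends to matter more for cost than trimming prompts.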

Production-Ready Infrastructure

Low-latency endpoints with observability, logging, and enterprise-grade reliability.

Faster and More Reliable Than OpenRouter or Hugging Face Inference

Better provisioning and scaling for live, real-world deployments.

Use Claude 3.5 Haiku for Fast, Reliable Real-Time AI

Claude 3.5 Haiku (2024-10-22) combines low latency with cost efficiency, making it a strong choice for startups and enterprises deploying AI at scale.

Integrate Claude 3.5 Haiku via AnyAPI.ai: sign up, get your API key, and start building today.