Devstral Medium: A Scalable Mid-Tier AI Language Model for Real-Time Applications

Devstral Medium is a scalable large language model (LLM) created by Mistral AI, built to serve real-time applications and generative AI systems. As a mid-tier model in the Mistral family, it gives teams strong AI capabilities without the resource requirements of top-tier models. That balance of performance and scalability makes it suitable for production use by developers and companies across a range of applications.

Key Features of Devstral Medium

Optimal Latency and Context Size

Devstral Medium offers competitive latency, and the resulting fast response times are vital for real-time applications such as chatbots and live customer support systems. Its large context window lets it handle complex queries and keep track of earlier turns in extended interactions.
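Even with a large context window, long conversations eventually need trimming before they are sent to the model. The snippet below is a minimal sketch of one common approach: keep the most recent messages that fit a token budget. The function names, the budget value, and the whitespace-based token estimate are all illustrative assumptions, not part of Devstral Medium or any AnyAPI.ai SDK; a real integration would use the model's own tokenizer and documented context limit.

```python
from typing import Dict, List

Message = Dict[str, str]


def approx_tokens(text: str) -> int:
    # ASSUMPTION: a crude whitespace word count stands in for a real tokenizer.
    return len(text.split())


def trim_history(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep the newest messages whose combined estimated size fits max_tokens."""
    kept: List[Message] = []
    used = 0
    # Walk from newest to oldest so recent context is preserved first.
    for message in reversed(messages):
        cost = approx_tokens(message["content"])
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

Calling `trim_history(history, max_tokens=...)` before each request keeps the payload within whatever budget you choose, at the cost of dropping the oldest turns first.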

Enhanced Alignment and Safety

Devstral Medium is aligned for safe deployment across varying domains, with the aim of keeping interactions appropriate, accurate, and ethically managed.

Robust Reasoning and Coding Skills

Designed for a diverse range of workloads, this model excels at logical reasoning and programming, making it a valuable asset in code generation and software development scenarios.

Multilingual Support

With extensive language support, Devstral Medium can be deployed in global settings and power multilingual platforms and applications.

Real-Time Readiness and Developer Experience

The model's architecture emphasizes deployment flexibility, so developers can integrate it into existing workflows and infrastructure. This focus on developer experience supports rapid deployment and scaling.

Use Cases for Devstral Medium

Chatbots for SaaS and Customer Support

Devstral Medium enables companies to deploy highly interactive chatbots that understand user queries and respond to them precisely, which suits SaaS platforms and customer service applications.

Code Generation for IDEs and AI Dev Tools

Developers can integrate Devstral Medium into IDEs to provide rapid code suggestions and error corrections, boosting development productivity.

Document Summarization for Legal Tech and Research

The model's ability to succinctly summarize lengthy documents makes it invaluable in legal tech and research settings, where time-efficient processing of information is crucial.

Workflow Automation for Internal Ops and CRM

Devstral Medium's automation capabilities streamline internal operations and customer relationship management, reducing manual processing time and improving data handling efficiency.

Knowledge Base Search for Enterprise Data and Onboarding

This model enhances the search and retrieval of information from extensive knowledge bases, assisting enterprises with data navigation and employee onboarding processes.

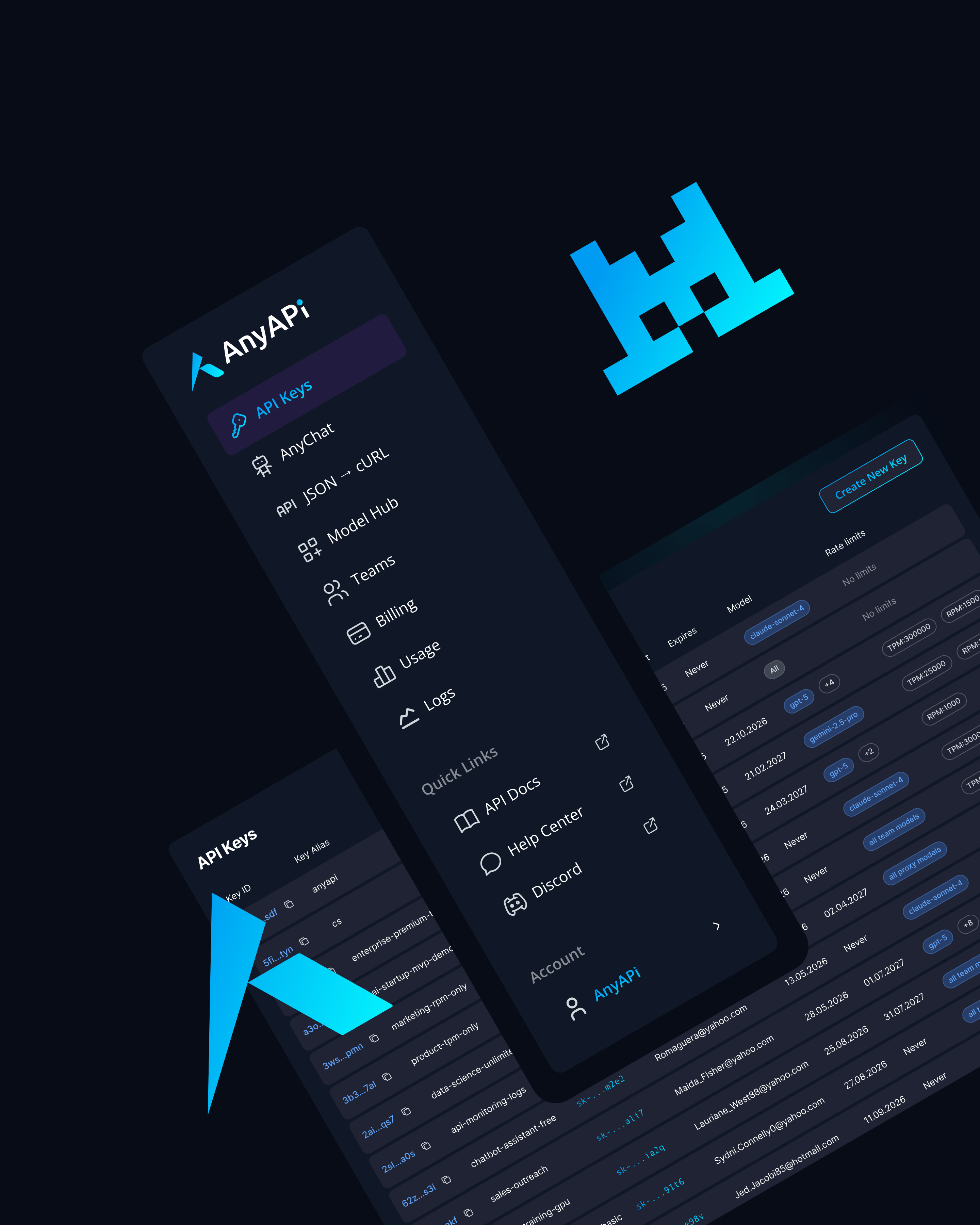

Why Use Devstral Medium via AnyAPI.ai

By accessing Devstral Medium through AnyAPI.ai, developers gain a unified API platform that makes it easier to integrate different language models. The platform offers smooth onboarding without vendor lock-in, usage-based billing that keeps costs in line with application use, and developer tools backed by production-grade infrastructure.

Compared with competitors such as OpenRouter and AIMLAPI, AnyAPI.ai provides better provisioning, analytics, and support, leading to quicker and more reliable deployments.

Start Using Devstral Medium via API Today

Bring Devstral Medium into your AI-driven applications by integrating it through AnyAPI.ai. Whether you're a startup looking to scale your technology or a developer seeking robust tools, the model combines real-time performance with mid-tier resource requirements.

Sign up, get your API key, and launch your next innovative solution in minutes.
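As a rough illustration of what a first request might look like, here is a minimal sketch that assumes an OpenAI-style chat completions endpoint with bearer-token authentication. The endpoint URL, the model identifier `devstral-medium`, the `ANYAPI_API_KEY` environment variable, and the payload shape are all placeholders chosen for this example, not values taken from AnyAPI.ai documentation; check the official API reference for the real ones.

```python
import json
import os
import urllib.request

# ASSUMPTION: placeholder endpoint and model ID, not real AnyAPI.ai values.
API_URL = "https://api.example.invalid/v1/chat/completions"
MODEL_ID = "devstral-medium"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a single user message."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # ASSUMPTION: the key is read from a hypothetical environment variable.
    key = os.environ.get("ANYAPI_API_KEY")
    if key is None:
        raise SystemExit("Set ANYAPI_API_KEY before running this sketch.")
    print(send_chat_request(build_chat_request("Explain list comprehensions.", key)))
```

Separating request construction from sending keeps the API key and payload easy to inspect and test without making a network call.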