Unleash the Power of API-Driven AI with DeepSeek V3.1 Terminus (exacto) for Scalable, Real-Time Success

Introducing DeepSeek V3.1 Terminus (exacto), a state-of-the-art large language model that changes how developers and businesses add AI features to their applications. Developed by DeepSeek as part of its V3 model family, it combines precision with scalable, real-time processing. The model is ready for production use across industries, which makes it especially useful for teams that want to bring generative AI into real-time applications.

The model stands out by balancing strong performance with ease of use. It is a good fit for startups building AI-based products, ML and data infrastructure teams, and no-code or low-code integrators.

Key Features of DeepSeek V3.1 Terminus (exacto)

Latency Optimization

DeepSeek V3.1 Terminus (exacto) is engineered for low-latency responses, which is crucial for developers building applications that require real-time interaction. Fast responses help keep the user experience smooth.
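For real-time interfaces, much of the perceived latency comes down to showing text as it arrives instead of waiting for the full reply. The sketch below assumes AnyAPI.ai exposes an OpenAI-compatible streaming format (Server-Sent Events lines prefixed with `data: ` and ending with `data: [DONE]`); that format and the sample events are assumptions for illustration, not documented behavior of this endpoint.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style SSE lines (assumed format)."""
    for raw in lines:
        line = raw.strip()
        # Ignore blank keep-alive lines, SSE comments, and non-data fields.
        if not line.startswith("data:"):
            continue
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":  # assumed end-of-stream sentinel
            break
        event = json.loads(payload)
        choices = event.get("choices") or [{}]
        # Assumed schema: choices[0].delta.content carries the new text.
        text = choices[0].get("delta", {}).get("content")
        if text:
            yield text


# Made-up stream for illustration; a real client would read these lines
# from the HTTP response body as they arrive.
sample = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    'data: {"choices": [{"delta": {"content": "lo!"}}]}',
    "data: [DONE]",
]

for fragment in iter_stream_text(sample):
    print(fragment, end="", flush=True)  # render each fragment immediately
print()
```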

Extended Context Size

With a large context window, the model handles complex dialogue and long-form content generation without losing coherence, which makes it well suited to detailed problem-solving and content creation.
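Even a large context window has a limit, so chat applications typically trim older turns before each request. A minimal sketch, assuming an OpenAI-style list of `role`/`content` messages; `estimate_tokens` uses a rough four-characters-per-token heuristic rather than the model's real tokenizer, and the budget value is a placeholder.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    # Rough heuristic (about 4 characters per token for English text);
    # a real tokenizer would give exact counts.
    return max(1, len(text) // 4)


def fit_to_budget(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep system messages plus the most recent turns that fit in max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    # Walk backwards so the newest turns are kept first.
    for msg in reversed(turns):
        cost = estimate_tokens(msg["content"])
        if used + cost > max_tokens:
            break
        kept.append(msg)
        used += cost
    # System messages go first, followed by the kept turns in original order.
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "x" * 400},
    {"role": "assistant", "content": "y" * 400},
    {"role": "user", "content": "Summarize our chat."},
]
trimmed = fit_to_budget(history, max_tokens=120)
print([m["role"] for m in trimmed])
```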

Alignment and Safety

Safety is a top priority for DeepSeek V3.1 Terminus (exacto). It is aligned with stringent ethical standards and enhanced safety protocols, making it reliable in diverse use cases while minimizing risk.

Enhanced Reasoning Abilities

This model excels at reasoning and decision-making, allowing developers to build apps with nuanced understanding and contextual insight, from automated customer service to intelligent personal assistants.

Multilingual Support

DeepSeek V3.1 Terminus (exacto) supports a wide range of languages, making it a versatile choice for global, multi-language projects and broadening your application’s reach.

Developer Experience and Deployment Flexibility

Designed with developers in mind, the model offers flexible deployment options, whether in cloud environments or on-premises, for a smooth integration process.

Use Cases for DeepSeek V3.1 Terminus (exacto)

Chatbots for SaaS and Customer Support

Leverage its real-time processing capabilities to develop intuitive chatbots that enhance customer service, whether in SaaS applications or customer support centers, providing instant solutions to user inquiries.
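A support chatbot largely comes down to keeping conversation state and resending it with each request. The sketch below assumes an OpenAI-compatible chat completions request body; the `SupportChat` class and the `MODEL_ID` string are hypothetical, so check AnyAPI.ai's documentation for the actual model identifier and endpoint.

```python
import json
from typing import Any, Dict, List

# Hypothetical model identifier; look up the real one in the AnyAPI.ai catalog.
MODEL_ID = "deepseek-v3.1-terminus-exacto"


class SupportChat:
    """Holds conversation state for an OpenAI-style chat completions API (assumed)."""

    def __init__(self, system_prompt: str) -> None:
        self.messages: List[Dict[str, str]] = [
            {"role": "system", "content": system_prompt}
        ]

    def build_request(self, user_text: str) -> Dict[str, Any]:
        # Record the user's turn and return a JSON-serializable request body.
        self.messages.append({"role": "user", "content": user_text})
        return {"model": MODEL_ID, "messages": list(self.messages), "stream": True}

    def record_reply(self, assistant_text: str) -> None:
        # Keep the model's answer so later requests carry the full context.
        self.messages.append({"role": "assistant", "content": assistant_text})


chat = SupportChat("You are a support agent for a SaaS billing product.")
first = chat.build_request("How do I update my card?")
chat.record_reply("Open Settings > Billing and choose 'Update card'.")
second = chat.build_request("Will I be charged twice?")
print(json.dumps(second, indent=2))
```

Each request copies the message list, so a body that has already been sent is not changed by later turns.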

Code Generation for IDEs and AI Dev Tools

Use DeepSeek V3.1 Terminus (exacto) to power code generation in IDEs, helping developers automate repetitive coding tasks and prototype new solutions quickly to boost productivity.

Document Summarization in Legal Tech and Research

Automate the summarization of large volumes of text, such as legal documents or research papers, making it easier to distill critical insights quickly and accurately, saving valuable time and resources.
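Documents too long for a single request are often summarized with a map-reduce pattern: summarize each chunk, then summarize the partial summaries. This is a minimal sketch of that general technique, not an AnyAPI.ai feature. `summarize` is a hypothetical callable you would wire to the model, and chunk size is measured in characters as a rough proxy for tokens.

```python
from typing import Callable, List


def chunk_text(text: str, max_chars: int) -> List[str]:
    """Split text into consecutive pieces of at most max_chars characters."""
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


def map_reduce_summary(
    text: str, summarize: Callable[[str], str], max_chars: int = 8000
) -> str:
    """Summarize each chunk (map), then summarize the combined partials (reduce)."""
    partials = [summarize(chunk) for chunk in chunk_text(text, max_chars)]
    if not partials:
        return ""
    if len(partials) == 1:
        return partials[0]
    # Single reduce pass; assumes the joined partial summaries fit in one request.
    return summarize("\n".join(partials))


# Stand-in for a model call: reports the length of what it was asked to summarize.
def fake_summarize(chunk: str) -> str:
    return f"<{len(chunk)} chars>"


print(map_reduce_summary("a" * 25, fake_summarize, max_chars=10))
```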

Workflow Automation for Internal Ops and CRM

Use this model to streamline and automate workflows in CRM systems or internal operations, improving efficiency and reducing overhead through intelligent task management.
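For workflow automation, a common pattern is to ask the model to reply with JSON and validate that reply before touching the CRM. The action names, fields, and `CrmTask` type below are hypothetical placeholders for your own schema.

```python
import json
from dataclasses import dataclass

# Hypothetical action names for an internal CRM integration.
ALLOWED_ACTIONS = {"create_ticket", "update_contact", "schedule_followup"}


@dataclass
class CrmTask:
    action: str
    contact_id: str
    note: str


def parse_task(model_output: str) -> CrmTask:
    """Validate JSON produced by the model before any CRM call is made."""
    data = json.loads(model_output)  # raises json.JSONDecodeError on malformed output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if data.get("action") not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {data.get('action')!r}")
    for field in ("contact_id", "note"):
        if not isinstance(data.get(field), str):
            raise ValueError(f"missing or non-string field: {field}")
    return CrmTask(data["action"], data["contact_id"], data["note"])


reply = '{"action": "schedule_followup", "contact_id": "C-102", "note": "Call next Tuesday"}'
print(parse_task(reply))
```

Because `json.JSONDecodeError` is a subclass of `ValueError`, a caller can catch `ValueError` alone to reject any malformed or out-of-policy reply.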

Knowledge Base Search for Enterprise Data and Onboarding

Deploy it as a smart search assistant within knowledge bases, making data retrieval intuitive and fast, which is essential for onboarding and training within enterprises.
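A knowledge-base assistant usually retrieves relevant documents first and passes them to the model as context. The sketch below uses plain keyword overlap as a simple stand-in for the embedding-based search a production system would more likely use. The document IDs, stop-word list, and prompt wording are illustrative assumptions.

```python
import re
from collections import Counter
from typing import Dict, List

# Small illustrative stop-word list; real systems use larger lists or embeddings.
STOP_WORDS = {"a", "an", "the", "how", "do", "i", "to", "is", "in", "of", "and"}


def tokenize(text: str) -> List[str]:
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOP_WORDS]


def top_k_docs(query: str, docs: Dict[str, str], k: int = 2) -> List[str]:
    """Rank documents by occurrences of query terms; drop zero-score documents."""
    q_terms = set(tokenize(query))
    scores = {}
    for doc_id, body in docs.items():
        counts = Counter(tokenize(body))
        scores[doc_id] = sum(counts[t] for t in q_terms)
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [d for d in ranked[:k] if scores[d] > 0]


def build_prompt(query: str, docs: Dict[str, str], k: int = 2) -> str:
    # Retrieved passages become the context the model is told to rely on.
    context = "\n\n".join(f"[{d}]\n{docs[d]}" for d in top_k_docs(query, docs, k))
    return f"Answer using only the context below.\n\n{context}\n\nQuestion: {query}"


kb = {
    "vpn-setup": "To set up the VPN, install the client and sign in with SSO.",
    "expenses": "Submit expenses in the finance portal within 30 days.",
    "laptop": "New laptops are issued by IT on your first day.",
}
print(build_prompt("How do I set up the VPN?", kb))
```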

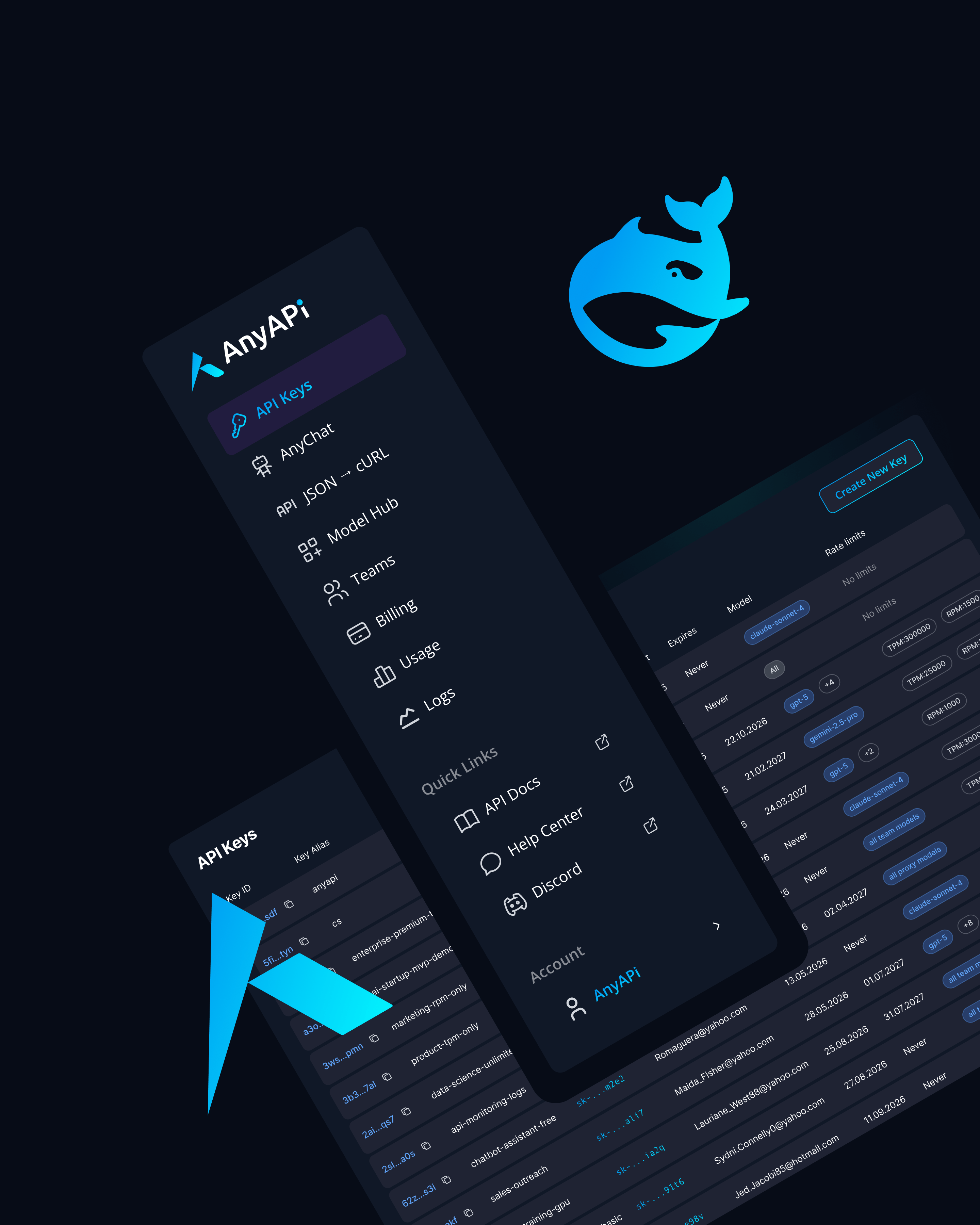

Why Use DeepSeek V3.1 Terminus (exacto) via AnyAPI.ai

Choosing AnyAPI.ai for deploying DeepSeek V3.1 Terminus (exacto) amplifies its value through:

- Unified API across Multiple Models

Streamline integration by accessing various models through a single API, simplifying development.

- One-click Onboarding, No Vendor Lock-in

Experience seamless onboarding with the flexibility to switch models without long-term commitments.

- Usage-based Billing

Only pay for what you use, optimizing cost without sacrificing access to powerful tools.

- Developer Tools and Infrastructure

Leverage production-grade infrastructure and rich developer tools to ensure robust application performance.

- Distinct Advantage over OpenRouter and AIMLAPI

AnyAPI.ai sets itself apart from other API aggregators with stronger provisioning, unified access, and comprehensive support and analytics.
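Because a unified API accepts the same request body for different models, switching or falling back between them can come down to changing one field. A minimal sketch, assuming an OpenAI-style body; the model IDs are hypothetical and `send` stands in for whatever HTTP call your client makes.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical model identifiers; the real IDs come from the provider's catalog.
MODELS = ("deepseek-v3.1-terminus-exacto", "fallback-model-a")


def complete_with_fallback(
    messages: List[Dict[str, str]],
    send: Callable[[Dict], str],
    models: Sequence[str] = MODELS,
) -> str:
    """Send the same body to each model in turn; return the first successful reply."""
    errors = []
    for model in models:
        body = {"model": model, "messages": messages}  # only the model field changes
        try:
            return send(body)
        except Exception as exc:  # e.g. timeout, rate limit, or provider outage
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


# Stand-in for a real HTTP call: the first model "times out".
def fake_send(body: Dict) -> str:
    if body["model"] == MODELS[0]:
        raise TimeoutError("simulated timeout")
    return f"answered by {body['model']}"


print(complete_with_fallback([{"role": "user", "content": "Hi"}], fake_send))
```

In practice you would likely retry only on transient failures (timeouts, rate limits, server errors) rather than on every exception, so that bad requests surface immediately.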