Advanced AI Language Model with Extended Context and Superior Reasoning

Claude Opus 4.6 is an advanced large language model developed by Anthropic, representing a significant evolution in the Claude model family. As the flagship offering in the Opus tier, this model delivers exceptional reasoning capabilities, extended context understanding, and robust safety alignment for enterprise and production environments.

Claude Opus 4.6 matters because it addresses critical challenges faced by developers building real-time AI applications: the need for nuanced reasoning, long-form document processing, and reliable output quality at scale. Positioned as Anthropic's most capable model, it excels in complex analytical tasks, multi-step problem solving, and sophisticated content generation that requires deep contextual awareness.

For production use cases, Claude Opus 4.6 offers the reliability and performance necessary for customer-facing applications, internal automation systems, and generative AI products that demand consistent quality. Its design balances capability with safety, making it suitable for regulated industries and applications where output accuracy is critical.

Key Features of Claude Opus 4.6

Extended Context Window

Claude Opus 4.6 supports a context window of up to 1,000,000 tokens, enabling processing of entire codebases, lengthy legal documents, technical manuals, and multi-chapter content in a single request. This extended capacity reduces the need for complex chunking strategies and helps preserve contextual coherence across long-form interactions.
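As a rough illustration of budgeting against the 1,000,000-token window, the sketch below estimates whether a set of documents fits in one request while leaving headroom for the response. It assumes about 4 characters per token, a common rule of thumb for English text rather than Claude's actual tokenizer. The function names and the 8,000-token output reserve are hypothetical. For exact counts, use a real token-counting tool.

```python
# Hypothetical pre-flight check: does a batch of documents fit in one request?
# ASSUMPTION: ~4 characters per token is a rough English-text heuristic,
# not Claude's actual tokenizer; use a real token counter for exact numbers.
CONTEXT_WINDOW_TOKENS = 1_000_000  # window size stated for Claude Opus 4.6
CHARS_PER_TOKEN = 4                # heuristic assumption


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], reserved_output_tokens: int = 8_000) -> bool:
    """Return True if the estimated input plus an output reserve fits in the window."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserved_output_tokens <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    manual = "x" * 2_000_000    # ~500,000 estimated tokens
    contract = "y" * 1_000_000  # ~250,000 estimated tokens
    print(fits_in_context([manual, contract]))        # True  (750k + 8k reserve)
    print(fits_in_context([manual, manual, manual]))  # False (1.5M + 8k reserve)
```

Reserving output tokens up front matters because the response also has to fit within the model's context budget.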

Superior Reasoning and Analysis

The model demonstrates advanced reasoning capabilities across mathematical problem solving, logical deduction, causal analysis, and multi-step planning. Claude Opus 4.6 excels at tasks requiring synthesis of information from multiple sources, identification of subtle patterns, and generation of well-structured arguments with supporting evidence.

Enhanced Safety and Alignment

Built with Constitutional AI principles, Claude Opus 4.6 incorporates robust safety measures that reduce harmful outputs, resist jailbreaking attempts, and maintain helpful behavior across diverse use cases. The model demonstrates improved refusal calibration, declining inappropriate requests while remaining maximally helpful for legitimate applications.

Multilingual Proficiency

Claude Opus 4.6 provides strong performance across major world languages including English, Spanish, French, German, Italian, Portuguese, Japanese, Korean, and Mandarin Chinese. This multilingual capability supports global product deployment without requiring separate models for different markets.

Code Generation and Technical Tasks

The model exhibits exceptional coding skills across popular programming languages including Python, JavaScript, TypeScript, Java, C++, Go, and Rust. Claude Opus 4.6 understands complex technical documentation, generates production-ready code with proper error handling, and provides detailed explanations of implementation decisions.

Low Latency for Real-Time Applications

Despite its advanced capabilities, Claude Opus 4.6 maintains response times suitable for interactive applications. Typical requests complete in roughly 2 to 5 seconds, depending on output length, making it viable for chatbots, live coding assistants, and real-time content generation workflows.
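Latency depends on prompt size, output length, and network conditions, so it is worth measuring it for your own workload before committing to an interactive design. The following is a minimal sketch using only the Python standard library. It assumes `call` is any zero-argument function that performs one model request. The example below substitutes a short `time.sleep` as a hypothetical stand-in for that request. The sketch reports median, 95th-percentile, and maximum wall-clock time.

```python
import statistics
import time
from typing import Callable


def measure_latency(call: Callable[[], object], runs: int = 20) -> dict[str, float]:
    """Time `call` `runs` times; return median, 95th-percentile, and max seconds."""
    if runs < 2:
        raise ValueError("runs must be at least 2 to compute percentiles")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()  # in real use: one full request/response round trip
        samples.append(time.perf_counter() - start)
    # 19 cut points split the data into 20 groups; the last one is the 95th percentile.
    # method="inclusive" keeps estimates within the observed range for small samples.
    p95 = statistics.quantiles(samples, n=20, method="inclusive")[-1]
    return {"p50": statistics.median(samples), "p95": p95, "max": max(samples)}


if __name__ == "__main__":
    # Stand-in for a real model call: sleeps about 10 ms per "request".
    print(measure_latency(lambda: time.sleep(0.01), runs=10))
```

Tail latency (p95) is usually a better guide than the average when deciding whether a chatbot or coding assistant will feel responsive.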

Use Cases for Claude Opus 4.6

Intelligent Customer Support Chatbots

Claude Opus 4.6 powers sophisticated customer support systems that handle complex inquiries requiring product knowledge synthesis, troubleshooting logic, and personalized response generation. The extended context window allows the model to reference entire conversation histories and product documentation simultaneously, delivering accurate resolutions without repetitive clarification requests.

Advanced Code Generation for Development Tools

Development teams integrate Claude Opus 4.6 into IDEs, code review platforms, and AI-assisted programming tools. The model generates complete functions with edge case handling, refactors legacy code while preserving functionality, writes comprehensive test suites, and explains architectural decisions in technical documentation.

Legal and Research Document Analysis

Law firms, research institutions, and compliance teams use Claude Opus 4.6 for contract review, case law analysis, and research synthesis. The model processes hundreds of pages of legal text, identifies relevant precedents, extracts key clauses, and generates structured summaries that highlight critical information and potential risks.

Enterprise Workflow Automation

Organizations deploy Claude Opus 4.6 to automate complex internal processes including report generation, data analysis, email triage, and meeting summarization. The model integrates with CRM systems, project management tools, and business intelligence platforms to transform unstructured information into actionable insights and formatted deliverables.

Knowledge Base Search and Retrieval

Companies implement Claude Opus 4.6 as the reasoning layer for enterprise knowledge systems, enabling employees to query internal documentation, policy databases, and technical wikis using natural language. The model understands nuanced questions, synthesizes information across multiple sources, and provides answers with source citations for verification.

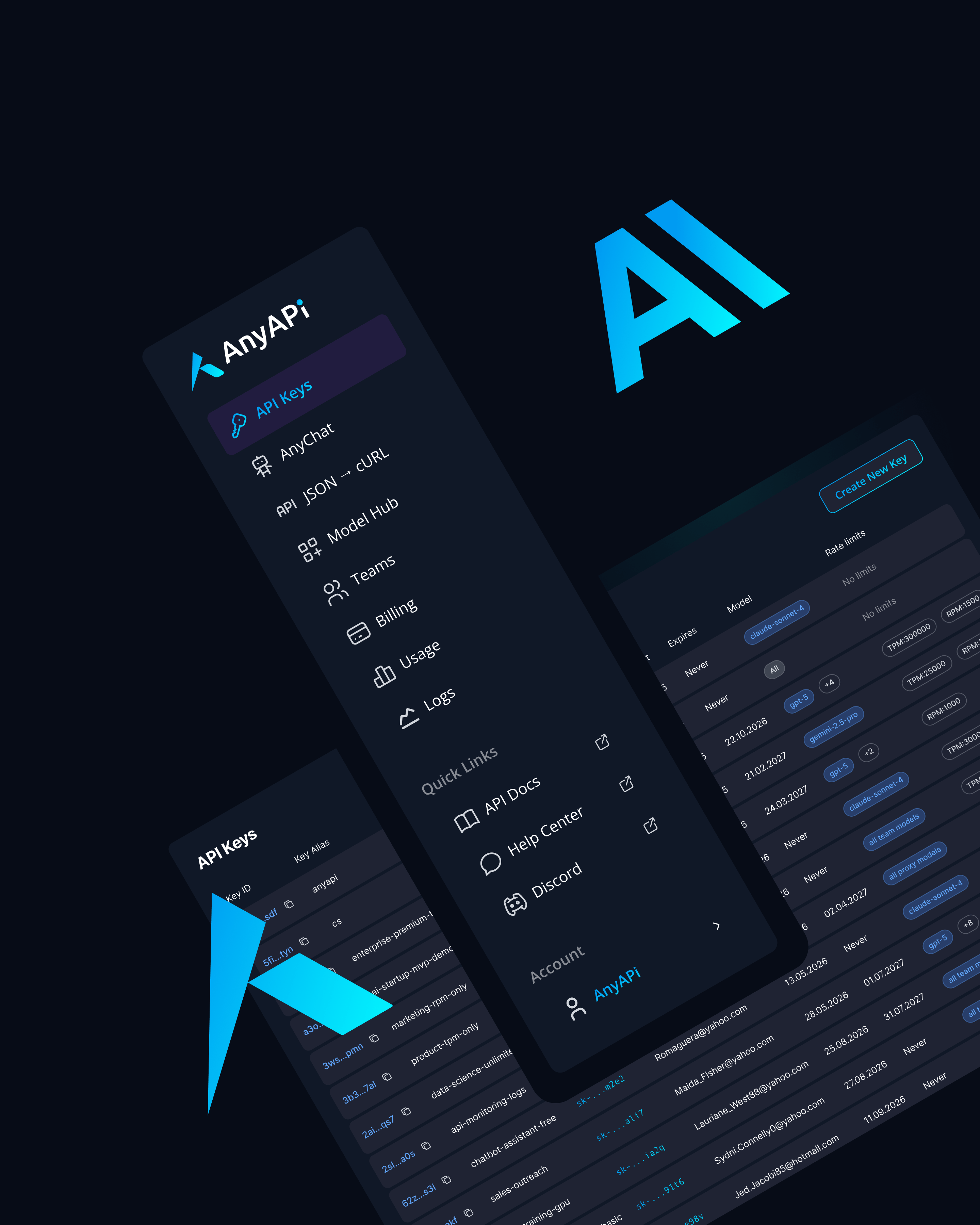

Why Use Claude Opus 4.6 via AnyAPI.ai

AnyAPI.ai provides streamlined access to Claude Opus 4.6 alongside other leading large language models through a unified API interface. This approach eliminates the complexity of managing multiple vendor relationships, separate authentication systems, and incompatible API schemas.

Developers benefit from one-click onboarding that provides immediate access to Claude Opus 4.6 without requiring a separate Anthropic account or navigating vendor-specific approval processes. The unified API structure means code written for one model can be adapted to others with minimal refactoring, preventing vendor lock-in and enabling rapid experimentation.
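To illustrate how code written for one model can target another with minimal refactoring, here is a sketch that builds, but does not send, a chat request in which only the model string changes. It assumes an OpenAI-style chat-completions request body. The endpoint URL and both model identifiers are hypothetical placeholders, not confirmed AnyAPI.ai values. Check the platform's own documentation for the real ones.

```python
import json
import urllib.request

# ASSUMPTIONS, not confirmed AnyAPI.ai documentation:
# - the gateway accepts an OpenAI-style chat-completions JSON body;
# - BASE_URL and the model identifiers below are hypothetical placeholders.
BASE_URL = "https://api.example.invalid/v1/chat/completions"


def build_chat_request(
    model: str, prompt: str, api_key: str, base_url: str = BASE_URL
) -> urllib.request.Request:
    """Build (but do not send) a POST request; only `model` differs between models."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 1024,
    }
    return urllib.request.Request(
        base_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Switching models is a one-string change; the rest of the request is identical.
opus_req = build_chat_request("claude-opus-4-6", "Summarize this contract.", "YOUR_API_KEY")
other_req = build_chat_request("another-model-id", "Summarize this contract.", "YOUR_API_KEY")
# Once BASE_URL points at the real endpoint, send with urllib.request.urlopen(opus_req).
```

Keeping the request shape in one function means experimenting with a different model touches a single argument rather than the integration code.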

Usage-based billing through AnyAPI.ai consolidates costs across multiple models into a single invoice with transparent pricing. Teams avoid the overhead of managing separate billing relationships and can allocate budget flexibly across different models based on use case requirements.

The platform provides production-grade infrastructure including automatic failover, request queuing, rate limit management, and detailed analytics dashboards. These developer tools reduce the operational burden of LLM integration and provide visibility into usage patterns, performance metrics, and cost attribution.
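Platform-side failover and rate limit management do not remove the need for client-side handling. Many clients also wrap calls in a retry loop for transient failures. The sketch below shows capped exponential backoff with full jitter. `TransientError` is a hypothetical stand-in for whatever your HTTP client raises on a 429 or 5xx response. The default delays are illustrative, not AnyAPI.ai recommendations.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a retryable failure such as HTTP 429 or 5xx."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            # The delay ceiling doubles each attempt (0.5 s, 1 s, 2 s, ...) up to max_delay.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, ceiling))  # full jitter: random wait in [0, ceiling]
    raise AssertionError("unreachable")  # the loop always returns or raises


if __name__ == "__main__":
    attempts = {"count": 0}

    def flaky() -> str:
        # Simulates a request that is rate limited twice, then succeeds.
        attempts["count"] += 1
        if attempts["count"] < 3:
            raise TransientError("rate limited")
        return "ok"

    print(call_with_backoff(flaky, sleep=lambda seconds: None))  # prints "ok"
    print(attempts["count"])  # 3
```

Injecting `sleep` keeps the function testable without real waits, and jitter keeps many clients from retrying in lockstep after a shared outage.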

Unlike alternatives such as OpenRouter and AIMLAPI, AnyAPI.ai offers dedicated capacity provisioning for high-volume applications, comprehensive technical support backed by SLA guarantees, and advanced analytics that track model performance across use cases and user segments.

Start Using Claude Opus 4.6 via API Today

Claude Opus 4.6 delivers the advanced reasoning, extended context understanding, and robust safety alignment that modern AI applications demand. For startups building differentiated products, development teams integrating LLM capabilities, and enterprises deploying customer-facing AI systems, this model provides the performance and reliability necessary for production environments.

Integrate Claude Opus 4.6 via AnyAPI.ai and start building today. The unified API interface, transparent pricing, and production-grade infrastructure eliminate integration complexity and accelerate time to market. Sign up, get your API key, and launch in minutes with access to Claude Opus 4.6 alongside other leading large language models through a single platform.