High-Performance Chinese-English Large Language Model for Production AI Systems

Kimi K2.5 is a large language model developed by Moonshot AI, designed for strong performance in both Chinese and English language processing. Positioned as a mid-tier flagship in the Kimi family, K2.5 balances lightweight efficiency against advanced reasoning, which makes it relevant for production environments that need bilingual support and real-time responsiveness.

This model represents a significant advancement for developers building generative AI systems that serve global markets, especially those requiring seamless Chinese-English integration. Kimi K2.5 offers production-grade reliability for real-time applications while maintaining the sophisticated reasoning abilities necessary for complex language tasks. For teams deploying AI-based products across Asian and Western markets, this model provides a strategic advantage through its native bilingual architecture and developer-friendly integration options.

Key Features of Kimi K2.5

Extended Context Processing

Kimi K2.5 supports a substantial context window that enables processing of lengthy documents, extended conversations, and complex multi-turn interactions without losing coherence. This extended context capability allows developers to build applications that maintain conversation history and reference large knowledge bases within a single API call.
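Even with a large context window, long conversations eventually need trimming before they are sent in a single call. The sketch below shows one common approach: keep the system message and as many of the most recent turns as fit a token budget. The `estimate_tokens` heuristic (roughly four characters per token) is an assumption for illustration only; it is not the model's tokenizer and will undercount Chinese text, so a real integration should use the provider's own token counts.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    # Crude assumption: about 4 characters per token.
    # This is NOT the model's real tokenizer.
    return max(1, len(text) // 4)


def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep system messages plus the newest turns that fit within `budget`."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    # Walk backwards so the most recent turns are kept first.
    for message in reversed(rest):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost

    # Restore chronological order before returning.
    return system + list(reversed(kept))
```

Dropping the oldest turns first is the simplest policy; summarizing older turns instead is a reasonable alternative when early context matters.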

Bilingual Language Excellence

The model demonstrates native-level proficiency in both Chinese and English, making it an ideal choice for cross-border applications, international customer support systems, and multilingual content generation. This dual-language strength eliminates the need for separate models or translation layers in bilingual workflows.

Real-Time Response Capabilities

Optimized for low-latency performance, Kimi K2.5 delivers responses quickly enough for interactive applications including live chatbots, real-time translation services, and instant content generation tools. The model architecture prioritizes response speed without sacrificing output quality.

Advanced Reasoning and Alignment

Built with strong alignment protocols and reasoning capabilities, Kimi K2.5 produces logically consistent outputs and follows complex instructions accurately. The model demonstrates enhanced safety measures and reduced hallucination rates compared to earlier-generation models.

Flexible Deployment Options

Kimi K2.5 integrates seamlessly through standard REST APIs and supports multiple SDKs, enabling developers to implement the model across diverse technology stacks and deployment environments without significant infrastructure modifications.
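As a rough illustration of what a REST integration might look like, the sketch below builds (but does not send) an HTTP request using only the Python standard library. The endpoint URL, the model identifier `kimi-k2.5`, and the chat-style JSON payload are all assumptions made for this example; check the provider's API reference for the actual values and schema.

```python
import json
import urllib.request

# Placeholder endpoint and hypothetical model id: both are assumptions,
# not values taken from any official documentation.
API_URL = "https://api.example.com/v1/chat/completions"
MODEL_ID = "kimi-k2.5"


def build_chat_request(api_key: str, user_text: str) -> urllib.request.Request:
    """Build a POST request carrying a single user message as JSON."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it; the request is built separately here so it can be inspected or tested without network access.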

Use Cases for Kimi K2.5

Cross-Border Customer Support Chatbots

Kimi K2.5 excels in powering customer support systems for SaaS platforms and e-commerce businesses operating in Chinese- and English-speaking markets. The model handles customer inquiries, processes returns, and provides product recommendations while maintaining natural conversation flow in both languages without requiring separate language detection or routing systems.

Bilingual Code Generation and Documentation

Development teams leverage Kimi K2.5 for code generation tasks where documentation and comments need to appear in both Chinese and English. The model assists in creating API documentation, generating boilerplate code, and translating technical specifications while preserving technical accuracy across languages.

Legal and Business Document Summarization

Law firms, consulting companies, and international businesses use Kimi K2.5 to process and summarize contracts, research papers, and business reports written in either Chinese or English. The model extracts key points, identifies critical clauses, and generates executive summaries that maintain the original document's intent and legal precision.

Enterprise Knowledge Base Search

Organizations with multilingual internal documentation deploy Kimi K2.5 to power semantic search across Chinese and English knowledge bases. The model understands queries in one language and retrieves relevant information regardless of the source document language, streamlining information access for distributed teams.

Automated Workflow and Report Generation

Product teams and operations departments integrate Kimi K2.5 into CRM systems and internal tools to automate report generation, meeting summaries, and status updates. The model processes data inputs and generates formatted reports in the preferred language for different stakeholder groups.
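One way to produce reports in each stakeholder's preferred language is to keep the data identical and vary only the language instruction in the prompt. The sketch below assembles such a prompt as a list of chat messages; the function name, the message format, and the two-entry language table are illustrative assumptions rather than part of any documented interface.

```python
from typing import Dict, List

# Hypothetical mapping from a short language code to the name used in the prompt.
LANGUAGE_NAMES = {"en": "English", "zh": "Simplified Chinese"}


def build_report_messages(
    metrics: Dict[str, float], language: str
) -> List[Dict[str, str]]:
    """Turn raw metrics into chat messages requesting a report in `language`."""
    if language not in LANGUAGE_NAMES:
        raise ValueError(f"unsupported language code: {language}")

    # Sort by metric name so the prompt is deterministic for the same input.
    data_lines = "\n".join(
        f"- {name}: {value}" for name, value in sorted(metrics.items())
    )
    system = (
        "You write concise status reports. "
        f"Respond in {LANGUAGE_NAMES[language]}."
    )
    user = f"Write a weekly status report from these metrics:\n{data_lines}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
```

Calling the function once per stakeholder group with the same `metrics` and a different `language` yields parallel prompts that differ only in the requested output language.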

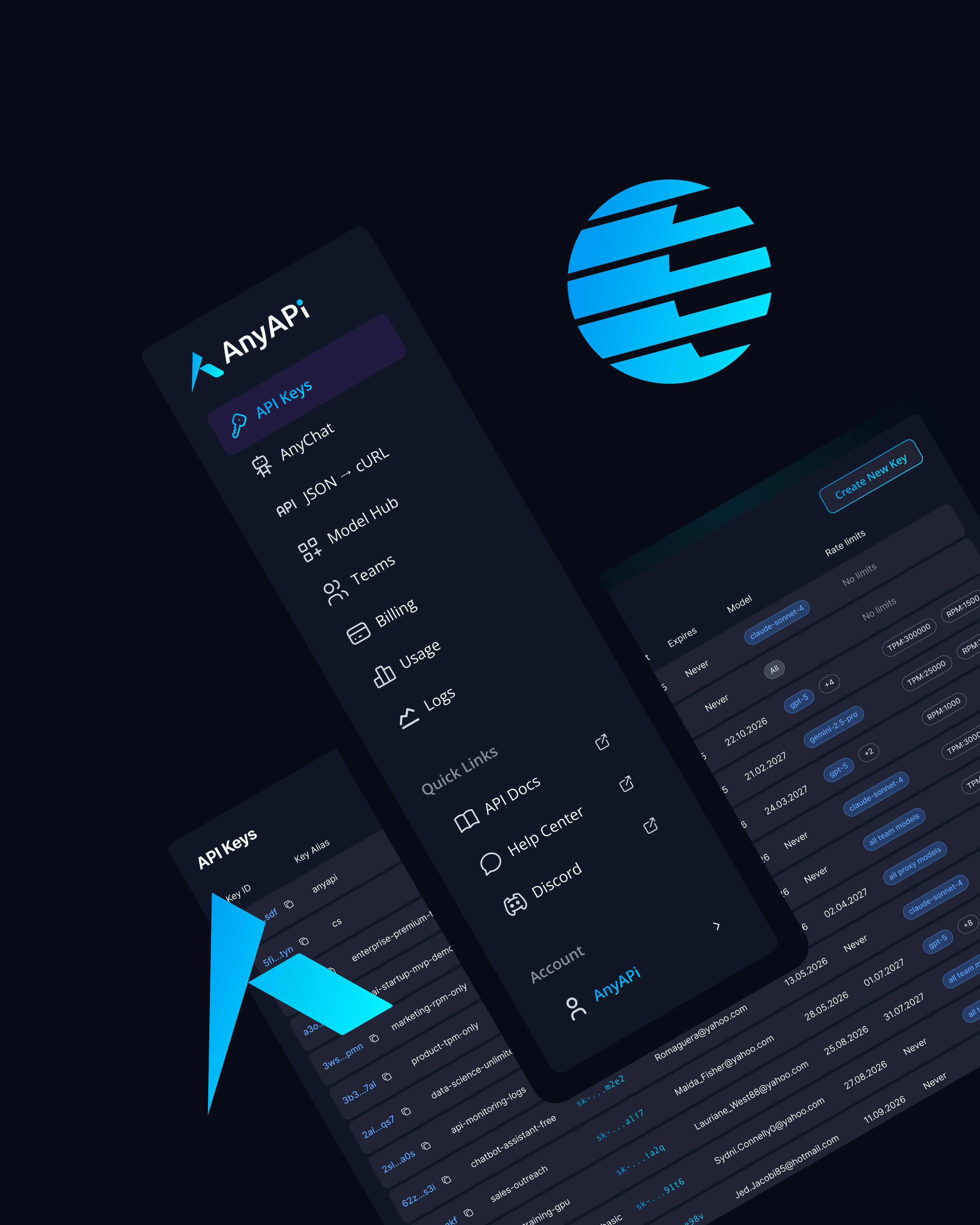

Why Use Kimi K2.5 via AnyAPI.ai

AnyAPI.ai enhances the value proposition of Kimi K2.5 by eliminating the complexity and vendor dependencies typically associated with accessing specialized language models. Through a unified API interface, developers can integrate Kimi K2.5 alongside other leading LLMs without managing multiple vendor relationships or authentication systems.

The platform provides one-click onboarding that removes friction from the integration process. Development teams can access Kimi K2.5 for production use within minutes rather than navigating lengthy approval processes or complex billing arrangements with individual model providers. This streamlined access prevents vendor lock-in and preserves architectural flexibility as project requirements evolve.

Usage-based billing through AnyAPI.ai delivers transparent cost management for Kimi K2.5 implementations. Teams pay only for actual consumption without minimum commitments or complicated tier structures, making it economically viable to experiment with the model during development and scale smoothly into production.

The platform provides production-grade infrastructure including load balancing, automatic failover, and request routing optimizations that improve reliability beyond direct API access. Developer tools such as request logging, performance analytics, and usage dashboards give teams visibility into how Kimi K2.5 performs within their specific applications.
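To make the idea of automatic failover concrete, here is a minimal client-side sketch: try each backend in order and return the first successful response. It illustrates the general pattern only and makes no claim about how AnyAPI.ai implements failover internally; the `backends` callables are hypothetical stand-ins for real API clients.

```python
from typing import Callable, List, Sequence


def call_with_failover(
    backends: Sequence[Callable[[str], str]], prompt: str
) -> str:
    """Return the first successful backend response, trying them in order."""
    errors: List[Exception] = []
    for backend in backends:
        try:
            return backend(prompt)
        except Exception as exc:
            # Record the failure and fall through to the next backend.
            errors.append(exc)
    raise RuntimeError(f"all {len(backends)} backends failed: {errors}")
```

A production version would usually add timeouts, retry limits, and backoff, and would catch narrower exception types than `Exception`.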

Unlike alternatives such as OpenRouter or AIMLAPI, AnyAPI.ai offers superior provisioning guarantees and unified access management that simplifies governance for enterprise teams. The platform delivers enhanced support resources and comprehensive analytics that help developers optimize their Kimi K2.5 implementations for both performance and cost efficiency.

Start Using Kimi K2.5 via API Today

Kimi K2.5 represents a strategic choice for development teams, startups, and ML infrastructure groups building AI products that serve Chinese- and English-speaking markets. The model delivers production-ready performance with the bilingual sophistication necessary for authentic user experiences across both languages.

For organizations scaling AI-based products internationally, Kimi K2.5 via AnyAPI.ai provides immediate access to advanced language model capabilities without vendor lock-in or complex integration requirements. The combination of specialized bilingual performance and unified API access creates a compelling solution for real-time applications, customer-facing tools, and enterprise workflow automation.

Integrate Kimi K2.5 via AnyAPI.ai and start building today. Sign up, get your API key, and launch your bilingual AI application in minutes with the infrastructure and support needed for production success.