Advanced Open-Source Language Model with Enterprise-Grade API Access and Real-Time Performance

DeepSeek V3.2 is a cutting-edge large language model developed by DeepSeek AI and the latest advancement in its flagship V3 series. This open-source model delivers strong performance across reasoning, coding, and multilingual tasks while remaining cost-effective for production deployments.

As part of the DeepSeek family, V3.2 combines the accessibility of open-source development with enterprise-grade capabilities. The model is well suited to real-time applications, generative AI systems, and production environments where developers need reliable performance without sacrificing quality or speed.

For teams building AI-integrated tools, DeepSeek V3.2 balances advanced capabilities with practical deployment flexibility, making it a strong choice for startups scaling AI-based products and for ML infrastructure teams that need consistent performance.

Key Features of DeepSeek V3.2

Ultra-Low Latency Performance

DeepSeek V3.2 averages response times of 150-200 ms for standard queries, making it suitable for real-time chat applications and interactive AI tools where user experience depends on immediate responses. Actual latency depends on prompt length, output length, and network conditions.
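Because latency varies with workload, it is worth measuring it against your own prompts before relying on a published average. The sketch below times any zero-argument function you supply (for example, one that sends a single short chat request and waits for the full reply) and reports the mean, median, and nearest-rank 95th percentile in milliseconds. Nothing in it is specific to DeepSeek or AnyAPI.ai; it measures full round-trip time, not time to first token.

```python
import math
import statistics
import time
from typing import Callable, Dict


def measure_latency_ms(call: Callable[[], object], runs: int = 20) -> Dict[str, float]:
    """Call `call` repeatedly and summarize wall-clock latency in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be >= 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()  # e.g. a function that sends one short chat request and waits for the reply
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95 = samples[math.ceil(0.95 * runs) - 1]  # nearest-rank 95th percentile
    return {
        "mean_ms": statistics.fmean(samples),
        "p50_ms": statistics.median(samples),
        "p95_ms": p95,
    }
```

Tail percentiles such as p95 usually matter more than the mean for interactive tools, since a few slow replies are what users notice.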

Extended Context Window

The model supports a context window of up to 128,000 tokens, enabling comprehensive document analysis, long-form content generation, and complex multi-turn conversations without truncating earlier context.
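A quick pre-flight check can tell you whether a prompt is likely to fit. This minimal sketch assumes that the prompt and the reply share the 128,000-token window (as in most chat APIs) and estimates token counts with a crude characters-per-token heuristic. `CHARS_PER_TOKEN = 4` is an assumption for English text, not DeepSeek's real tokenizer, so leave headroom or count with the actual tokenizer for exact limits.

```python
import math

CONTEXT_WINDOW = 128_000  # tokens, per the figure above
CHARS_PER_TOKEN = 4.0     # rough English-text heuristic (assumption), NOT the model's tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(prompt: str, max_output_tokens: int, window: int = CONTEXT_WINDOW) -> bool:
    """Check that the estimated prompt size plus the reserved reply budget fits the window."""
    return estimate_tokens(prompt) + max_output_tokens <= window


# A ~400,000-character document (~100,000 estimated tokens) plus a 4,000-token reply fits.
print(fits_in_context("a" * 400_000, max_output_tokens=4_000))
```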

Advanced Reasoning Capabilities

Built with enhanced logical reasoning and problem-solving abilities, DeepSeek V3.2 excels at mathematical problem solving, analytical tasks, and complex decision-making scenarios that require multi-step reasoning.

Superior Coding Skills

The model is highly proficient in more than 20 programming languages, including Python, JavaScript, Java, and C++, as well as newer frameworks, with particular strength in code explanation, debugging, and optimization.

Comprehensive Language Support

DeepSeek V3.2 performs at a native level in English and Chinese and offers functional capabilities in 15+ additional languages, making it suitable for global applications and multilingual user bases.

Production-Ready Deployment

Designed for enterprise environments, the model delivers consistent performance under high load, with built-in safety and alignment measures that help keep responses reliable and appropriate across diverse use cases.

Use Cases for DeepSeek V3.2

Intelligent Chatbots and Customer Support

Deploy DeepSeek V3.2 for sophisticated customer service applications where natural conversation flow and accurate problem resolution are essential. The model handles complex queries, maintains context across long interactions, and provides personalized responses that can raise customer satisfaction.
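Even a large context window eventually fills up in a long support session, so chat clients typically trim history before each request. The sketch below keeps the system prompt and then as many of the most recent turns as fit a token budget. It assumes OpenAI-style `{"role", "content"}` messages and reuses a rough 4-characters-per-token estimate; both are assumptions, not a documented DeepSeek or AnyAPI.ai requirement.

```python
import math
from typing import Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}, OpenAI-style chat format (assumed)


def approx_tokens(text: str) -> int:
    return math.ceil(len(text) / 4)  # crude heuristic, not the real tokenizer


def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep system messages plus the newest turns whose estimated size fits within `budget`."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(approx_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    for msg in reversed(turns):  # walk newest-first
        cost = approx_tokens(msg["content"])
        if used + cost > budget:
            break  # stop at the first turn that does not fit, so kept turns stay contiguous
        kept.append(msg)
        used += cost
    return system + list(reversed(kept))  # restore chronological order
```

Dropping whole turns from the oldest end keeps the remaining conversation coherent; summarizing dropped turns into a short note is a common refinement.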

Advanced Code Generation and Development Tools

Integrate DeepSeek V3.2 into IDEs and development platforms to provide real-time code suggestions, automated documentation generation, and intelligent debugging assistance. Given relevant project context in the prompt, the model accelerates development workflows by generating code snippets ready for review and integration.
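Real-time suggestions in an editor usually rely on streamed responses, so text appears as it is generated. The sketch below parses server-sent-event lines in the OpenAI-style streaming format (`data: {json}` chunks carrying `choices[].delta.content`, terminated by `data: [DONE]`). That the endpoint you call uses this exact format is an assumption; check your provider's streaming documentation.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text deltas from OpenAI-style server-sent-event lines (format assumed)."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alives and comment lines such as ": ping"
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            piece = choice.get("delta", {}).get("content")
            if piece:  # role-only or empty deltas carry no text
                yield piece
```

An IDE plugin would feed this generator the response body line by line and append each piece to the suggestion as it arrives.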

Document Analysis and Summarization

Leverage the extended context window for legal document review, research paper analysis, and comprehensive content summarization. DeepSeek V3.2 processes lengthy documents while maintaining accuracy and extracting key insights for decision-making processes.
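Documents that exceed even a 128,000-token window can still be handled by summarizing overlapping chunks and then summarizing the summaries. This minimal sketch shows only the splitting step, using fixed character windows with overlap so that sentences cut at a boundary appear in both neighboring chunks. Character-based sizing is a simplification; the chunk size you choose should stay well under the context limit.

```python
from typing import List


def chunk_text(text: str, chunk_chars: int, overlap_chars: int) -> List[str]:
    """Split text into fixed-size character windows that overlap by `overlap_chars`."""
    if not 0 <= overlap_chars < chunk_chars:
        raise ValueError("need 0 <= overlap_chars < chunk_chars")
    step = chunk_chars - overlap_chars
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_chars])
        if start + chunk_chars >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_chars=4, overlap_chars=1))
# ['abcd', 'defg', 'ghij']
```

Each chunk would then be sent with a summarization prompt, and the partial summaries combined in a final request.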

Workflow Automation and Business Intelligence

Implement automated report generation, CRM data analysis, and internal process optimization using DeepSeek V3.2's reasoning capabilities. The model transforms raw business data into actionable insights and automates routine analytical tasks.
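Automated reporting works best when the model's output is machine-readable. A common pattern, assumed here rather than prescribed by DeepSeek or AnyAPI.ai, is to ask for a JSON object and validate it before it reaches downstream systems. The sketch accepts either a bare JSON reply or one wrapped in a Markdown code fence, and rejects replies that are not objects or lack required keys. The key names in the test are hypothetical.

```python
import json
import re
from typing import Any, Dict, Iterable

TICKS = "`" * 3  # a Markdown code fence, built indirectly to keep this listing readable
FENCED = re.compile(re.escape(TICKS) + r"(?:json)?\s*(.*?)" + re.escape(TICKS), re.DOTALL)


def parse_report(reply: str, required: Iterable[str]) -> Dict[str, Any]:
    """Parse a JSON object from a model reply and check that required keys are present."""
    match = FENCED.search(reply)
    payload = match.group(1) if match else reply  # tolerate fenced replies
    data = json.loads(payload)  # raises json.JSONDecodeError (a ValueError) on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in required if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data
```

Failing loudly on malformed output lets a pipeline retry the request or route it for human review instead of writing bad data into a report.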

Enterprise Knowledge Base and Search

Create intelligent knowledge management systems that understand complex queries and, paired with a retrieval layer over enterprise databases, return contextually relevant information, improving employee onboarding and information discovery.

Why Use DeepSeek V3.2 via AnyAPI.ai

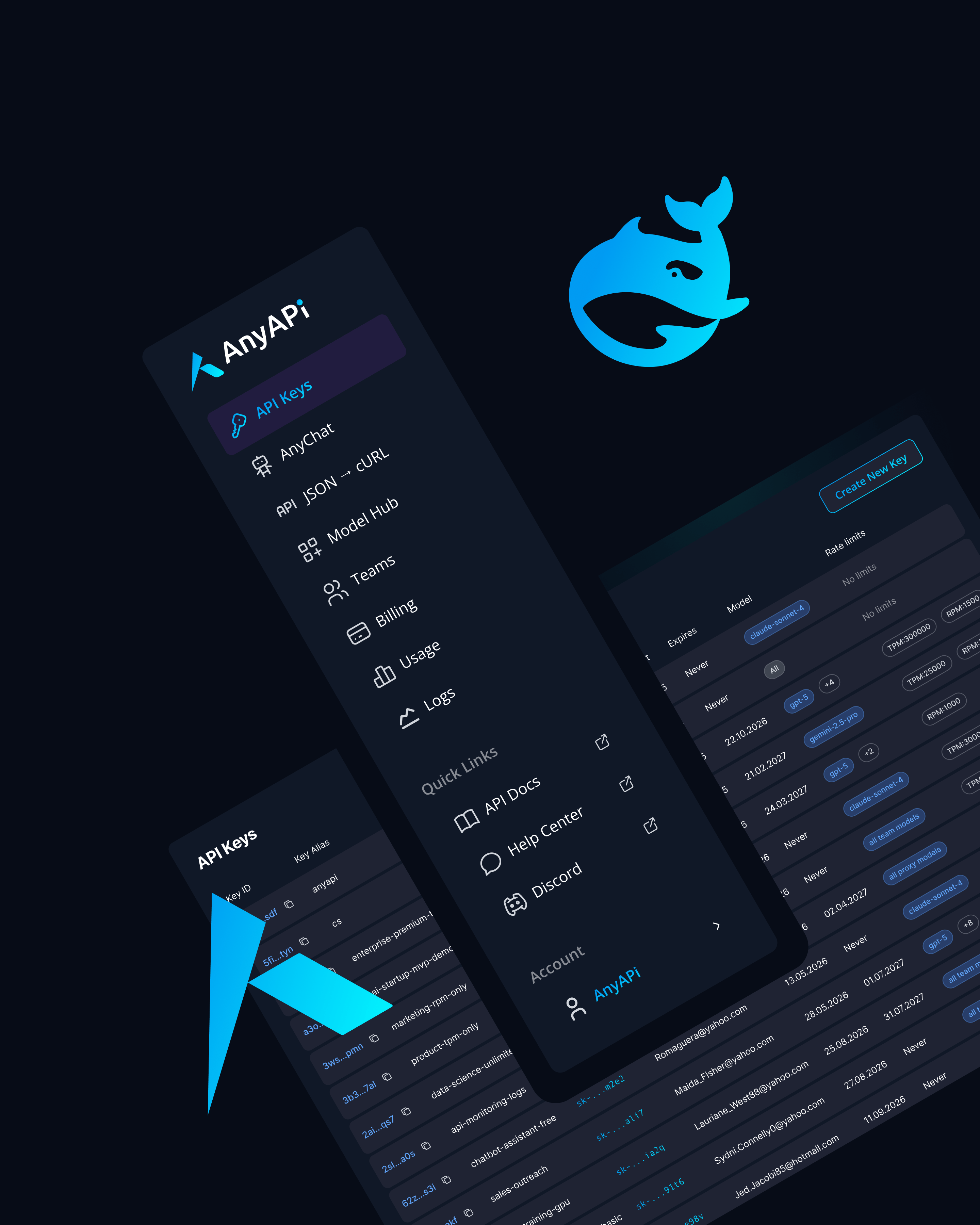

AnyAPI.ai transforms access to DeepSeek V3.2 through a unified API infrastructure that eliminates the complexity of managing multiple model integrations. Instead of navigating separate vendor relationships and API specifications, developers access DeepSeek V3.2 alongside other leading models through a single, consistent interface.
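To illustrate what a single, consistent interface means in practice, the sketch below builds (but does not send) a chat request in the widely used OpenAI-style format, where switching models changes only the `model` field. The base URL, the model IDs, and the `ANYAPI_API_KEY` environment variable are hypothetical placeholders, and the request shape is an assumption; take the real endpoint, model identifiers, and authentication scheme from AnyAPI.ai's documentation.

```python
import json
import os
import urllib.request

BASE_URL = "https://api.example.com/v1"  # hypothetical placeholder, not AnyAPI.ai's real endpoint


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,  # the only field that changes when switching models
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("ANYAPI_API_KEY", "")  # hypothetical variable name
    for model_id in ("deepseek-v3.2", "another-model-id"):  # hypothetical model IDs
        req = build_chat_request(model_id, "Summarize our Q3 churn drivers.", key)
        print(req.get_method(), req.full_url, json.loads(req.data)["model"])
        # urllib.request.urlopen(req) would send it once the placeholders are real.
```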

The platform offers one-click onboarding with immediate API key generation, removing traditional barriers to model deployment. Usage-based billing charges only for actual consumption, with no minimum commitments or complex pricing tiers.
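Under usage-based billing, the bill is simply the sum of what each request consumed. The sketch below assumes the common scheme of separate per-million-token rates for input and output tokens; the rates in the usage line are made-up placeholders, not DeepSeek or AnyAPI.ai pricing.

```python
from typing import Iterable, Tuple


def request_cost_usd(input_tokens: int, output_tokens: int,
                     input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost of one request when input and output tokens are billed per million tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def total_cost_usd(usage: Iterable[Tuple[int, int]],
                   input_price_per_m: float, output_price_per_m: float) -> float:
    """Sum per-request costs over (input_tokens, output_tokens) records."""
    return sum(request_cost_usd(i, o, input_price_per_m, output_price_per_m) for i, o in usage)


# 2M input and 0.5M output tokens at placeholder rates of $0.30/M and $0.50/M.
print(round(total_cost_usd([(2_000_000, 500_000)], 0.30, 0.50), 2))  # 0.85
```

Recording the token counts returned with each response and feeding them into a function like this makes it straightforward to reconcile usage against the invoice.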

AnyAPI.ai's production-grade infrastructure includes automatic load balancing, failover protection, and real-time monitoring to keep DeepSeek V3.2 performance consistent even during peak usage. This setup is designed to be more reliable than typical direct model access or basic aggregation services.
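Server-side failover does not remove the need for client-side resilience, since timeouts and dropped connections can still reach your code. The sketch below retries a call on transient errors using exponential backoff with full jitter, a standard technique rather than anything specific to AnyAPI.ai. Treating `TimeoutError` and `ConnectionError` as the transient set is an assumption; map your HTTP client's errors (and, for example, HTTP 429 or 503 responses) onto it as appropriate.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")

# Exception types treated as transient; an assumption, adjust to your HTTP client's errors.
TRANSIENT: Tuple[Type[BaseException], ...] = (TimeoutError, ConnectionError)


def with_retries(call: Callable[[], T], attempts: int = 4, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `call`, retrying transient errors with exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return call()
        except TRANSIENT:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last transient error
            # Full jitter: sleep a random duration between 0 and base_delay * 2**attempt.
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    raise AssertionError("unreachable: the loop always returns or raises")
```

Randomizing the delay spreads out retries from many clients so they do not hit the service in synchronized bursts; the injectable `sleep` parameter makes the function easy to test without real waiting.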

Unlike OpenRouter or AIMLAPI, AnyAPI.ai provides enhanced provisioning with dedicated capacity allocation, comprehensive analytics dashboards, and proactive support that helps teams optimize their LLM implementations for maximum efficiency and cost-effectiveness.

Start Using DeepSeek V3.2 via API Today

DeepSeek V3.2 represents the optimal choice for developers, startups, and ML teams seeking advanced language model capabilities without the complexity and cost barriers of traditional enterprise AI solutions. Its combination of superior performance, open-source accessibility, and production-ready reliability addresses the core needs of modern AI-integrated applications.

The model's exceptional coding abilities, extended context window, and ultra-low latency make it particularly valuable for teams building real-time applications, automated workflows, and intelligent user interfaces that require consistent, high-quality responses.

Integrate DeepSeek V3.2 via AnyAPI.ai and start building today. Sign up, get your API key, and launch in minutes with the unified platform that eliminates vendor complexity while maximizing your AI application potential.